How to use AWS EventBridge.

What is AWS EventBridge?

AWS EventBridge (officially named Amazon EventBridge) is a serverless event bus service from Amazon Web Services (AWS) that routes events between AWS services, your own applications, and third-party SaaS applications. It gives your applications a central place to publish and receive events, which makes it easier to build event-driven architectures.

It can react to state changes in both AWS and non-AWS resources.

How does AWS EventBridge work?

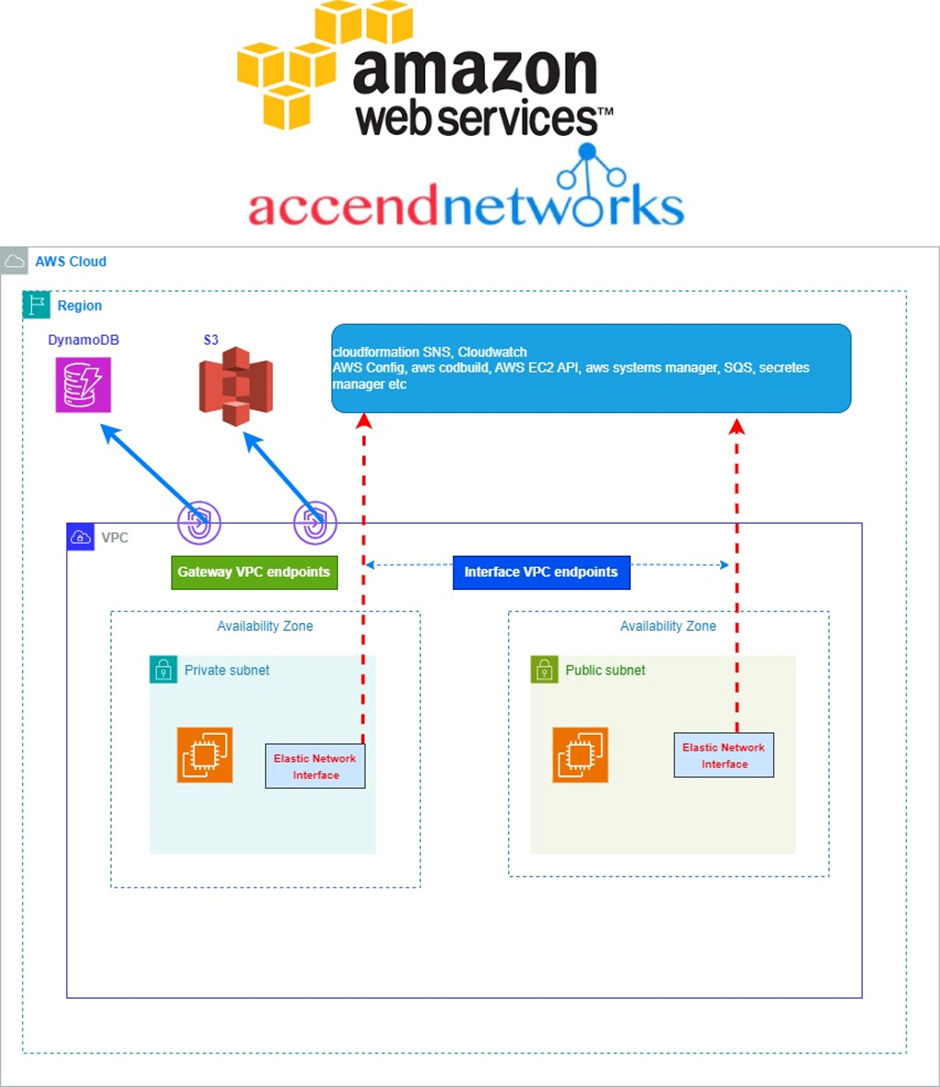

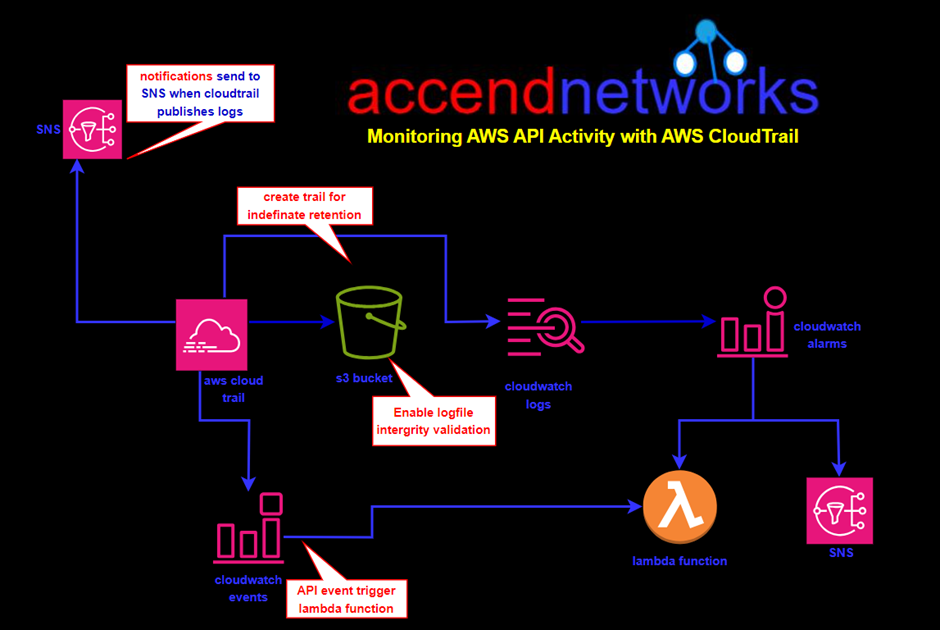

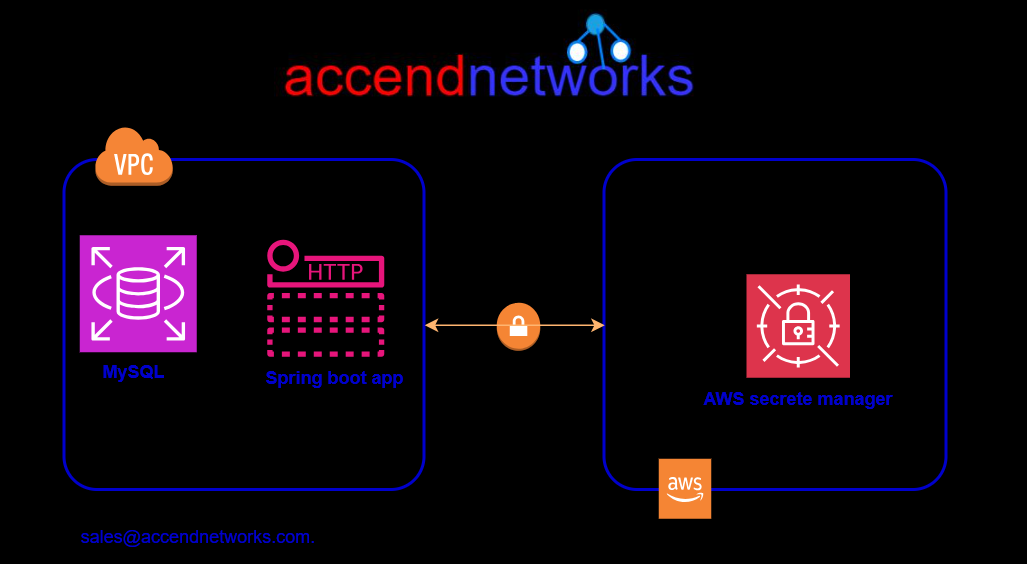

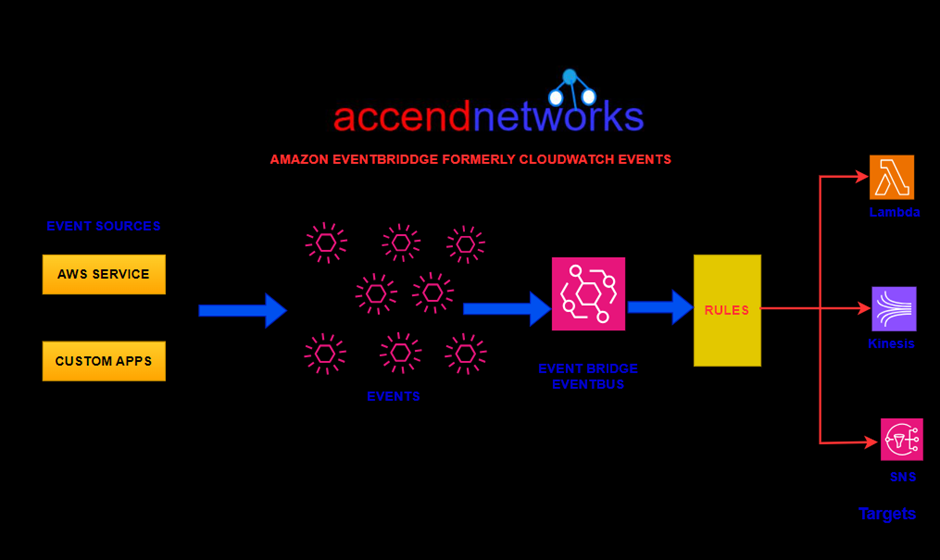

AWS EventBridge works by routing events between AWS services, your applications, and third-party SaaS applications. The event bus is the central component of EventBridge: it receives events from different sources and routes them to different targets.

An event source is a service or application that generates events, and an event target is a service or application that receives events. You can set up rules in EventBridge to route events from an event source to one or more event targets.

As the architectural diagram above shows, EventBridge starts with event sources. State changes in those resources are sent as events to an EventBridge event bus. Rules then evaluate those events and send the matching ones on to various destinations.

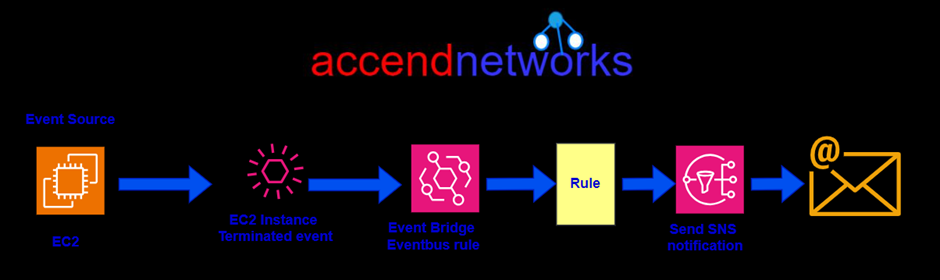

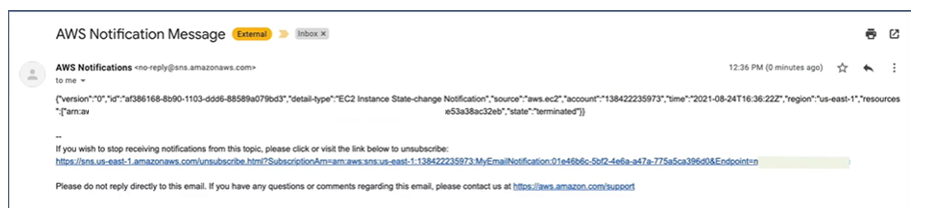

Let’s take a look at another example. Say we have an EC2 instance as an event source, and the instance is terminated. That termination event is forwarded to the EventBridge event bus. A rule matches the event and sends it on to a destination, in this case an SNS topic, which then sends a notification to an email address.
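To make the example more concrete, here is a rough sketch of what an EC2 termination event looks like by the time it reaches the event bus. The field names follow the EC2 state-change event format, but the account ID, instance ID, region, and timestamp are made-up placeholders, not values from this walkthrough.

```python
import json

# Illustrative EC2 state-change event.
# All IDs, the region, and the timestamp below are placeholders.
sample_event = {
    "version": "0",
    "id": "00000000-0000-0000-0000-000000000000",
    "detail-type": "EC2 Instance State-change Notification",
    "source": "aws.ec2",
    "account": "123456789012",
    "time": "2024-01-01T12:00:00Z",
    "region": "us-east-1",
    "resources": [
        "arn:aws:ec2:us-east-1:123456789012:instance/i-0123456789abcdef0"
    ],
    # The "detail" section carries the service-specific payload.
    "detail": {"instance-id": "i-0123456789abcdef0", "state": "terminated"},
}

print(json.dumps(sample_event, indent=2))
```

The `source`, `detail-type`, and `detail` fields are the ones a rule usually matches on.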

Terms associated with EventBridge

Events: An event indicates a change in an environment, e.g. an EC2 instance changing from pending to running.

Rules: A rule matches incoming events and routes them to targets. An event reaches a rule's targets only if it matches the pattern specified in that rule.

Targets: A target is a resource that receives events in JSON format when a rule matches. Targets can be Lambda functions, Amazon EC2 instances, Amazon Kinesis Data Streams, SNS topics, SQS queues, CI/CD pipelines (e.g. CodePipeline), Step Functions state machines, etc.

Event Buses: An event bus receives events. When you create a rule, you associate it with a specific event bus, and the rule is matched only against events received by that event bus.

When an event is generated by an event source, it is sent to the EventBridge event bus. If the event matches one or more rules that you’ve defined, EventBridge forwards the event to the corresponding event targets.
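The matching idea can be sketched in a few lines of Python. This is a deliberately simplified toy matcher, not EventBridge's actual implementation: it only handles exact-value lists and nested fields, and ignores operators such as prefix or numeric matching. The `matches` function and the sample events are hypothetical names made up for illustration.

```python
def matches(pattern: dict, event: dict) -> bool:
    """Return True if every field in the pattern is satisfied by the event."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            # Nested pattern: recurse into the nested part of the event.
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        else:
            # Leaf: the pattern lists the allowed values for this field.
            actual_values = actual if isinstance(actual, list) else [actual]
            if not any(value in expected for value in actual_values):
                return False
    return True


pattern = {
    "source": ["aws.ec2"],
    "detail-type": ["EC2 Instance State-change Notification"],
    "detail": {"state": ["terminated"]},
}

terminated_event = {
    "source": "aws.ec2",
    "detail-type": "EC2 Instance State-change Notification",
    "detail": {"instance-id": "i-0123456789abcdef0", "state": "terminated"},
}
running_event = {
    "source": "aws.ec2",
    "detail-type": "EC2 Instance State-change Notification",
    "detail": {"instance-id": "i-0123456789abcdef0", "state": "running"},
}

print(matches(pattern, terminated_event))  # True
print(matches(pattern, running_event))     # False
```

Fields that the pattern does not mention (here, `instance-id`) are ignored, so a pattern only needs to list what it cares about.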

Now let’s get our hands dirty.

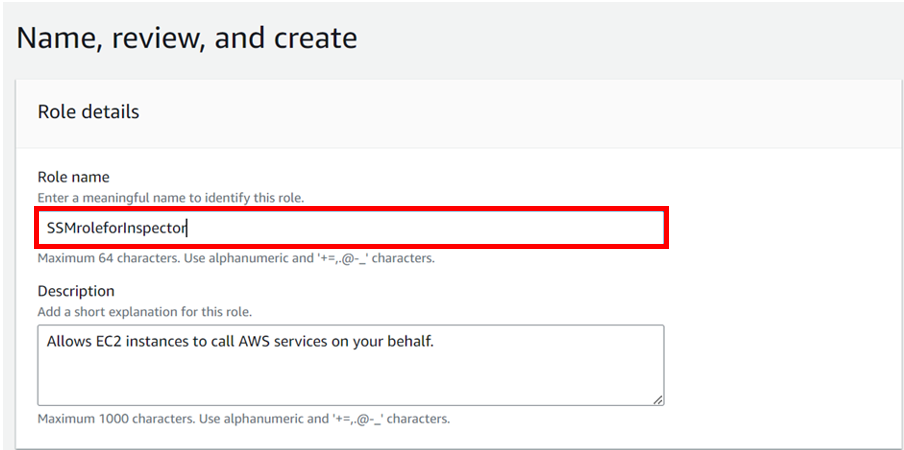

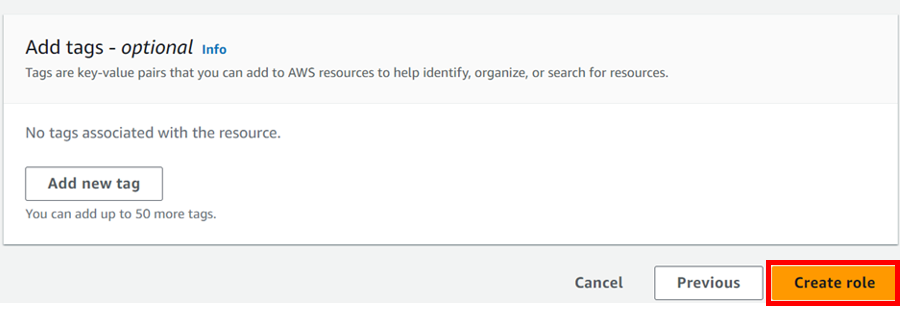

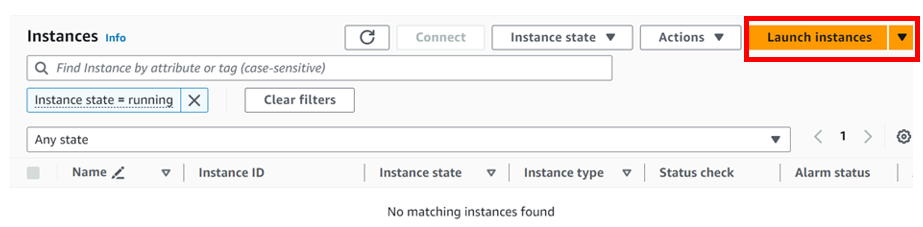

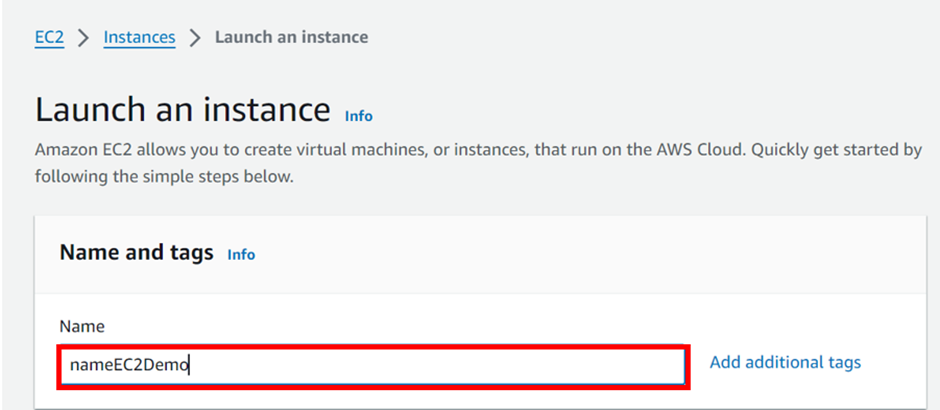

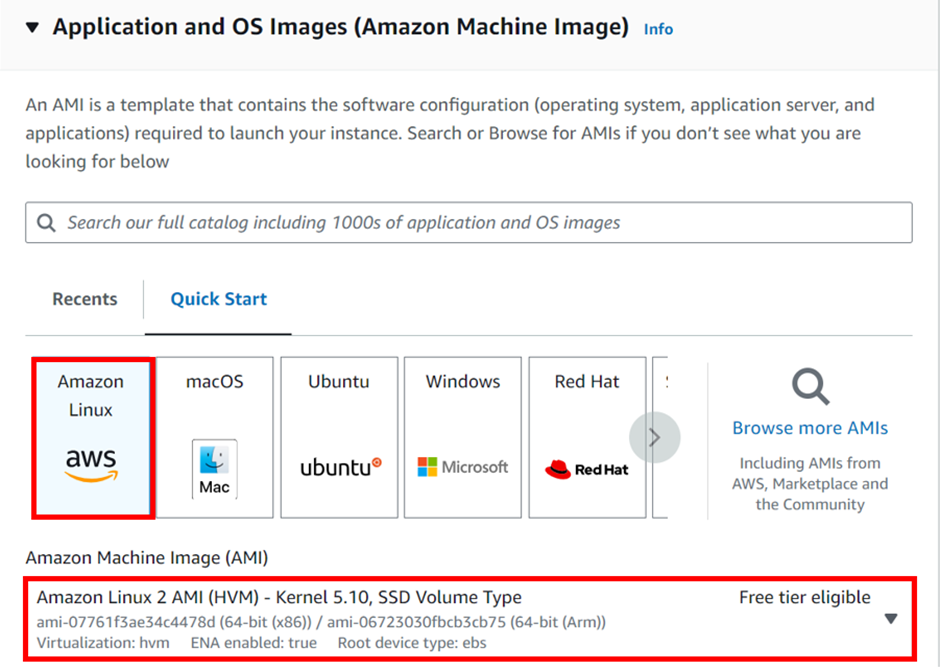

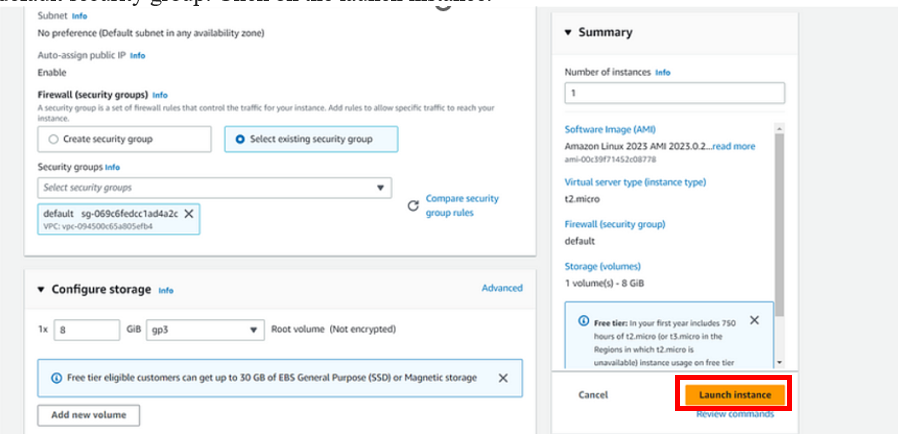

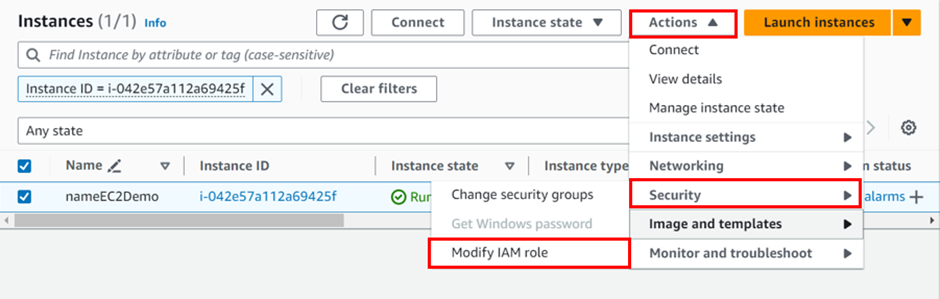

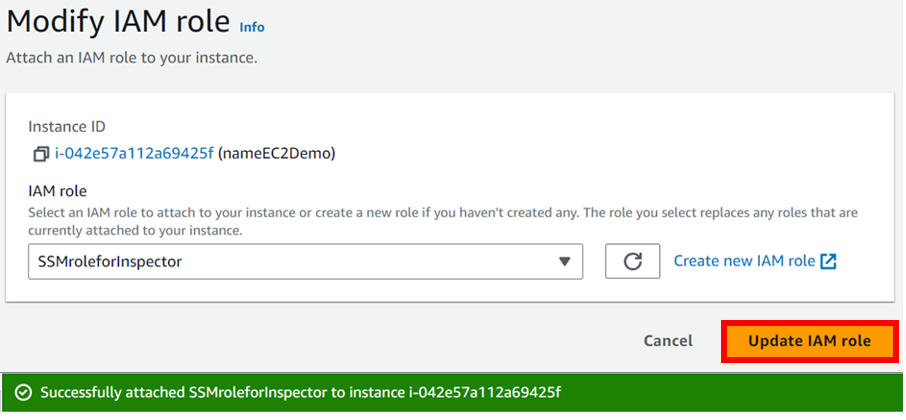

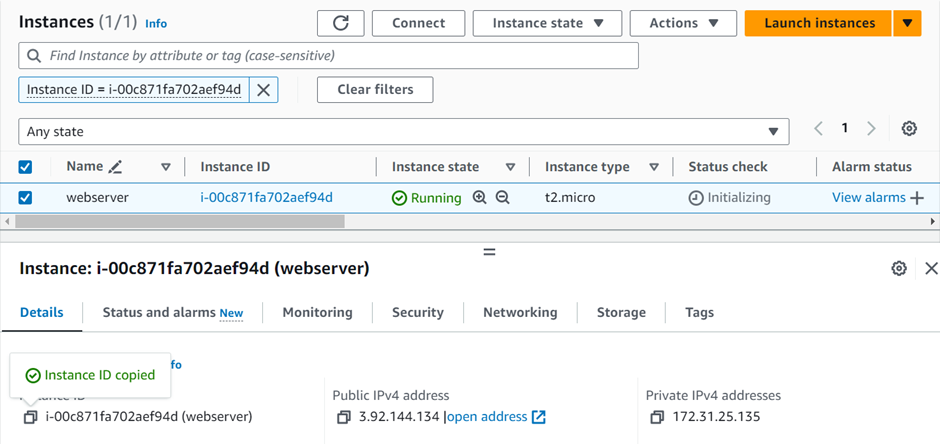

Log in to the AWS Management Console, launch an instance, and copy the instance ID.

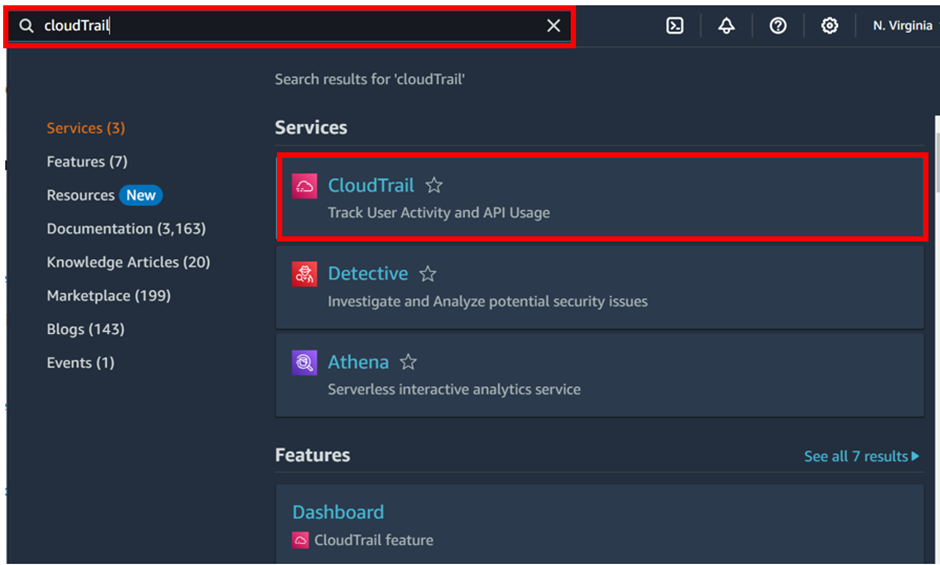

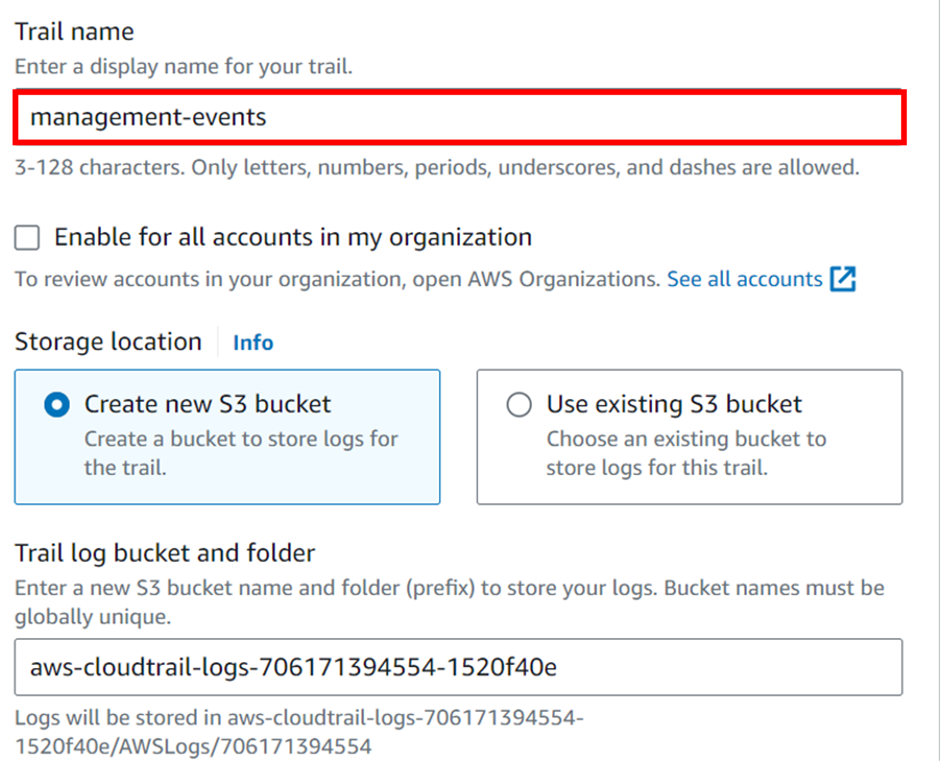

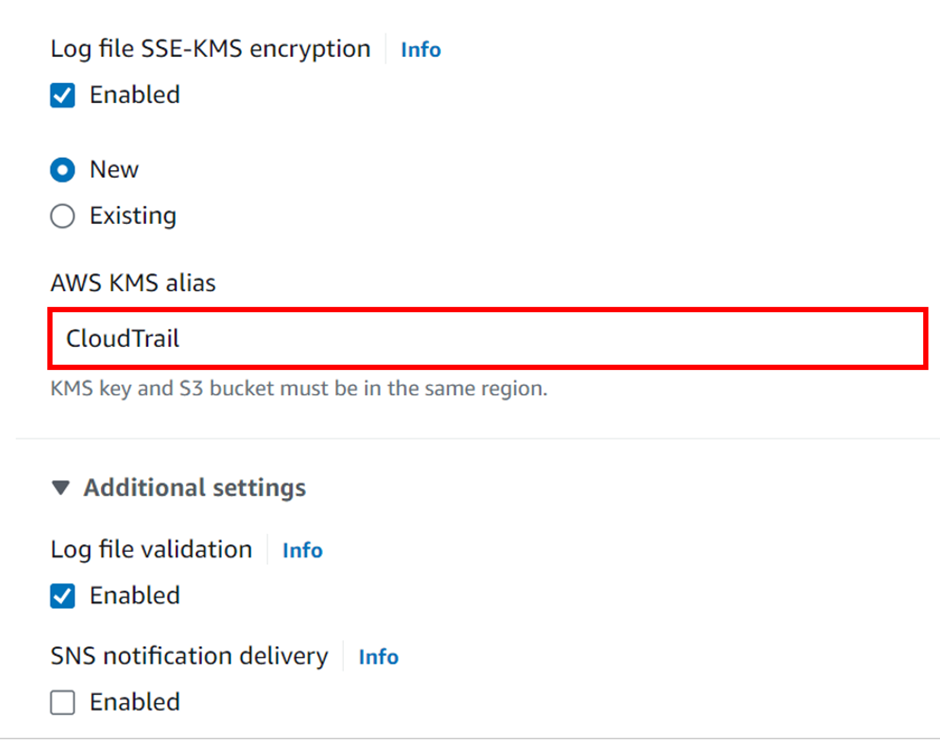

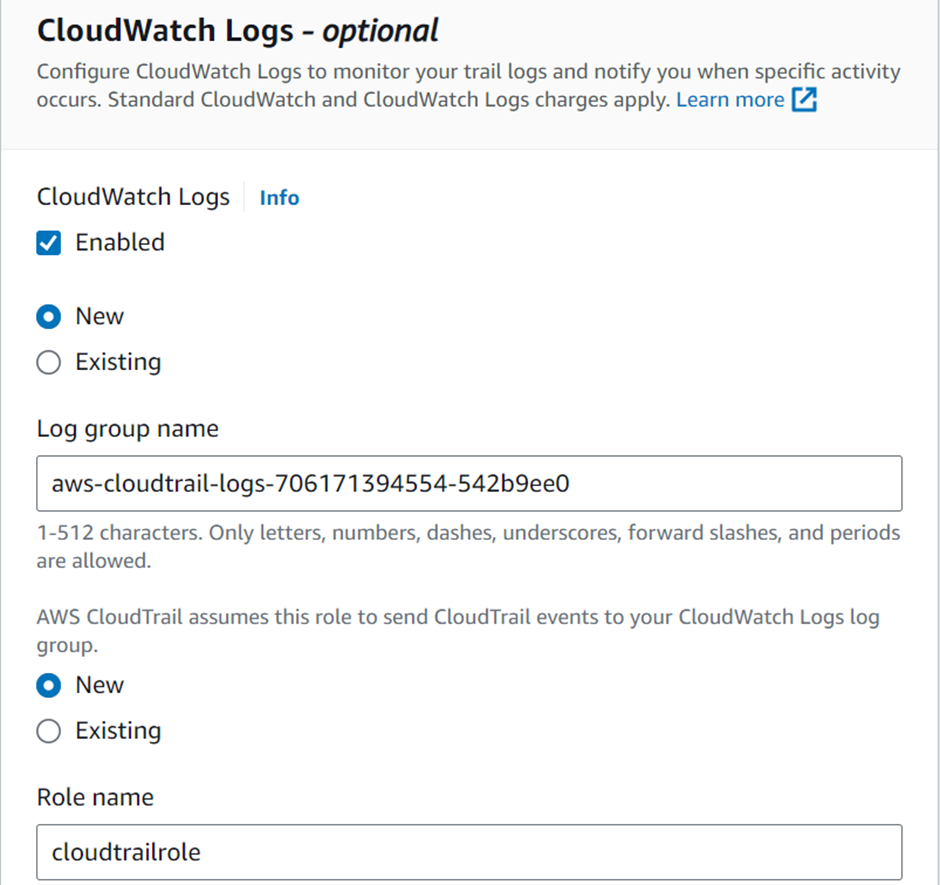

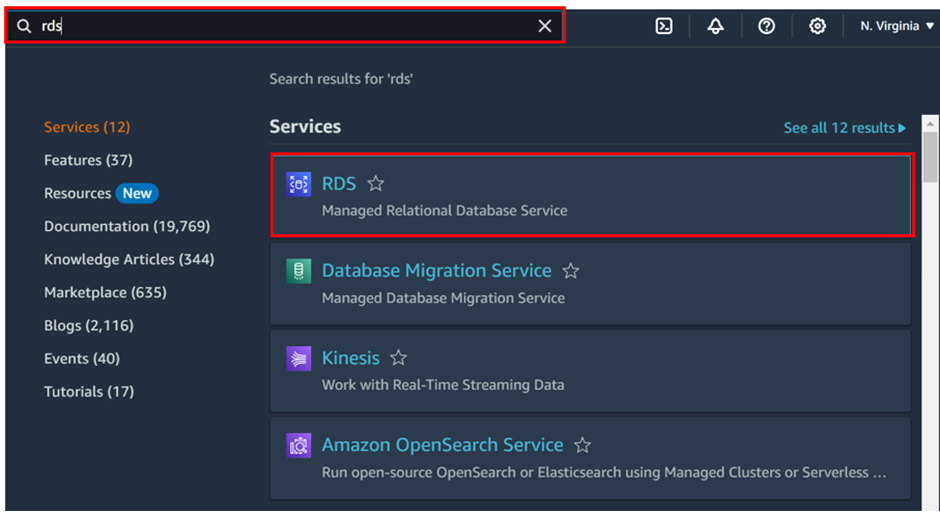

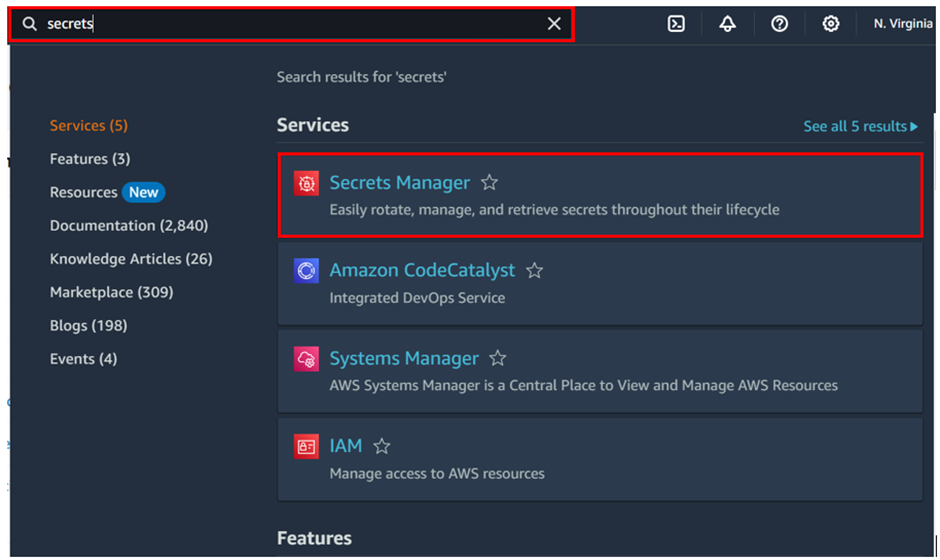

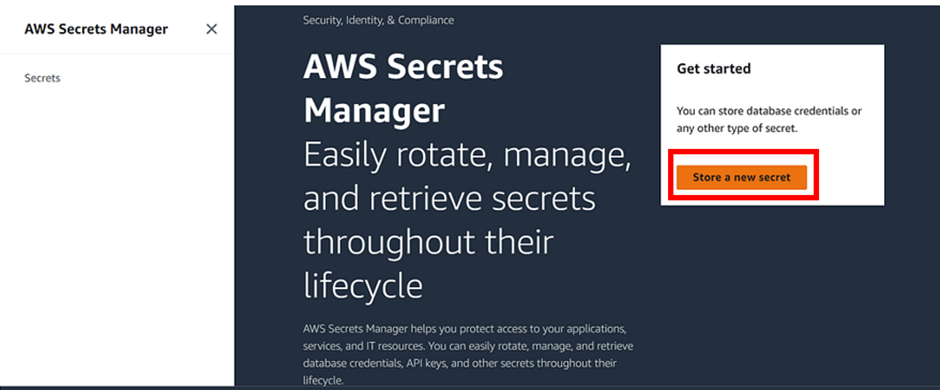

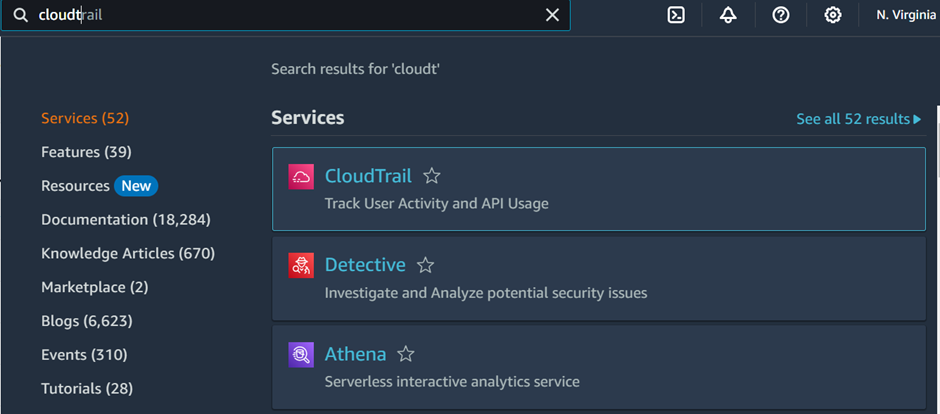

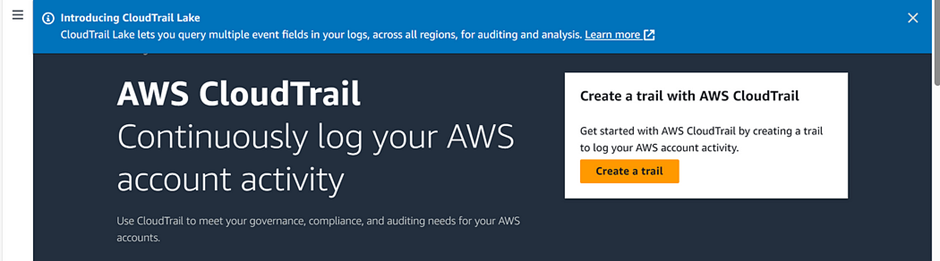

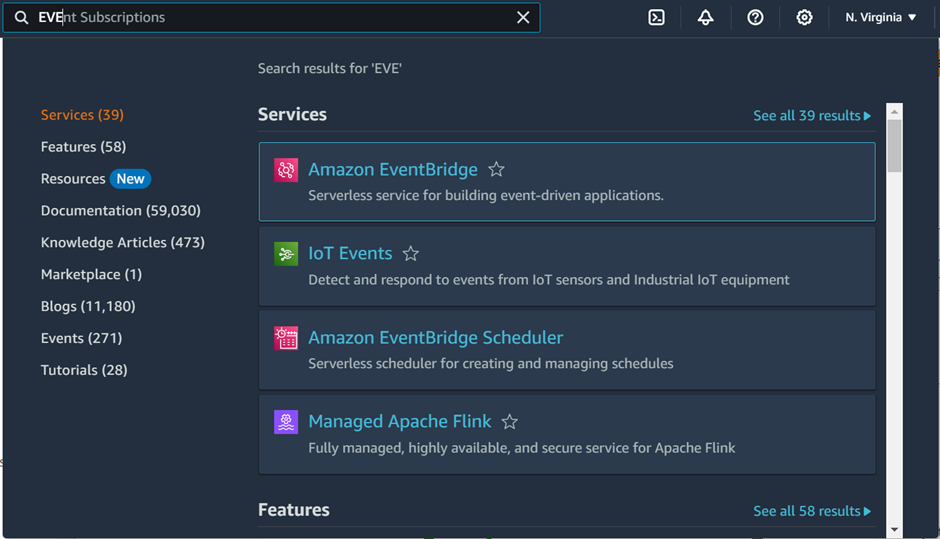

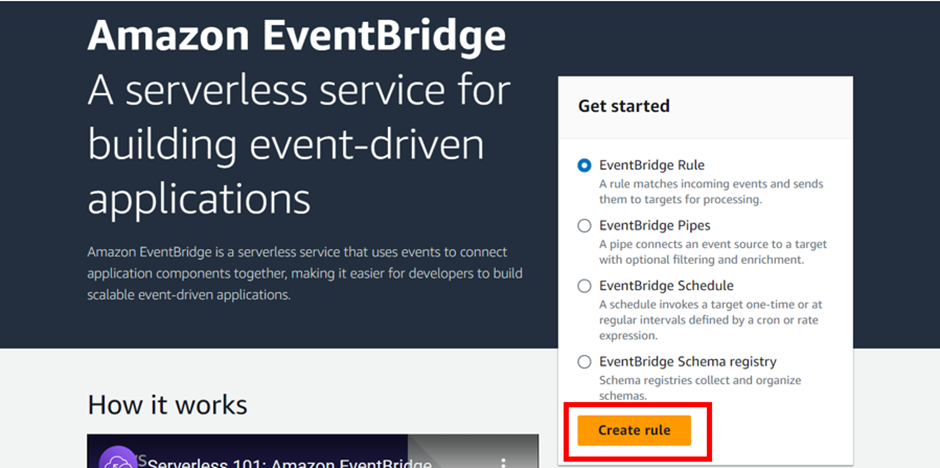

Then, in the search box, type EventBridge and select EventBridge under Services. In the EventBridge dashboard, click Create rule.

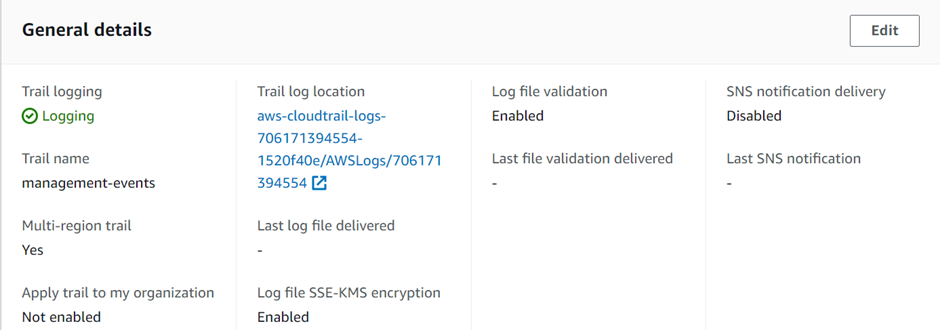

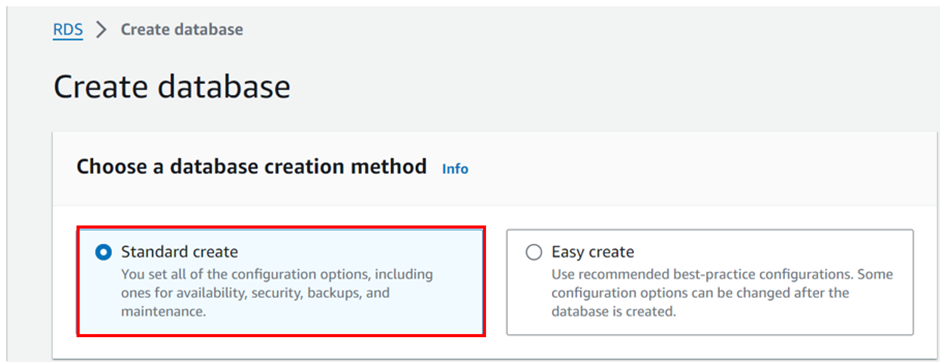

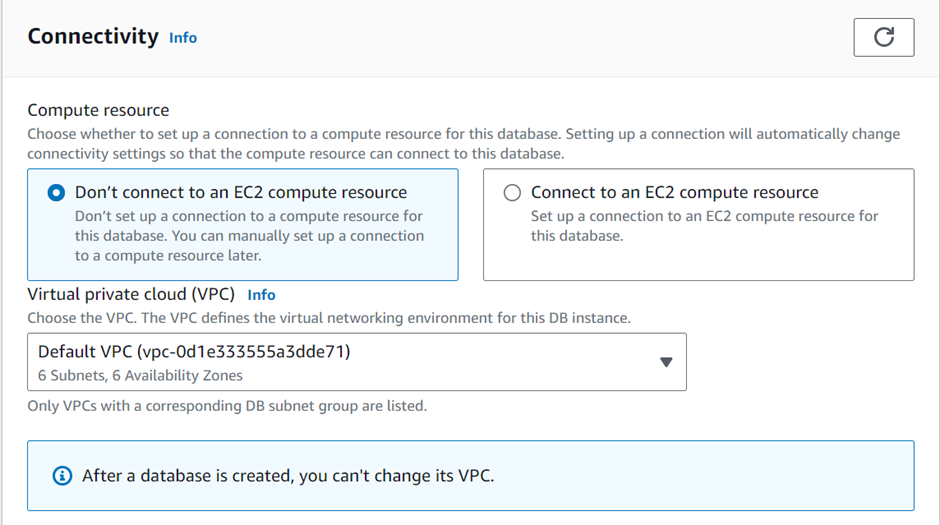

In the Create rule dashboard, give your rule a name. Call it EC2-state-change (rule names cannot contain spaces). For the event bus, choose default, then toggle on the option to enable the rule on the selected event bus. Click Next.

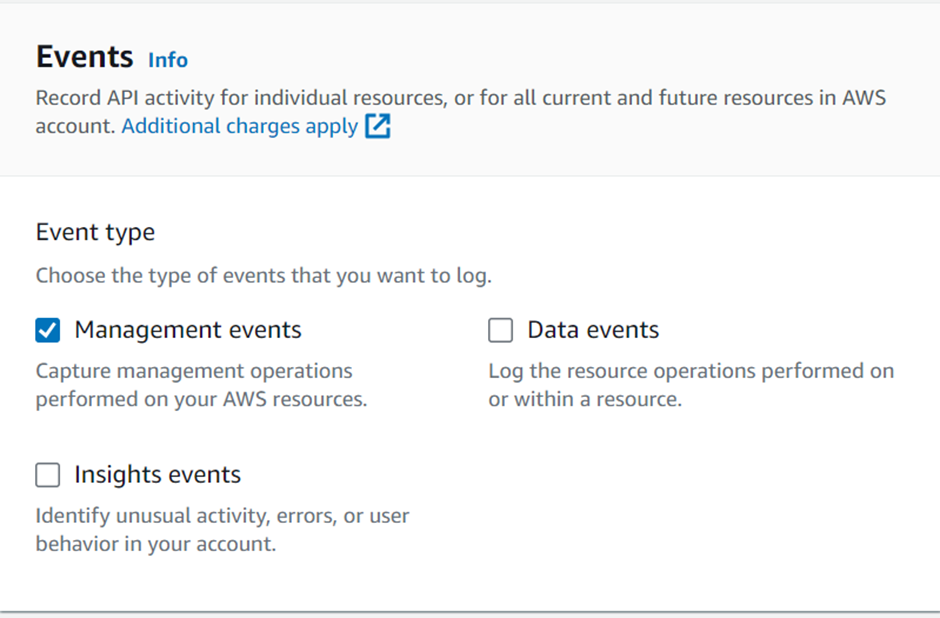

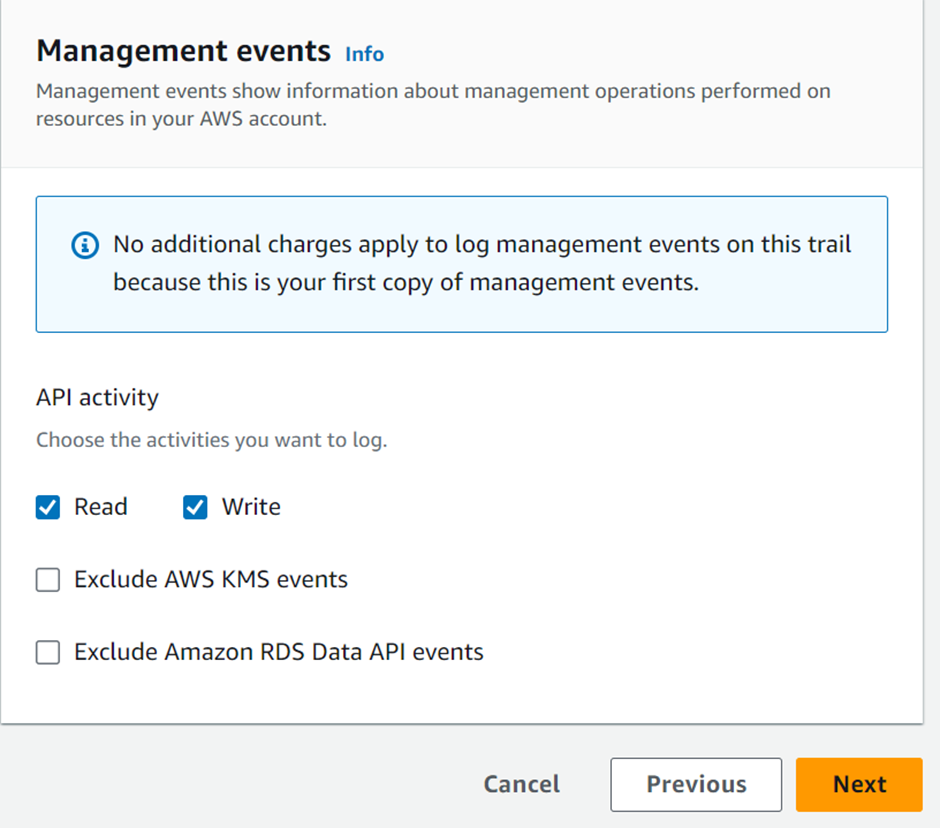

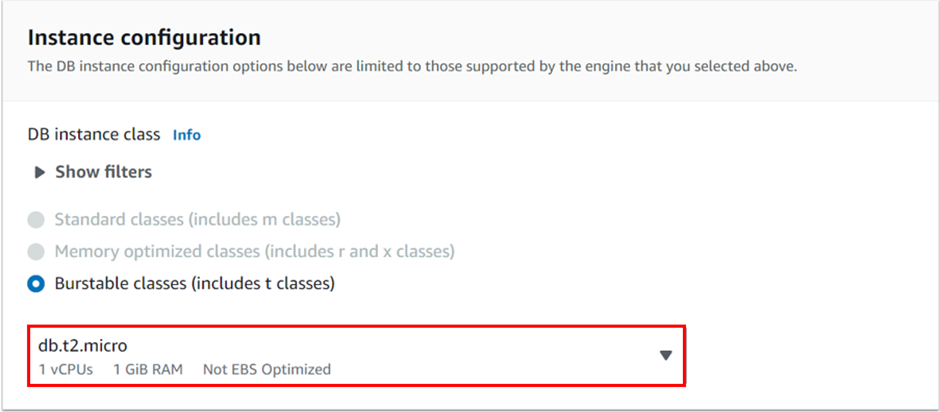

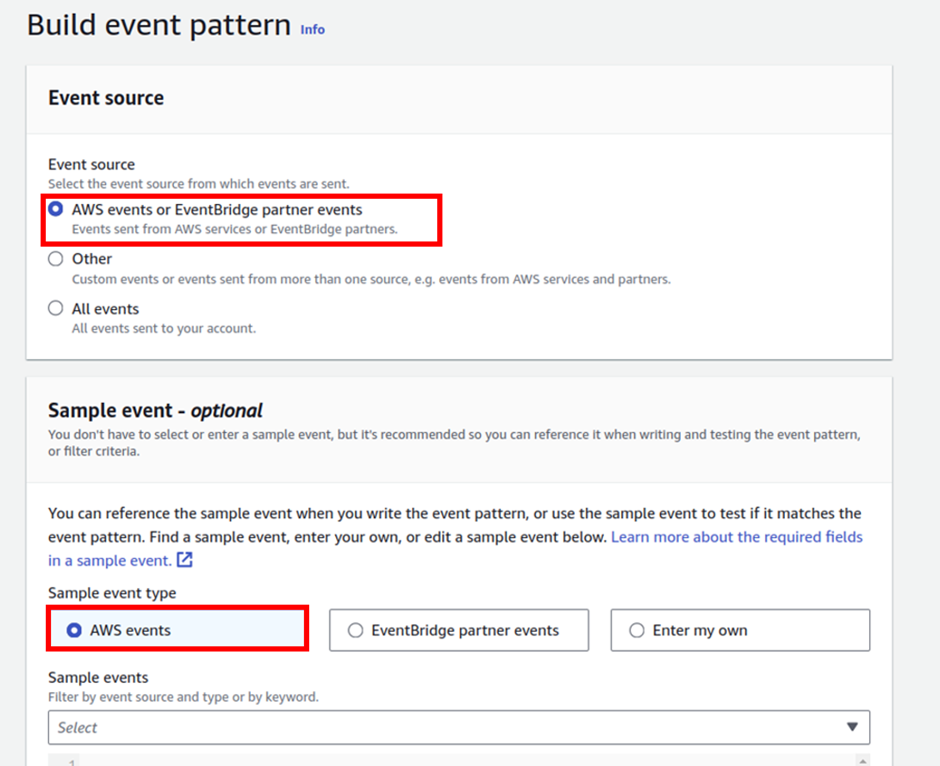

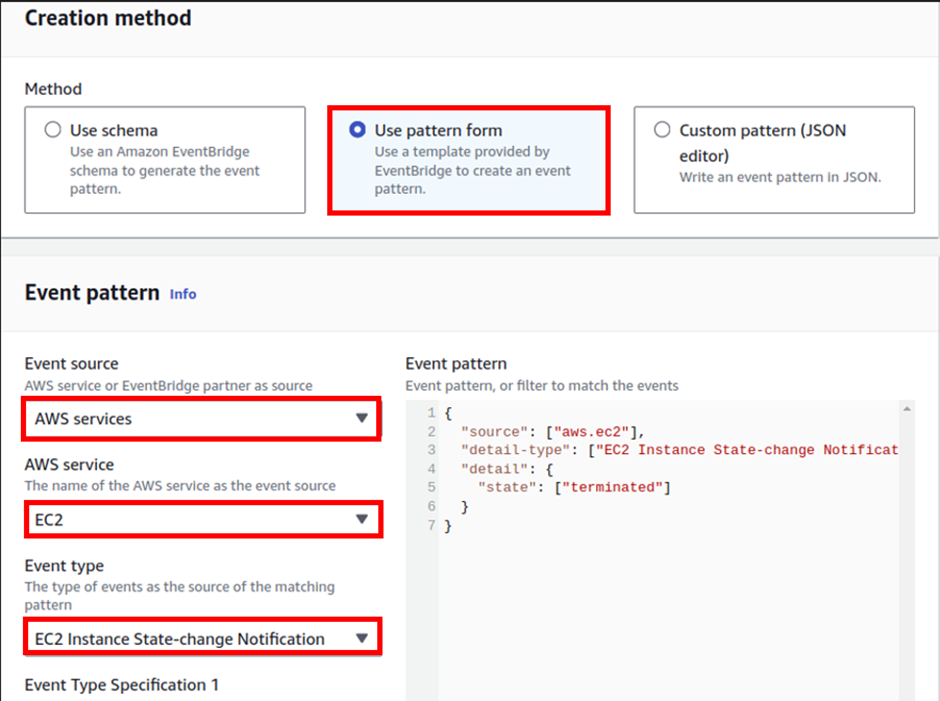

For the event source, select AWS events. Scroll down, and under Creation method select Use pattern form.

Under Event pattern, open the Event source dropdown and select AWS services. Then, for AWS service, select EC2.

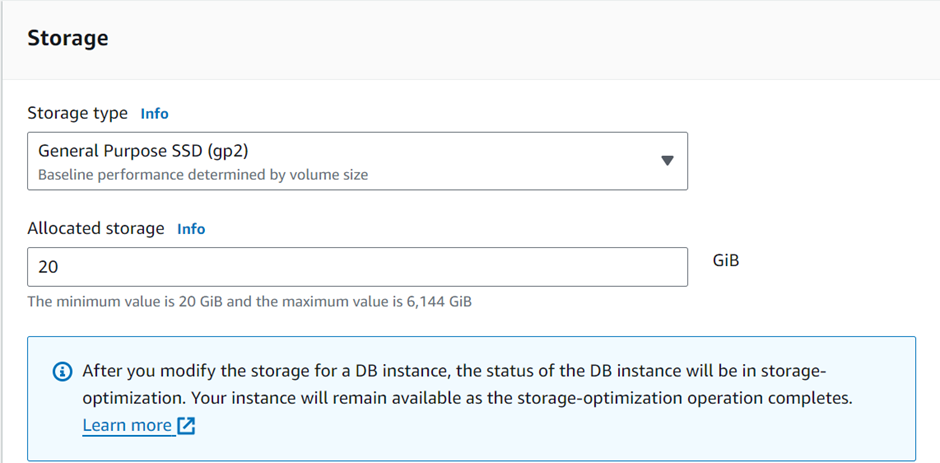

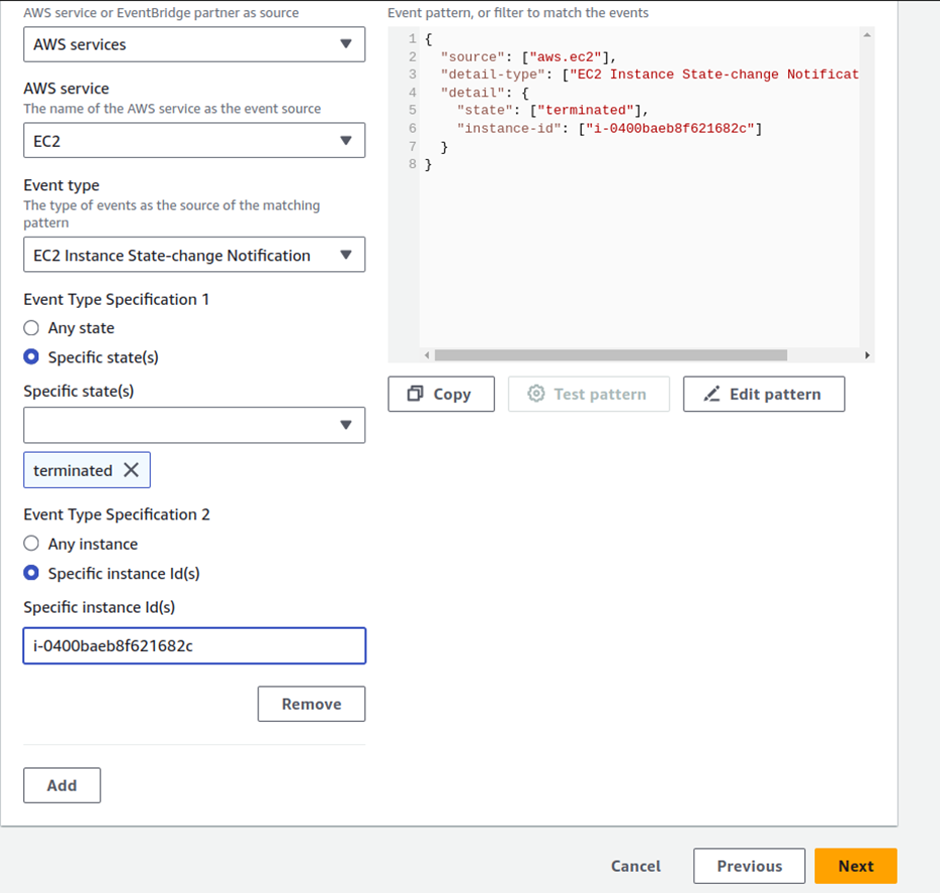

For Event type, select EC2 Instance State-change Notification. For event type specification 1, select a specific state, then open the dropdown and select terminated.

For event type specification 2, select specific instance ID. Paste the instance ID you copied earlier, then click Next.
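The form choices above boil down to a JSON event pattern. As a hedged sketch, the same rule could be created from code with boto3's `put_rule` call. The helper names (`build_event_pattern`, `create_rule`), the rule name, and the instance ID are assumptions for illustration, and the client is passed in so you can supply your own `boto3.client("events")`.

```python
import json


def build_event_pattern(instance_id: str) -> dict:
    """Pattern for 'this instance entered the terminated state'."""
    return {
        "source": ["aws.ec2"],
        "detail-type": ["EC2 Instance State-change Notification"],
        "detail": {"state": ["terminated"], "instance-id": [instance_id]},
    }


def create_rule(events_client, rule_name: str, instance_id: str) -> str:
    """Create (or update) an enabled rule on the default bus; return its ARN."""
    response = events_client.put_rule(
        Name=rule_name,
        EventBusName="default",
        EventPattern=json.dumps(build_event_pattern(instance_id)),
        State="ENABLED",
    )
    return response["RuleArn"]


# Usage (needs boto3 and AWS credentials; the names are placeholders):
#   import boto3
#   arn = create_rule(boto3.client("events"), "EC2-state-change",
#                     "i-0123456789abcdef0")

# Printing the pattern needs no AWS access and shows the JSON being sent.
print(json.dumps(build_event_pattern("i-0123456789abcdef0"), indent=2))
```

Note that the pattern is passed as a JSON string, not as a Python dict.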

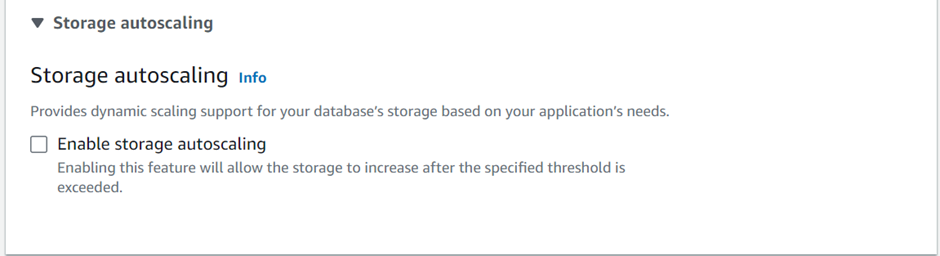

Next, scroll down and specify a target. Choose SNS topic as the target type. For the topic, go ahead and create your own SNS topic or pick an existing one. I already have an SNS topic called Email notification.
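If you script this step instead of using the console, a minimal sketch with boto3's `put_targets` might look like the following. The helper names, the target ID, and the ARN in the usage comment are placeholders. One thing to keep in mind: the SNS topic needs a resource policy that lets `events.amazonaws.com` publish to it. The console normally adds this for you, so the statement below only shows the shape of what you would add yourself.

```python
def build_sns_target(topic_arn: str, target_id: str = "email-notification") -> dict:
    """Target entry for put_targets; the Id is any name unique within the rule."""
    return {"Id": target_id, "Arn": topic_arn}


def build_publish_statement(topic_arn: str) -> dict:
    """Policy statement allowing EventBridge to publish to the topic."""
    return {
        "Sid": "AllowEventBridgePublish",
        "Effect": "Allow",
        "Principal": {"Service": "events.amazonaws.com"},
        "Action": "sns:Publish",
        "Resource": topic_arn,
    }


def attach_sns_target(events_client, rule_name: str, topic_arn: str) -> int:
    """Attach the topic to the rule; return the number of failed entries."""
    response = events_client.put_targets(
        Rule=rule_name,
        EventBusName="default",
        Targets=[build_sns_target(topic_arn)],
    )
    return response.get("FailedEntryCount", 0)


# Usage (needs boto3 and AWS credentials; the ARN is a placeholder):
#   import boto3
#   failed = attach_sns_target(
#       boto3.client("events"), "EC2-state-change",
#       "arn:aws:sns:us-east-1:123456789012:my-topic")
```

A return value of 0 means the target was attached without errors.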

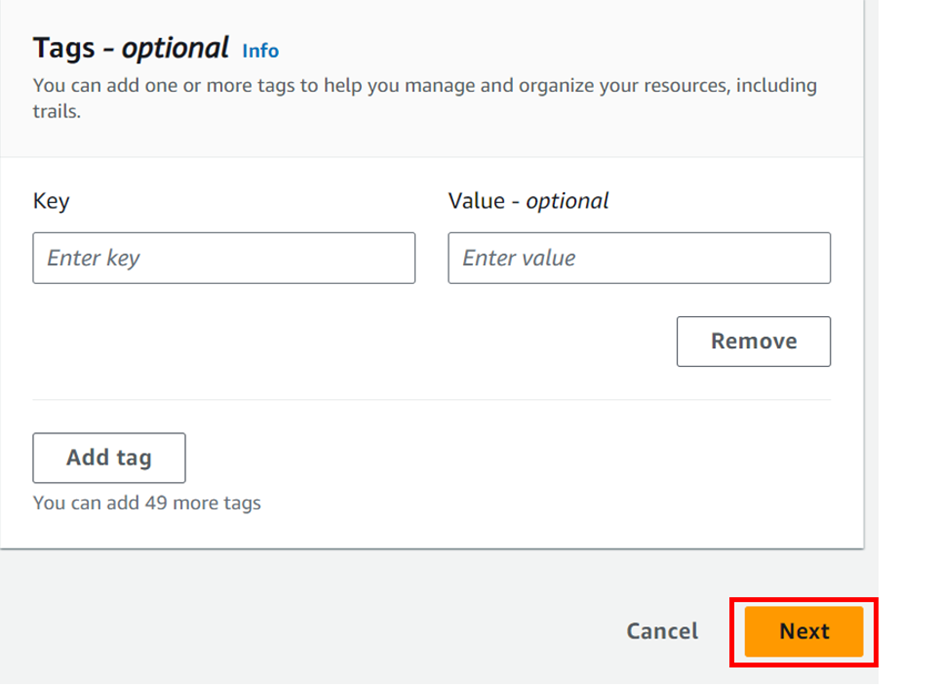

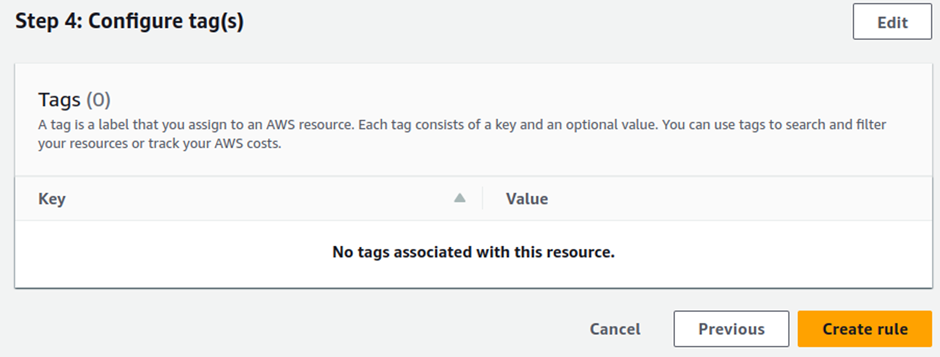

These are the only settings we need. Click Create rule.

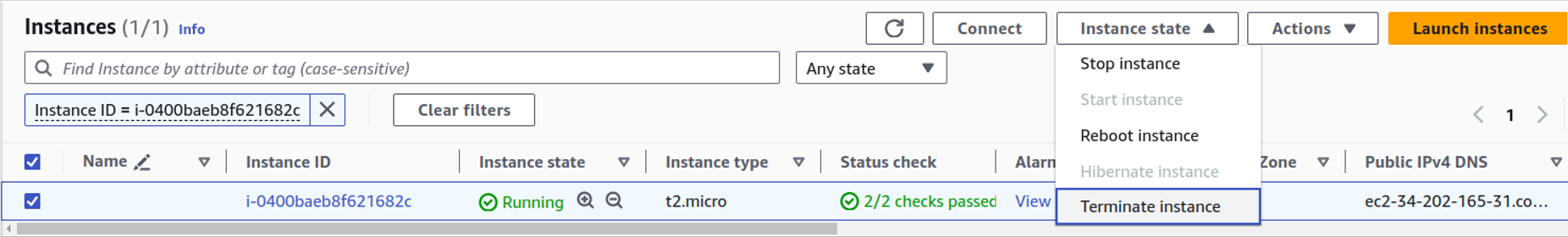

Now that the rule is enabled, let’s go and terminate our EC2 instance and see what happens.

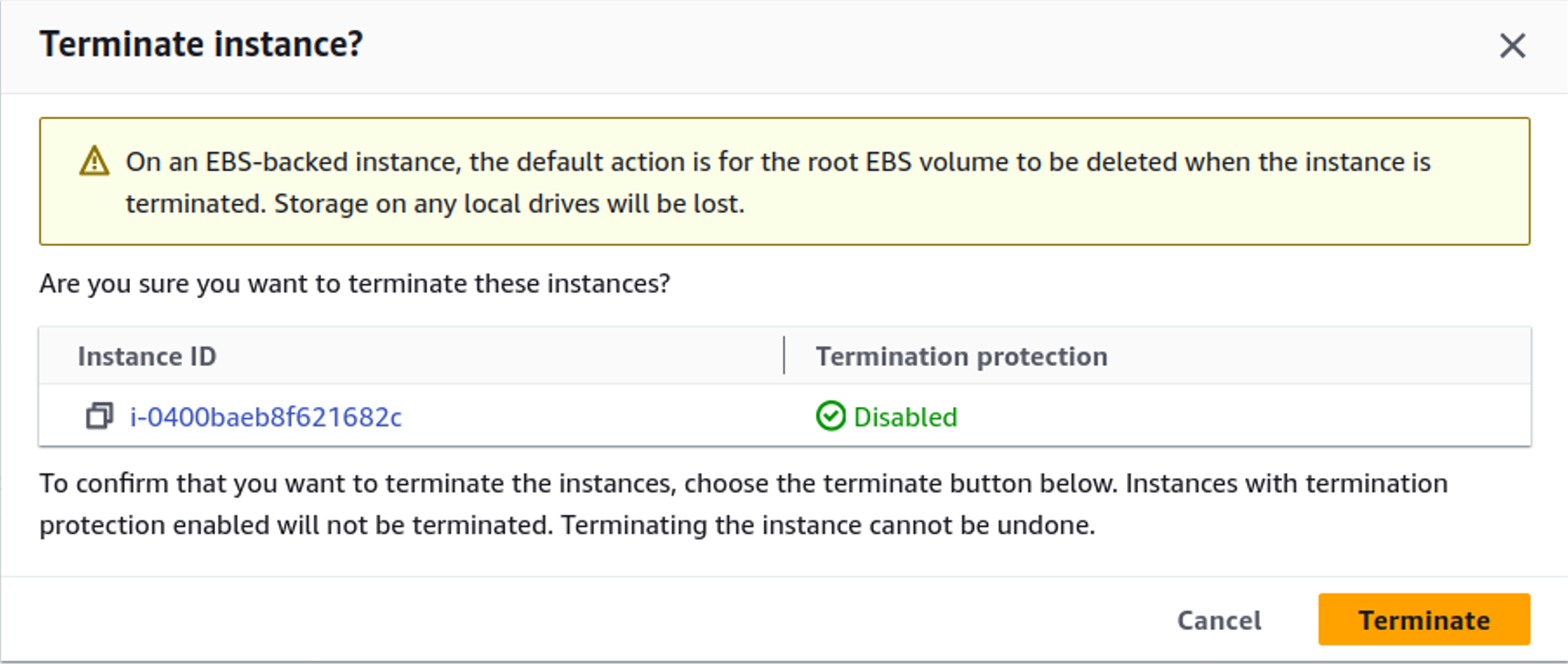

So back in EC2, select your instance, click Instance state, and terminate it.
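The same termination can be triggered from code. Here is a minimal sketch using boto3's `terminate_instances`; the `terminate_instance` helper and the instance ID in the usage comment are placeholders, and the client is passed in so you can supply your own `boto3.client("ec2")`.

```python
def terminate_instance(ec2_client, instance_id: str) -> str:
    """Terminate one instance and return the state EC2 reports for it."""
    response = ec2_client.terminate_instances(InstanceIds=[instance_id])
    # EC2 returns one entry per instance with its previous and current state.
    return response["TerminatingInstances"][0]["CurrentState"]["Name"]


# Usage (needs boto3 and AWS credentials; this really terminates the instance):
#   import boto3
#   print(terminate_instance(boto3.client("ec2"), "i-0123456789abcdef0"))
```

The instance usually passes through shutting-down before it reaches terminated, and the rule in this demo only fires on terminated, so the email can take a little while to arrive.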

Go to your email and confirm that you’ve received a notification. Here is the notification I received.

This brings us to the end of this demo. Stay tuned for more.

Make sure to clean up your resources to avoid surprise bills.
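For the cleanup, a hedged sketch with boto3 could look like this. A rule's targets have to be removed before the rule itself can be deleted, so the order matters. The `cleanup` helper and all names and ARNs are placeholders, and the clients are passed in so you can supply `boto3.client("events")` and `boto3.client("sns")`.

```python
def cleanup(events_client, sns_client, rule_name: str,
            target_id: str, topic_arn: str) -> None:
    """Remove the rule's target, then the rule, then the SNS topic."""
    # 1. Detach the target; a rule with targets cannot be deleted.
    events_client.remove_targets(
        Rule=rule_name, EventBusName="default", Ids=[target_id]
    )
    # 2. Delete the rule itself.
    events_client.delete_rule(Name=rule_name, EventBusName="default")
    # 3. Delete the topic (skip this if you still use it elsewhere).
    sns_client.delete_topic(TopicArn=topic_arn)


# Usage (needs boto3 and AWS credentials; the values are placeholders):
#   import boto3
#   cleanup(boto3.client("events"), boto3.client("sns"),
#           "EC2-state-change", "email-notification",
#           "arn:aws:sns:us-east-1:123456789012:my-topic")
```

The EC2 instance from the demo is already terminated, so the rule and the topic are the main things left to remove.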

If you have any questions concerning this article or have an AWS project that requires our assistance, please reach out to us by leaving a comment below or email us at [email protected].

Thank you