Enhancing VPC Security: An Overview of AWS Network Firewall

As cloud computing keeps changing, protecting network infrastructure is key. Amazon Web Services (AWS) provides strong security tools, including AWS Network Firewall. This article looks at leveraging AWS Network Firewall to protect resources in a Virtual Private Cloud (VPC).

What is Network Firewall?

AWS Network Firewall is a stateful, managed network firewall and intrusion detection and prevention service for the virtual private clouds (VPCs) that you create in Amazon Virtual Private Cloud (Amazon VPC).

Network Firewall lets you filter traffic at the edge of your VPC. This covers filtering traffic going to and coming from an internet gateway, NAT gateway, or through VPN or AWS Direct Connect.

Understanding AWS Network Firewall

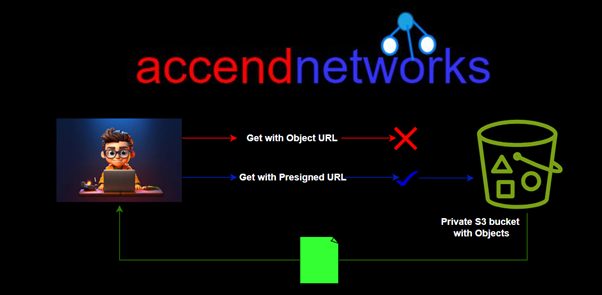

To understand the concept of a Network Firewall, let’s first explore some of the security features available for the VPC.

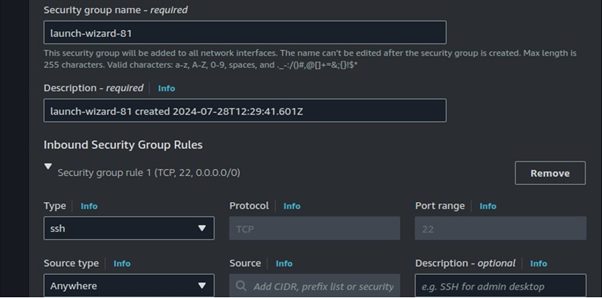

From the above architecture, we can observe that at the instance level, security groups are used to provide security for your instances and manage all incoming and outgoing traffic. At the subnet level, Network Access Control Lists (NACLs) are utilized to evaluate traffic entering and exiting the subnet.

Any traffic from the internet gateway to your instance will be evaluated by both NACLs and security group rules. Additionally, AWS Shield and AWS WAF are available for protecting web applications running within your VPC.

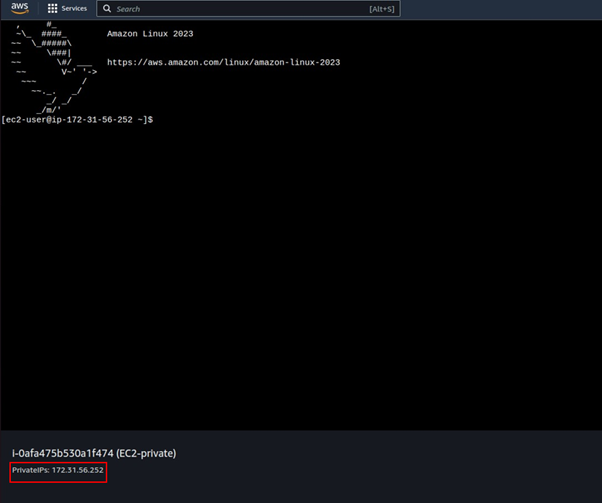

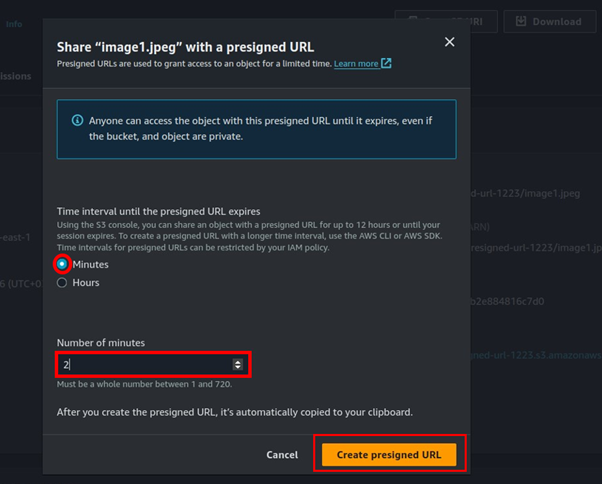

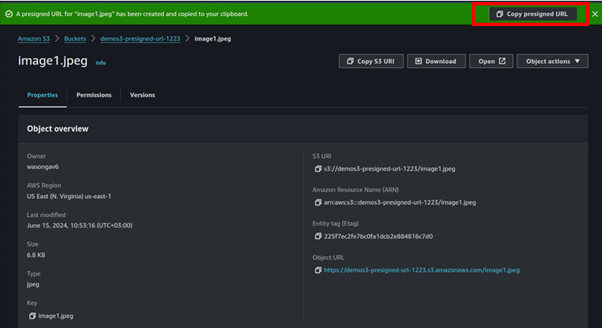

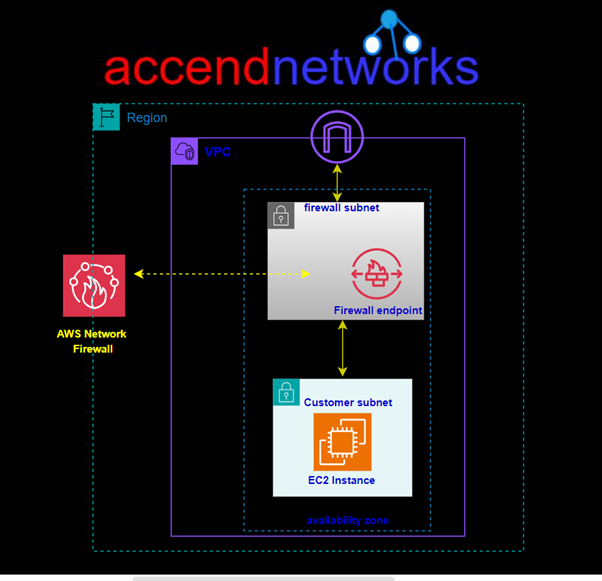

Single zone architecture with internet gateway and network firewall

You set aside a dedicated subnet in your VPC’s Availability Zone for AWS Network Firewall, and the service places a firewall endpoint in it. All traffic from your subnet heading to the internet is routed through this firewall subnet for inspection before proceeding to its destination, and incoming traffic is inspected the same way before it reaches your subnet. This puts inspection on the path of every packet entering or leaving the subnet.

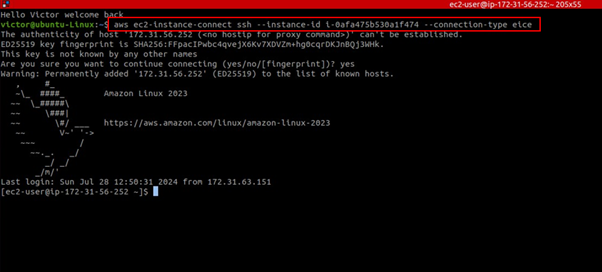

Two zone architecture with network firewall and internet gateway

In this setup, you place a firewall subnet in each Availability Zone, and the firewall endpoint in each zone inspects traffic going to and from the customer subnet in that zone. To make sure all traffic goes through these firewall subnets, you’ll need to update your route tables.
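To make the route table changes concrete, here is a minimal sketch in plain Python that plans the routes for a two-zone layout. It does not call AWS. The `Zone` class, the `plan_routes` helper, the table names, the CIDRs, and the `vpce-`/`igw-` IDs are all hypothetical placeholders, and the layout assumes the common pattern of one protected subnet and one firewall subnet per zone.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Zone:
    name: str               # Availability Zone label (placeholder)
    protected_cidr: str     # CIDR of the customer subnet in this zone
    firewall_endpoint: str  # placeholder ID of the firewall endpoint in this zone


def plan_routes(zones, internet_gateway):
    """Return {route_table_name: [(destination, target), ...]} for the layout."""
    tables = {"igw-ingress": []}
    for zone in zones:
        # Outbound: the customer subnet sends internet-bound traffic
        # to the firewall endpoint in its own zone.
        tables[f"protected-{zone.name}"] = [("0.0.0.0/0", zone.firewall_endpoint)]
        # After inspection, the firewall subnet forwards to the internet gateway.
        tables[f"firewall-{zone.name}"] = [("0.0.0.0/0", internet_gateway)]
        # Inbound: traffic arriving at the internet gateway for the customer
        # subnet is steered to the same zone's firewall endpoint first.
        tables["igw-ingress"].append((zone.protected_cidr, zone.firewall_endpoint))
    return tables


zones = [
    Zone("az-a", "10.0.1.0/24", "vpce-aaaa"),
    Zone("az-b", "10.0.2.0/24", "vpce-bbbb"),
]
routes = plan_routes(zones, "igw-1234")
for table, entries in routes.items():
    for destination, target in entries:
        print(f"{table}: {destination} -> {target}")
```

Each zone keeps its traffic on its own endpoint, which is what keeps inspection local to the zone in this sketch.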

How Network Firewall Works

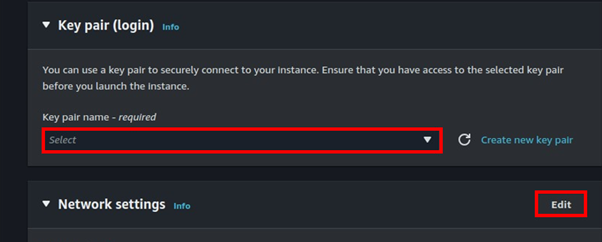

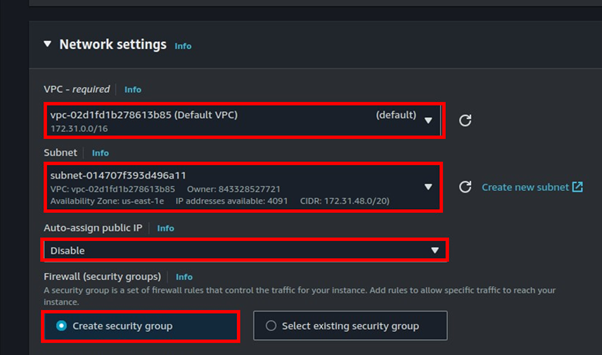

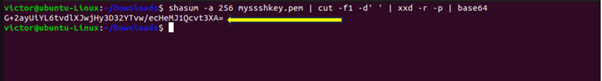

To apply the traffic-filtering logic provided by Network Firewall, traffic must be routed symmetrically through the Network Firewall endpoint, so that both directions of a flow pass through the same endpoint. The endpoint is deployed into a dedicated subnet of the VPC (the firewall subnet). Depending on the use case and deployment model, the firewall subnet can be either public or private. For high availability and multi-AZ deployments, allocate one firewall subnet per AZ.

Once Network Firewall is deployed, a firewall endpoint is available in each firewall subnet. The firewall endpoint is similar to an interface endpoint, and it shows up as a vpce-id target in the VPC route table.
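Since symmetric routing comes down to both directions of a flow pointing at the same vpce-id, a small check can catch mistakes before they reach a route table. The sketch below is plain Python over hand-written dictionaries. The `asymmetric_subnets` function and the sample IDs are hypothetical, and it assumes you have already collected the outbound default-route target and the inbound ingress-route target for each protected subnet.

```python
def asymmetric_subnets(outbound, inbound):
    """Return the subnet CIDRs whose two directions do not share one firewall endpoint.

    outbound: {subnet_cidr: target of the subnet's 0.0.0.0/0 route}
    inbound:  {subnet_cidr: target of the ingress route for that CIDR}
    """
    problems = []
    for cidr, out_target in outbound.items():
        in_target = inbound.get(cidr)
        # Both directions must name the same endpoint, and it must be a vpce target.
        same_endpoint = out_target == in_target and out_target.startswith("vpce-")
        if not same_endpoint:
            problems.append(cidr)
    return sorted(problems)


outbound = {"10.0.1.0/24": "vpce-aaaa", "10.0.2.0/24": "vpce-bbbb"}
inbound = {"10.0.1.0/24": "vpce-aaaa", "10.0.2.0/24": "vpce-aaaa"}

# The second subnet leaves through one endpoint and returns through another.
print(asymmetric_subnets(outbound, inbound))
```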

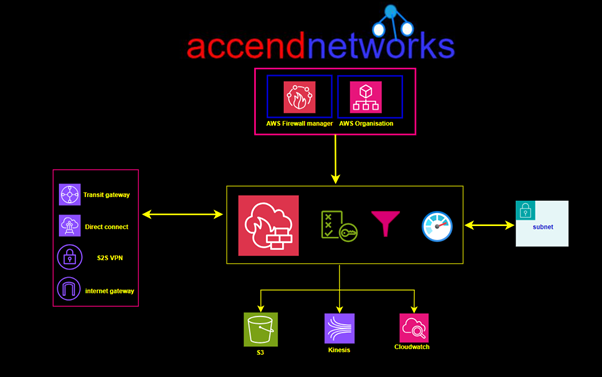

Network Firewall makes firewall activity visible in real time through Amazon CloudWatch metrics and offers increased visibility of network traffic by sending logs to Amazon S3, Amazon CloudWatch Logs, and Amazon Kinesis Data Firehose.
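As an illustration of the three log destinations, the sketch below builds a logging configuration as a plain Python dictionary. The key names follow my understanding of the shape accepted by the Network Firewall `UpdateLoggingConfiguration` API, so check them against the API reference before use. The `log_destination` helper, the log group name, and the bucket name are hypothetical, and nothing here calls AWS.

```python
import json

# Key that names the target resource for each destination type.
DESTINATION_KEYS = {
    "S3": "bucketName",
    "CloudWatchLogs": "logGroup",
    "KinesisDataFirehose": "deliveryStream",
}


def log_destination(log_type, destination_type, name):
    """Build one log destination entry; raises KeyError for an unknown destination type."""
    key = DESTINATION_KEYS[destination_type]
    return {
        "LogType": log_type,  # for example "ALERT" or "FLOW"
        "LogDestinationType": destination_type,
        "LogDestination": {key: name},
    }


logging_configuration = {
    "LogDestinationConfigs": [
        log_destination("ALERT", "CloudWatchLogs", "/example/network-firewall/alert"),
        log_destination("FLOW", "S3", "example-firewall-flow-logs"),
    ]
}
print(json.dumps(logging_configuration, indent=2))
```

Splitting alert and flow logs across destinations, as done here, is just one possible arrangement.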

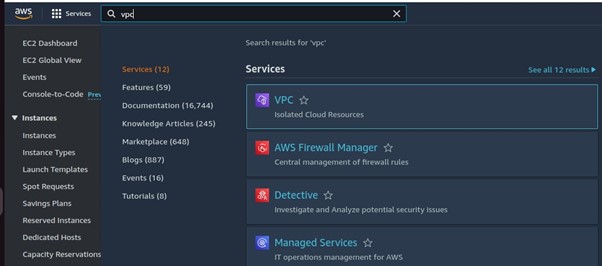

Network Firewall is integrated with AWS Firewall Manager, giving customers who use AWS Organizations a single place to enable and monitor firewall activity across all VPCs and AWS accounts.

Network Firewall Components:

Firewall: A firewall connects the VPC that you want to protect to the protection behaviour that’s defined in a firewall policy.

Firewall policy: Defines the behaviour of the firewall in a collection of stateless and stateful rule groups and other settings. You can associate each firewall with only one firewall policy, but you can use a firewall policy for more than one firewall.

Rule group: A rule group is a collection of stateless or stateful rules that define how to inspect and handle network traffic.
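The relationship between these three components can be sketched as data. The dictionary below mirrors, as far as I know, the `FirewallPolicy` structure used by the Network Firewall API, but treat the field names as something to verify against the API reference. The `build_firewall_policy` helper, the ARNs, the account ID, and the firewall and policy names are hypothetical placeholders.

```python
def build_firewall_policy(stateless_group_arns, stateful_group_arns):
    """Assemble a policy that references existing rule groups by ARN."""
    return {
        # Stateless rule groups are evaluated in priority order, lowest first.
        "StatelessRuleGroupReferences": [
            {"ResourceArn": arn, "Priority": priority}
            for priority, arn in enumerate(stateless_group_arns, start=1)
        ],
        # Packets that match no stateless rule are handed to the stateful engine.
        "StatelessDefaultActions": ["aws:forward_to_sfe"],
        "StatelessFragmentDefaultActions": ["aws:forward_to_sfe"],
        "StatefulRuleGroupReferences": [
            {"ResourceArn": arn} for arn in stateful_group_arns
        ],
    }


policy = build_firewall_policy(
    ["arn:aws:network-firewall:us-east-1:111122223333:stateless-rulegroup/example-stateless"],
    ["arn:aws:network-firewall:us-east-1:111122223333:stateful-rulegroup/example-stateful"],
)

# Each firewall maps to exactly one policy, but one policy can serve many firewalls.
firewall_to_policy = {
    "firewall-prod": "example-policy",
    "firewall-dev": "example-policy",
}
print(policy["StatelessRuleGroupReferences"])
```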

Stateless Rules

Stateless rules inspect each packet in isolation. They do not consider factors such as the direction of traffic or whether the packet is part of an existing, approved connection.
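A toy model helps show what "in isolation" means. The Python sketch below is not the service's engine. It assumes rules are checked in priority order (lowest number first), that the first match decides the action, and that unmatched packets fall through to a default action. The `Packet` and `StatelessRule` classes, the `evaluate` function, and the sample rules are all made up for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Packet:
    protocol: str
    source: str
    destination: str
    destination_port: int


@dataclass(frozen=True)
class StatelessRule:
    priority: int
    protocol: str
    destination_port: Optional[int]  # None matches any port
    action: str


def evaluate(packet, rules, default_action="aws:forward_to_sfe"):
    """Return the action of the first matching rule, checked in priority order."""
    for rule in sorted(rules, key=lambda r: r.priority):
        port_matches = rule.destination_port in (None, packet.destination_port)
        if rule.protocol == packet.protocol and port_matches:
            return rule.action
    return default_action


rules = [
    StatelessRule(20, "TCP", 443, "aws:pass"),
    StatelessRule(10, "TCP", 23, "aws:drop"),
]

request = Packet("TCP", "10.0.1.5", "203.0.113.10", 443)
reply = Packet("TCP", "203.0.113.10", "10.0.1.5", 50000)

print(evaluate(request, rules))  # matched by the port 443 rule
print(evaluate(reply, rules))    # no rule knows this is a reply, so the default applies
```

The reply packet belongs to the same connection as the request, yet the stateless rules cannot tell, which is exactly the gap that stateful rules fill.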

Stateful Rules

Stateful rules inspect packets in the context of their traffic flow. The stateful engine monitors the state of connections on the network, so it can track and defend based on traffic patterns and flows rather than on individual packets.
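To illustrate the idea of tracking flows, here is a deliberately simplified connection tracker in Python. It is only a sketch of the general stateful technique, not how Network Firewall is implemented. The `FlowTracker` class, its port allow-list, and the addresses are hypothetical.

```python
class FlowTracker:
    """Allow outbound connections on chosen ports and only the replies to them."""

    def __init__(self, allowed_outbound_ports):
        self.allowed_outbound_ports = set(allowed_outbound_ports)
        self.flows = set()  # (src, src_port, dst, dst_port) of approved outbound flows

    def handle(self, src, src_port, dst, dst_port, outbound):
        if outbound:
            if dst_port in self.allowed_outbound_ports:
                # Remember the flow so its replies can be recognized later.
                self.flows.add((src, src_port, dst, dst_port))
                return "pass"
            return "drop"
        # Inbound traffic passes only if it reverses a tracked outbound flow.
        if (dst, dst_port, src, src_port) in self.flows:
            return "pass"
        return "drop"


tracker = FlowTracker({443})
print(tracker.handle("10.0.1.5", 50000, "203.0.113.10", 443, outbound=True))
print(tracker.handle("203.0.113.10", 443, "10.0.1.5", 50000, outbound=False))
print(tracker.handle("198.51.100.7", 443, "10.0.1.5", 50000, outbound=False))
```

The second packet is accepted because it answers a tracked flow, while the third comes from a host the instance never contacted and is dropped.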


Conclusion

Protecting resources inside a VPC plays a key role in today’s cloud setups. AWS Network Firewall gives you a full set of tools to guard your network against different kinds of threats. Setting up AWS Network Firewall, optionally managed centrally through AWS Firewall Manager, gives your VPC resources strong protection.

Thanks for reading and stay tuned for more.

If you have any questions concerning this article or have an AWS project that requires our assistance, please reach out to us by leaving a comment below or email us at [email protected].

Thank you!