AWS Recycle Bin: Your Key to Enhanced Data Protection and Recovery

Introduction

As more companies depend on cloud infrastructure to run their businesses, they need a strong strategy to protect and recover their data. Accidental deletions or unexpected failures can cause critical data loss, leading to downtime and financial losses. AWS, well known for its wide range of services, provides various tools to keep data safe and retrievable. Among these, the AWS Recycle Bin service stands out as a powerful feature for improving data recovery options. This blog explores the AWS Recycle Bin service, what it offers, and how to use it to protect your important resources.

Getting to Know AWS Recycle Bin

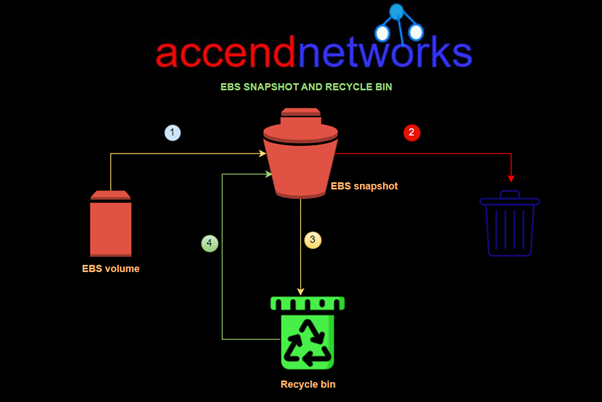

Recycle Bin is an AWS data recovery feature that acts as a safety net for deleted resources. It works with resources such as Amazon EBS snapshots and EBS-backed AMIs, and it is managed from its own console or through the AWS APIs.

With Recycle Bin in place, a deleted resource that matches a retention rule isn’t immediately gone forever. Instead, it is held in the Recycle Bin for a set retention period before being permanently deleted. This allows users to recover accidentally deleted resources without going through complicated backup and recovery processes.

You control this behavior with retention rules. A rule specifies the resource type it covers, whether it applies to all resources of that type in the Region or only to resources with specific tags, and how long deleted resources are retained before final deletion. This approach strengthens your data protection and management strategy within your AWS environment.

The AWS Recycle Bin is particularly useful when mistakes happen or automated systems accidentally delete resources. With a matching retention rule in place, a deleted resource can still be restored until its retention period ends, which helps prevent data loss and avoid service interruptions.

Benefits of AWS Recycle Bin

Enhanced Data Protection: It allows you to recover deleted resources within a specified retention period, reducing the risk of permanent data loss.

Compliance and Governance: It helps ensure that data is not permanently lost due to accidental deletions, which supports audit trails and data retention policies.

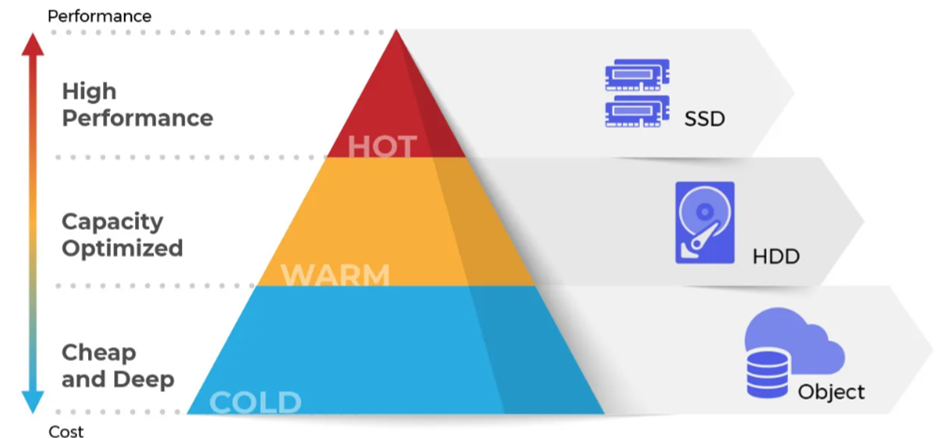

Cost Management: Resources held in the Recycle Bin continue to incur storage charges, so choosing appropriate retention periods lets you balance recoverability against storage costs.

Let’s now get to the hands-on.

Implementation Steps

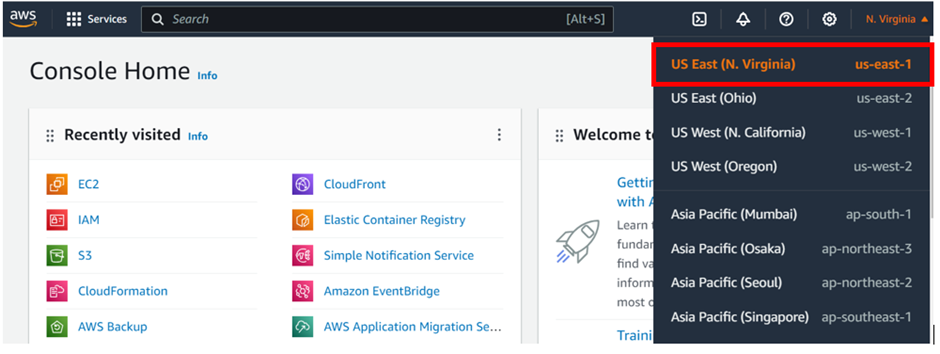

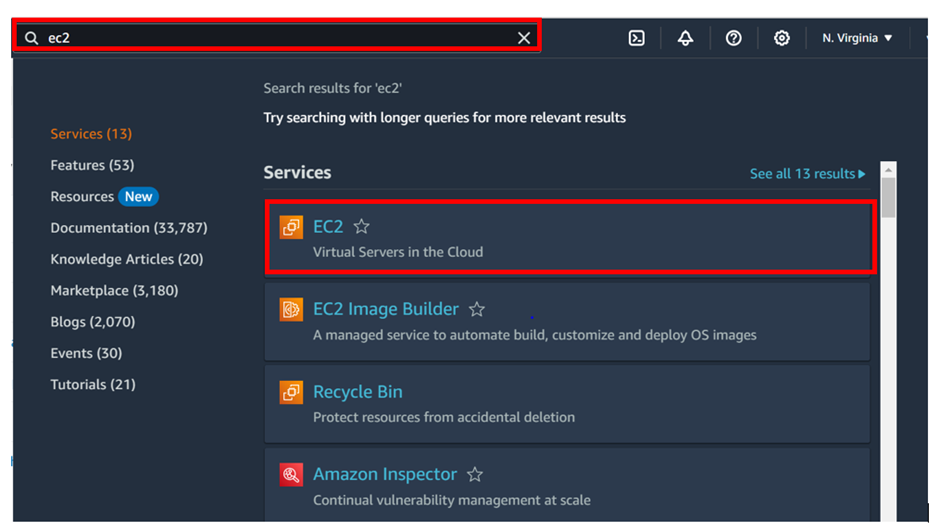

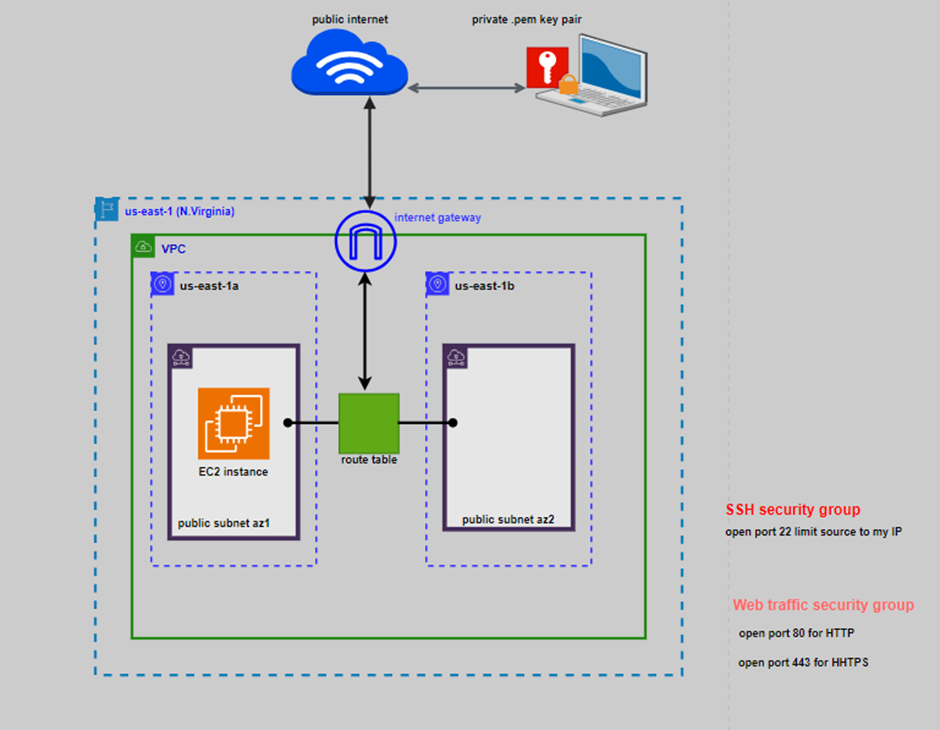

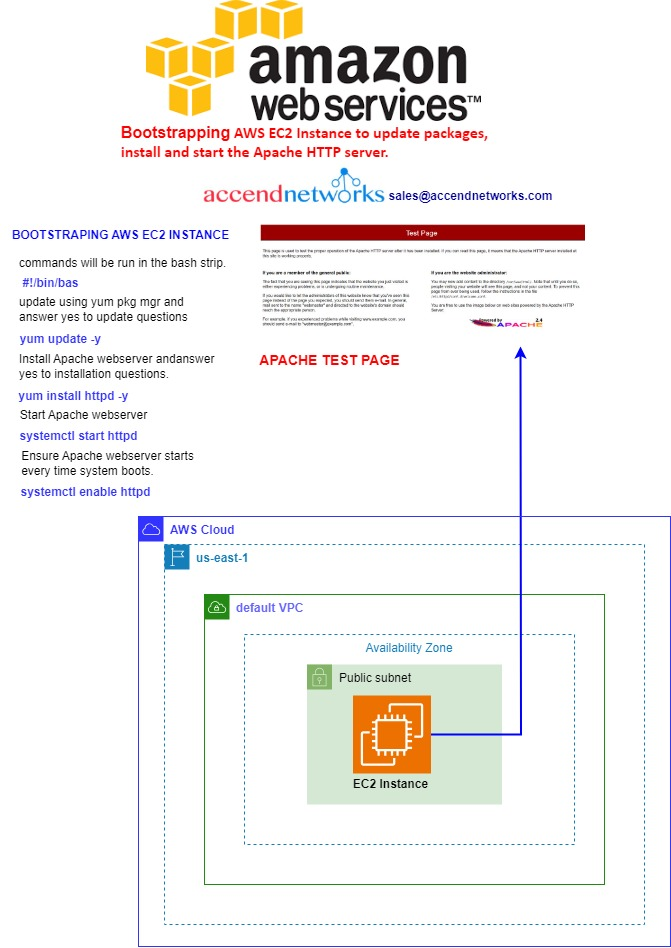

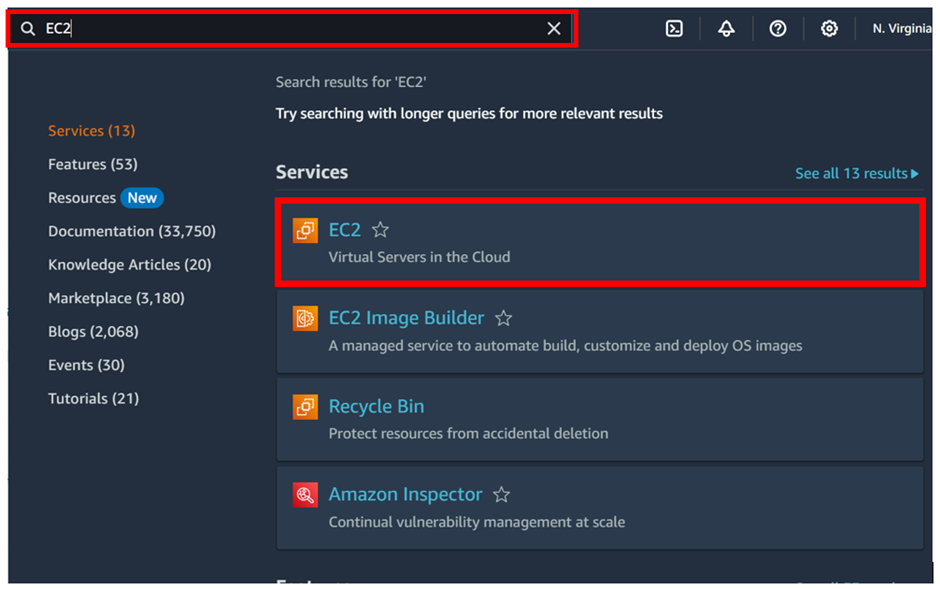

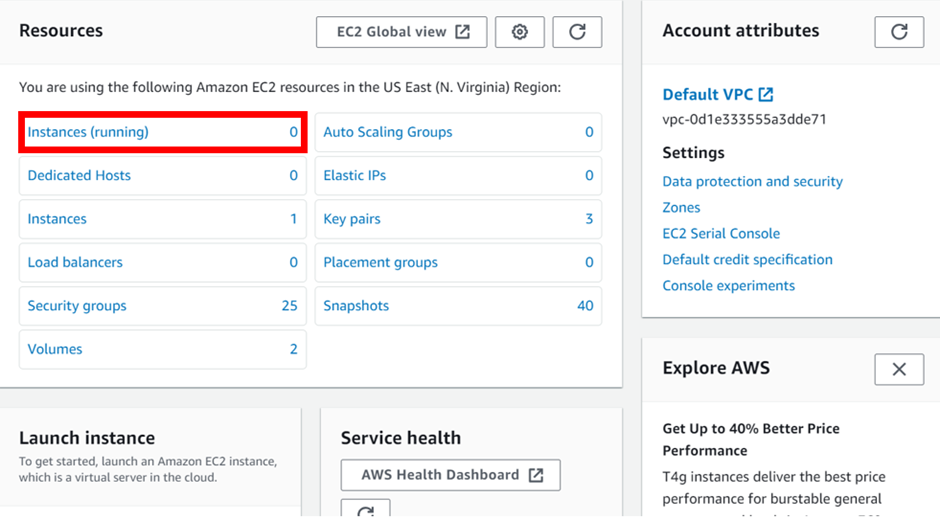

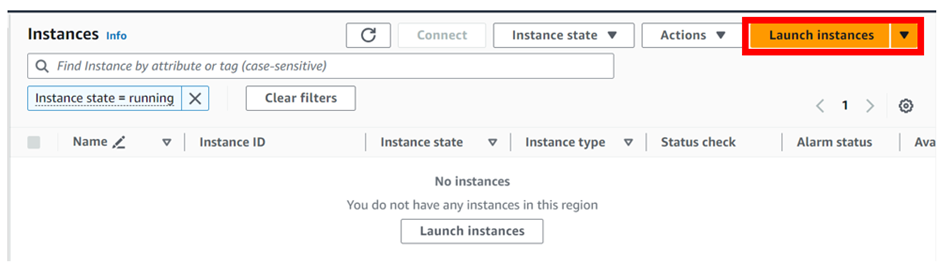

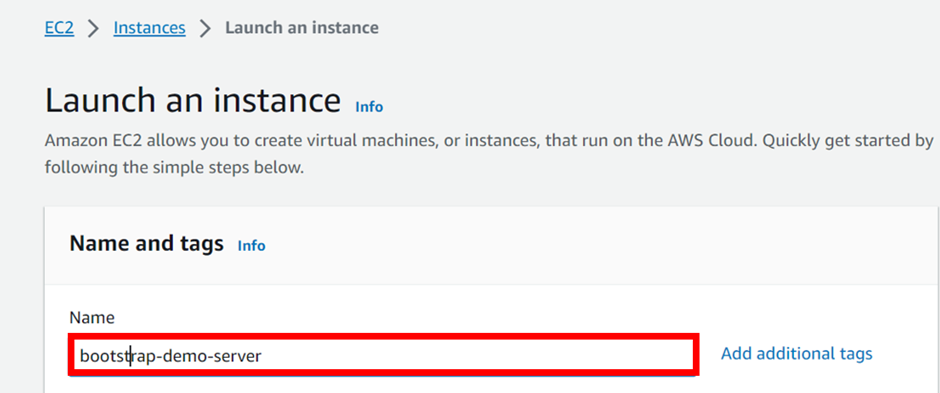

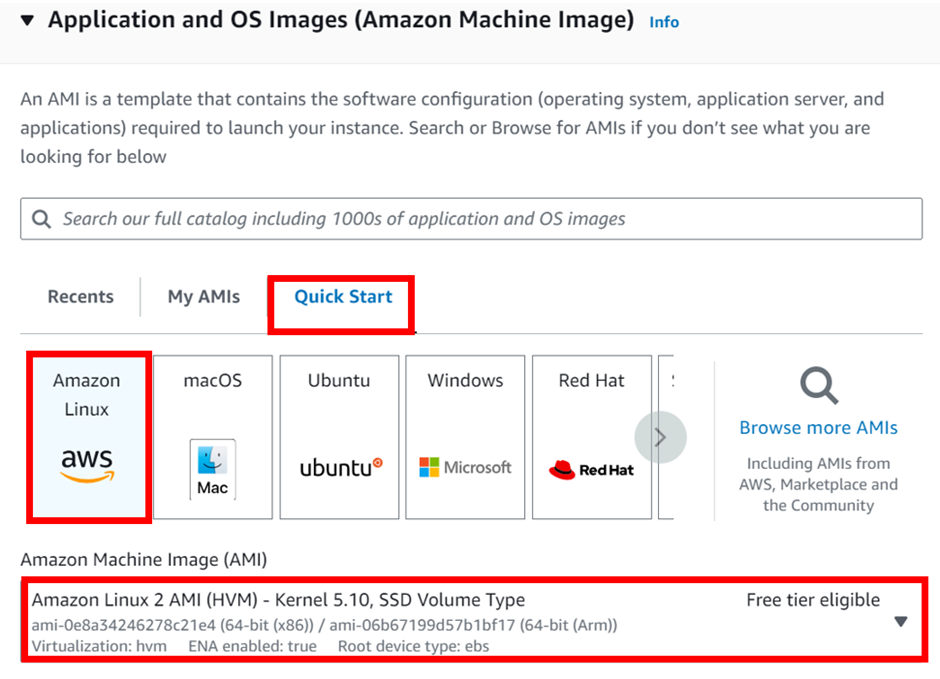

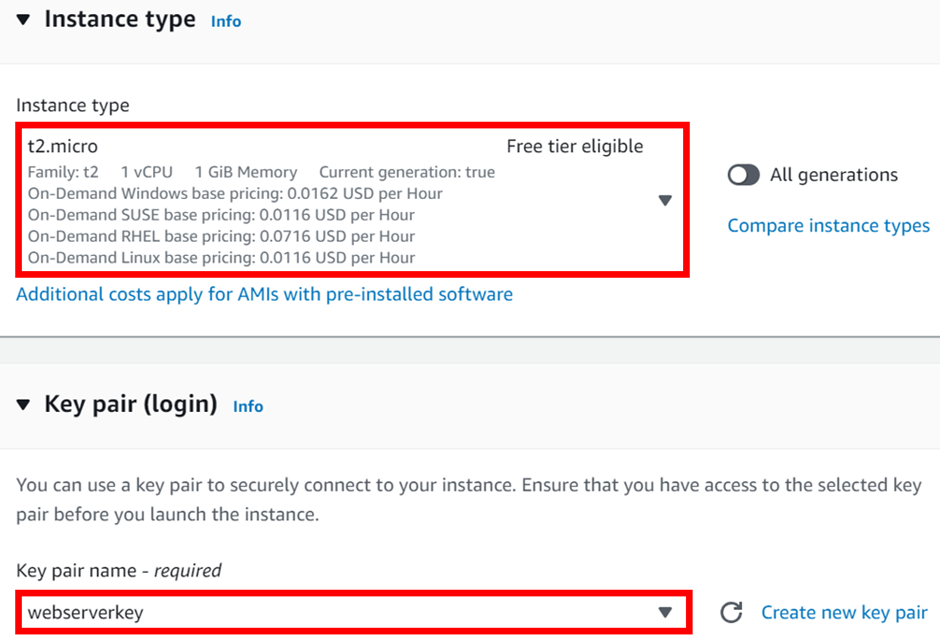

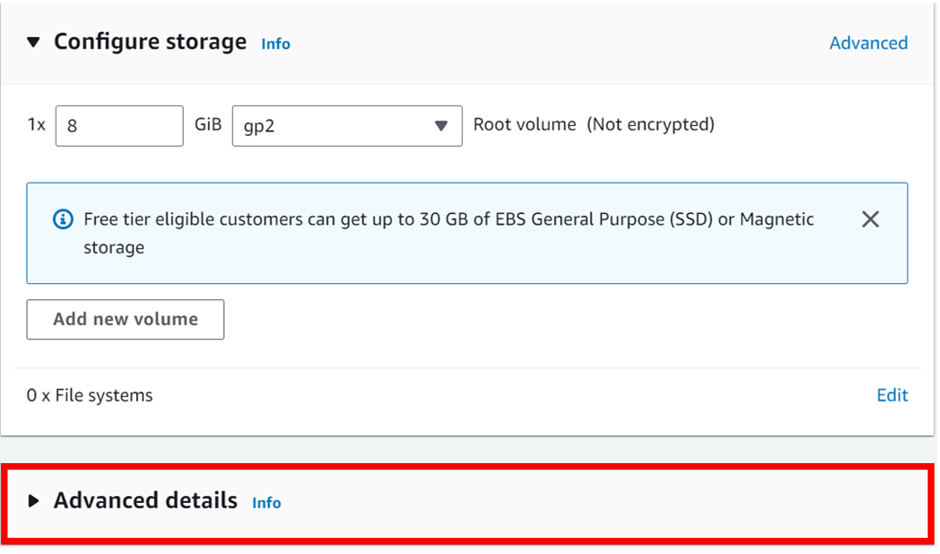

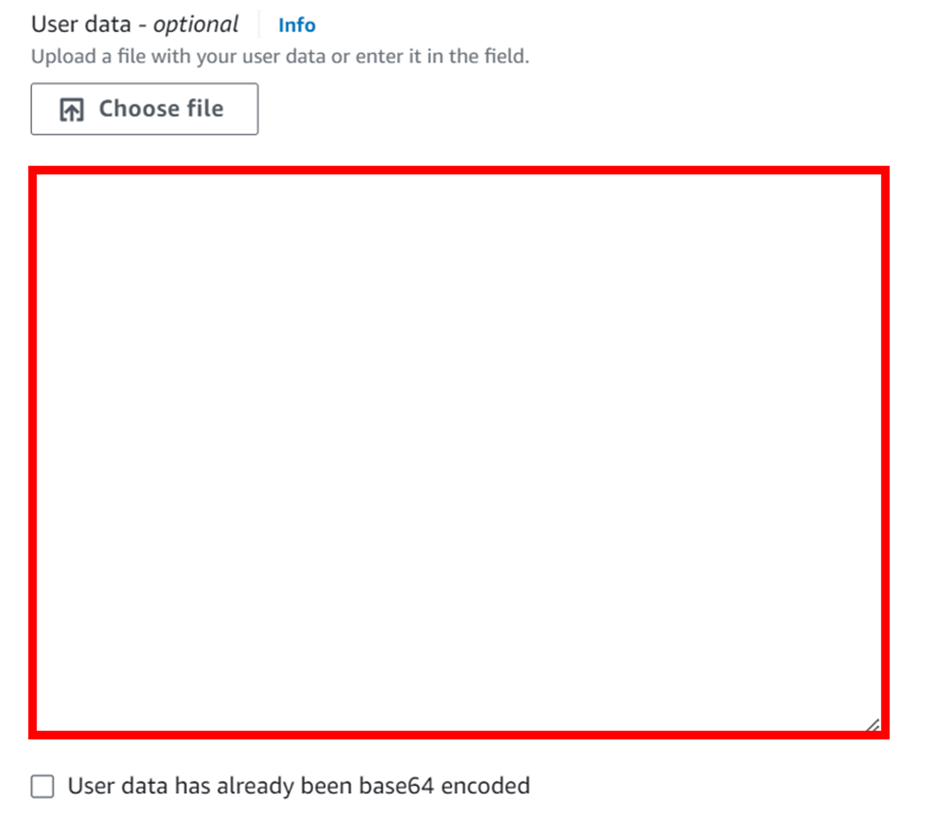

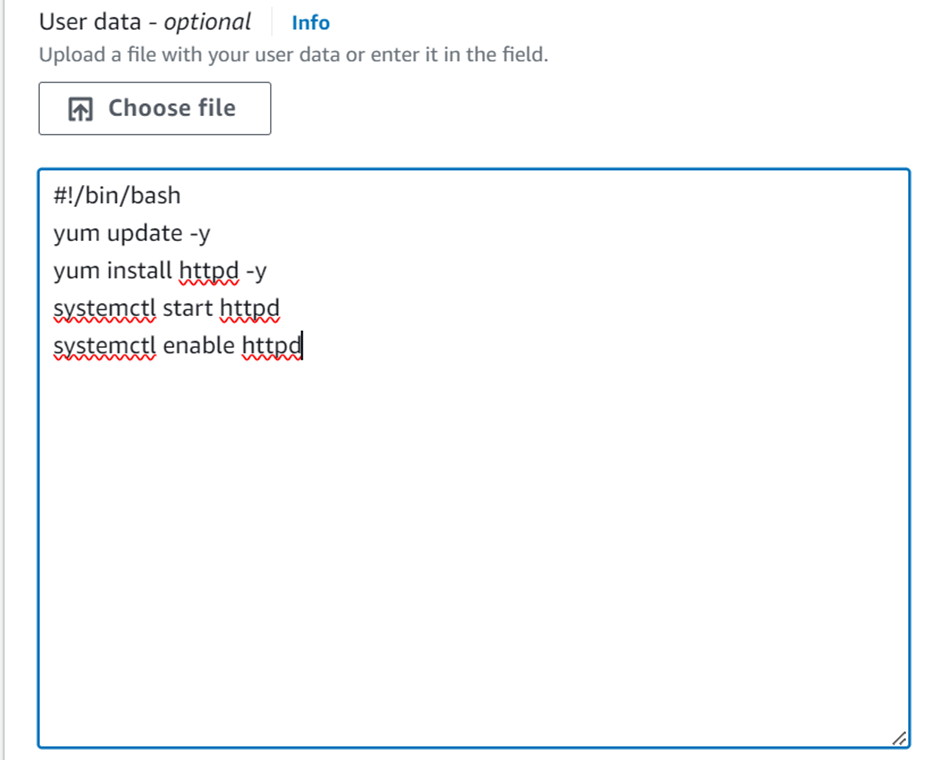

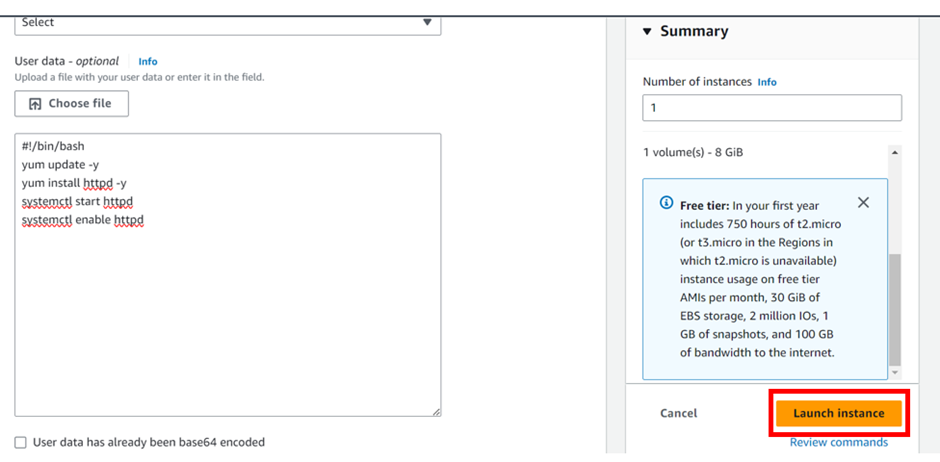

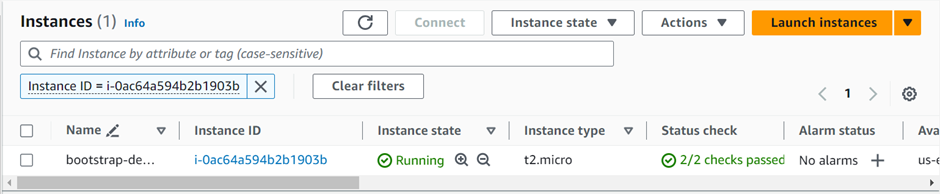

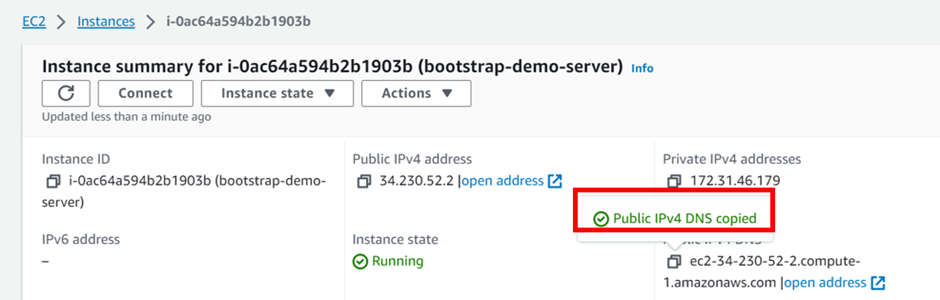

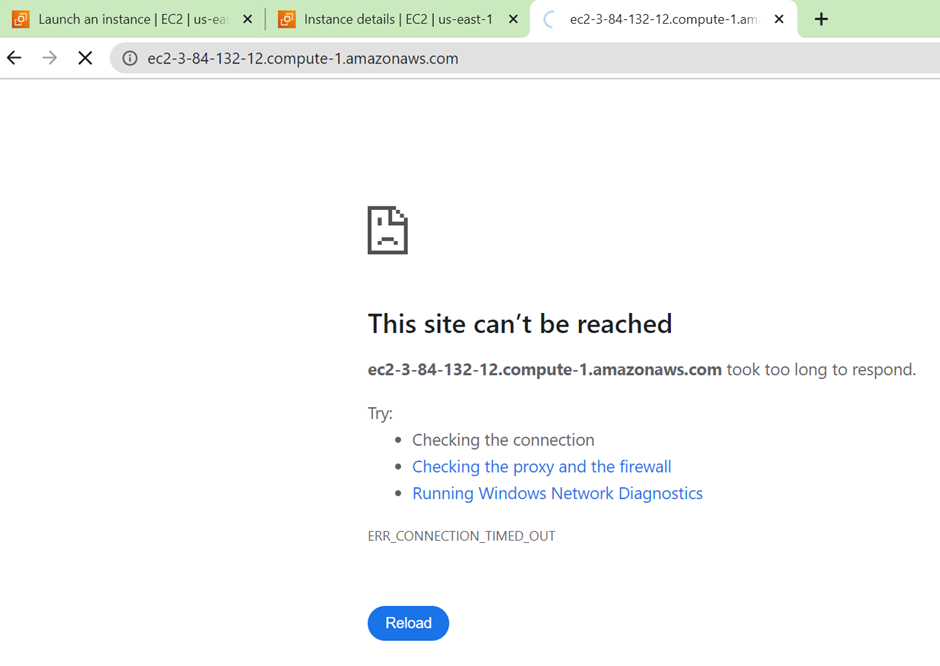

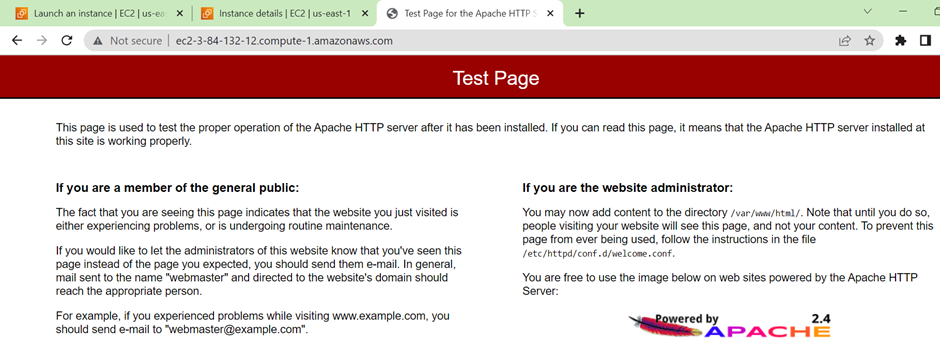

Make sure you have an EC2 instance up and running.

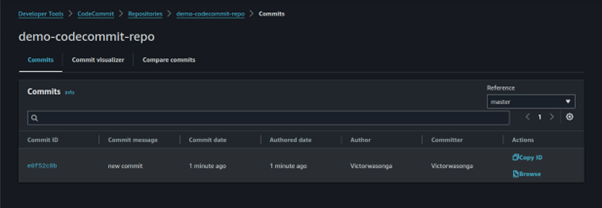

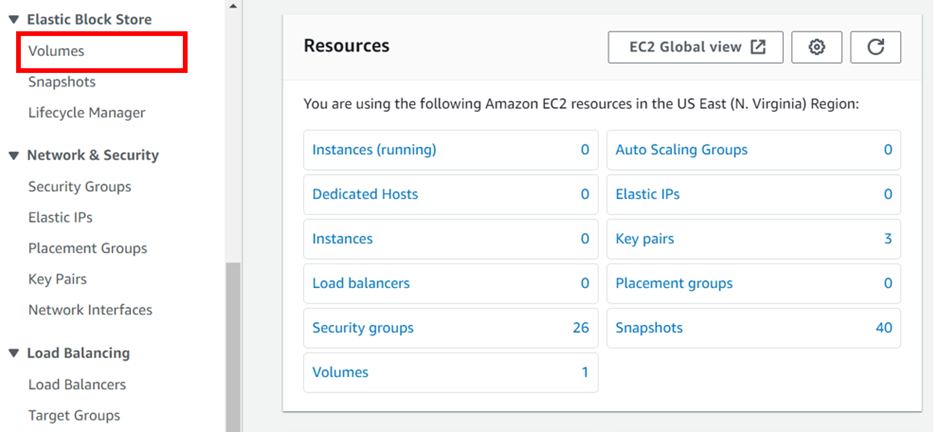

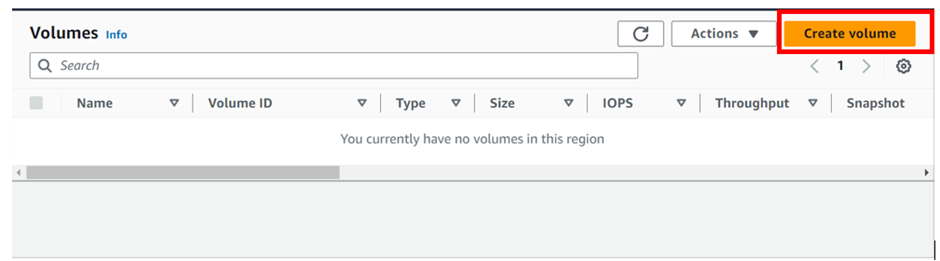

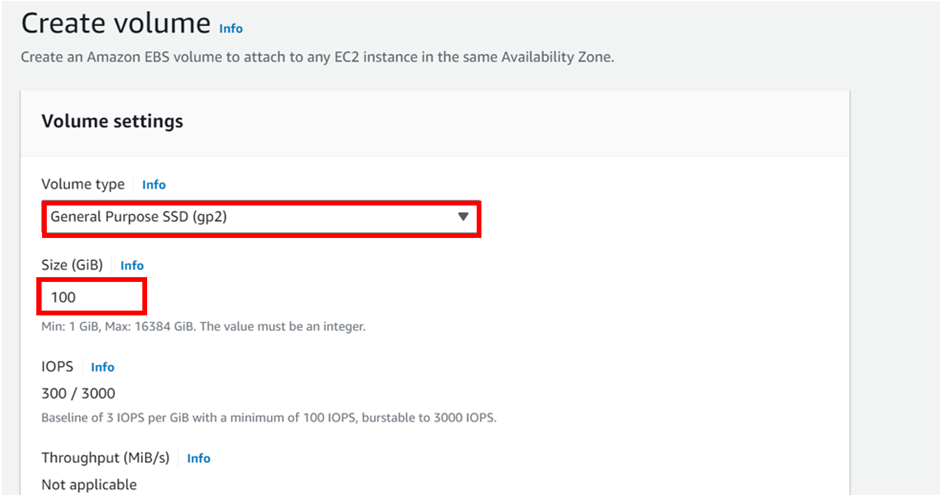

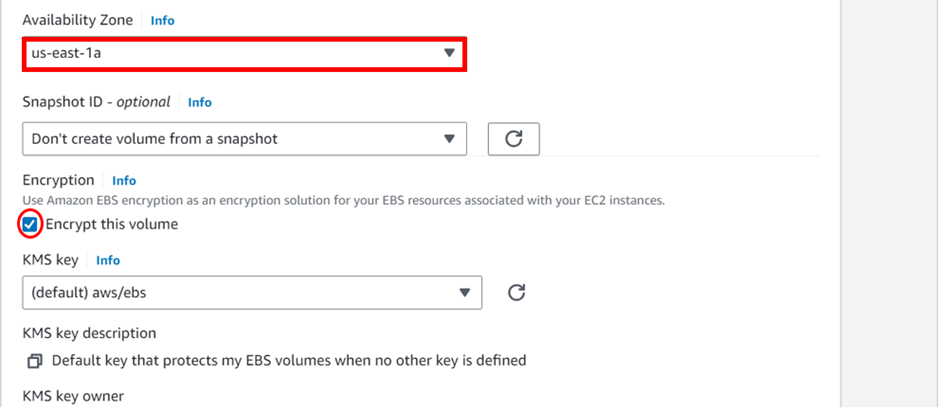

Get EBS Volume Information

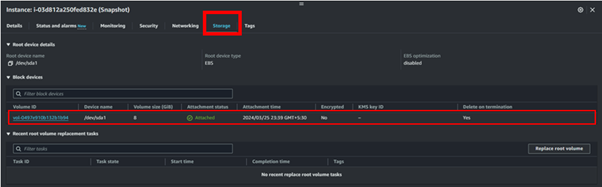

Amazon Elastic Block Store (Amazon EBS) is a scalable block storage service from Amazon Web Services (AWS) that provides persistent storage volumes for EC2 instances. To view the volumes attached to your instance, select the instance in the EC2 dashboard and open the Storage tab.
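The same lookup can be scripted. The following is a minimal sketch, assuming you pass in a boto3 EC2 client (for example `boto3.client("ec2")`); the helper name `volumes_for_instance` is hypothetical and pagination is ignored for brevity.

```python
from typing import Any


def volumes_for_instance(ec2_client: Any, instance_id: str) -> list[str]:
    """Return the IDs of the EBS volumes attached to one EC2 instance.

    ec2_client is assumed to behave like a boto3 EC2 client.
    """
    # describe_volumes supports filtering on the attached instance ID.
    response = ec2_client.describe_volumes(
        Filters=[{"Name": "attachment.instance-id", "Values": [instance_id]}]
    )
    return [volume["VolumeId"] for volume in response["Volumes"]]
```

With real credentials configured, calling `volumes_for_instance(boto3.client("ec2"), "<your-instance-id>")` should list the same volume IDs that the Storage tab shows.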

Take a Snapshot of the Volume

An Amazon EBS snapshot is a point-in-time backup of an EBS volume, stored in Amazon S3. It captures the data that has been written to the volume at the time the snapshot is taken. EBS snapshots are commonly used for data backup, disaster recovery, and creating new volumes from existing data.

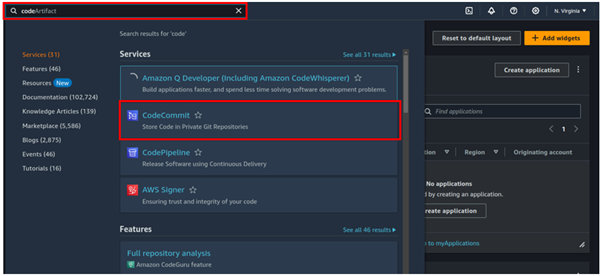

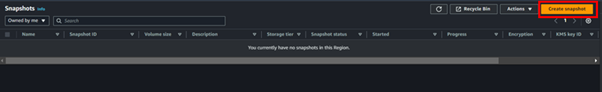

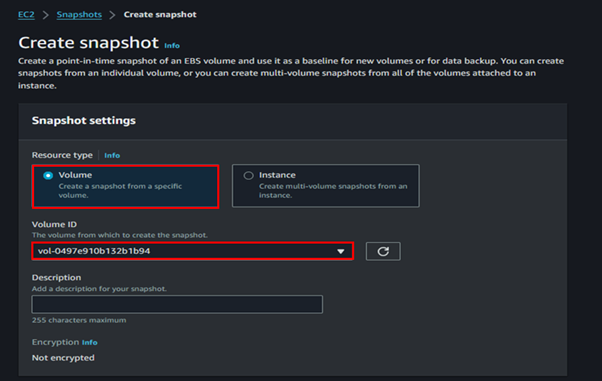

In the left navigation pane of the EC2 console, click Snapshots, then click Create snapshot.

On the Create snapshot page, under Resource type, select Volume. Then, under Volume ID, open the drop-down and select your EBS volume.

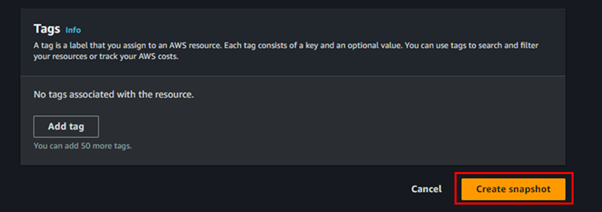

Scroll down and click Create Snapshot.

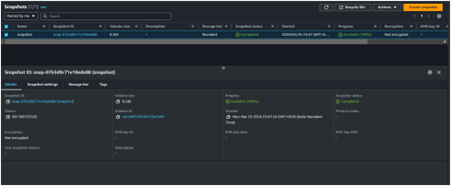

Success.
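For repeatable setups, the console steps above can be sketched in code. This is an illustrative example, assuming a boto3 EC2 client is passed in; the function name `create_volume_snapshot` and the default description are hypothetical.

```python
from typing import Any


def create_volume_snapshot(
    ec2_client: Any,
    volume_id: str,
    description: str = "Recycle Bin demo snapshot",
) -> str:
    """Start a snapshot of the given EBS volume and return its snapshot ID.

    ec2_client is assumed to behave like a boto3 EC2 client.
    """
    response = ec2_client.create_snapshot(
        VolumeId=volume_id,
        Description=description,
    )
    # The snapshot is created asynchronously; it may still be pending here.
    return response["SnapshotId"]
```

The returned ID is what you would later look for on the Snapshots page and, after deletion, in the Recycle Bin.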

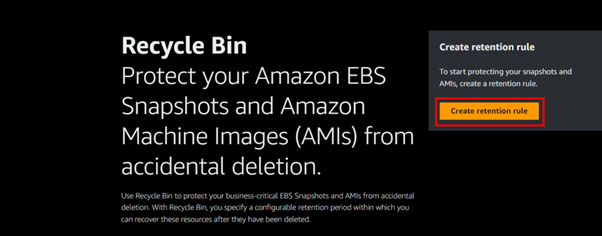

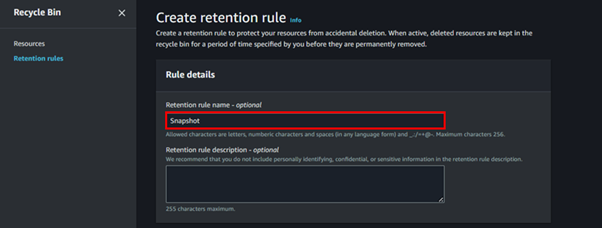

Head to the Recycle Bin console and click Create retention rule.

Fill in the retention rule details.

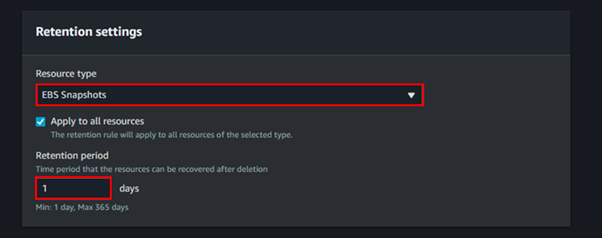

Under Retention settings, open the Resource type drop-down and select EBS Snapshot. Tick the Apply to all resources box, then set the retention period to one day.

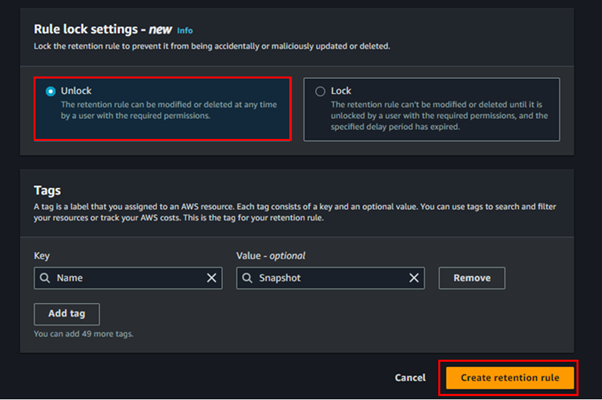

For the Rule lock settings, select Unlock.

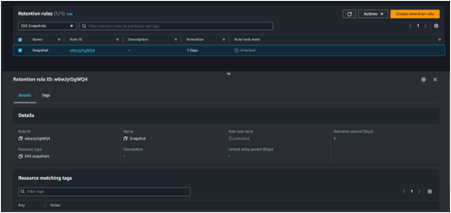

Rule Created
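The rule configured in the console can also be expressed as API parameters. The sketch below assumes a boto3 Recycle Bin client (`boto3.client("rbin")`) is passed in; the helper names and the description text are hypothetical. Leaving out `ResourceTags` makes the rule apply to all EBS snapshots in the Region, and leaving out `LockConfiguration` keeps the rule unlocked, which mirrors the choices made above.

```python
from typing import Any


def retention_rule_params(days: int = 1) -> dict:
    """Build create_rule parameters for an unlocked, Region-level snapshot rule."""
    # Recycle Bin retention periods range from 1 day to 1 year.
    if not 1 <= days <= 365:
        raise ValueError("retention period must be between 1 and 365 days")
    return {
        "Description": "Retain deleted EBS snapshots",  # hypothetical text
        "ResourceType": "EBS_SNAPSHOT",
        "RetentionPeriod": {
            "RetentionPeriodValue": days,
            "RetentionPeriodUnit": "DAYS",
        },
        # No ResourceTags: the rule matches every snapshot in the Region.
        # No LockConfiguration: the rule stays unlocked.
    }


def create_retention_rule(rbin_client: Any, days: int = 1) -> str:
    """Create the retention rule and return its identifier."""
    response = rbin_client.create_rule(**retention_rule_params(days))
    return response["Identifier"]
```

Keeping the parameter builder separate from the API call makes the rule easy to review before anything is created in your account.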

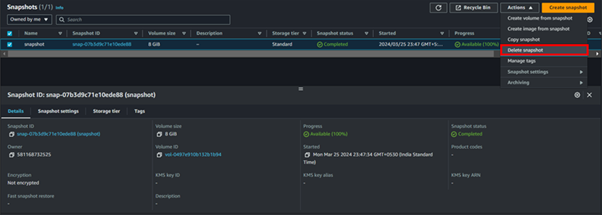

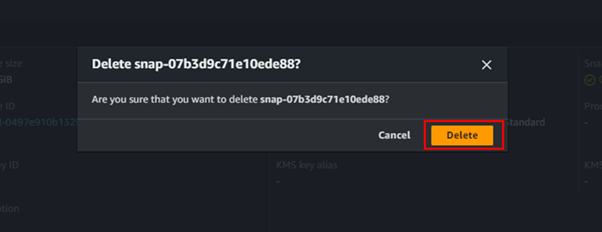

Now go ahead and delete the snapshot.

Then open the Recycle Bin.

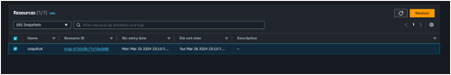

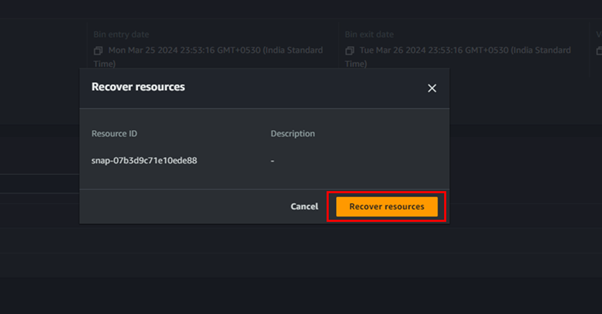

Click the snapshot in the Recycle Bin, then click Recover to restore it.

Objective achieved.

Snapshot recovered successfully.
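The delete-and-recover test can be sketched in code as well. This example assumes a boto3 EC2 client is passed in and that a matching retention rule already exists; the function name `delete_and_restore` is hypothetical. It returns `False` when the deleted snapshot is not found in the Recycle Bin, which is what you would expect if no rule matched it.

```python
from typing import Any


def delete_and_restore(ec2_client: Any, snapshot_id: str) -> bool:
    """Delete a snapshot, then restore it if it landed in the Recycle Bin.

    ec2_client is assumed to behave like a boto3 EC2 client.
    Returns True if the snapshot was found in the Recycle Bin and a
    restore was requested, False otherwise.
    """
    ec2_client.delete_snapshot(SnapshotId=snapshot_id)

    # Check whether a retention rule caught the deleted snapshot.
    listing = ec2_client.list_snapshots_in_recycle_bin(SnapshotIds=[snapshot_id])
    in_bin = any(
        snapshot["SnapshotId"] == snapshot_id for snapshot in listing["Snapshots"]
    )

    if in_bin:
        ec2_client.restore_snapshot_from_recycle_bin(SnapshotId=snapshot_id)
    return in_bin
```

Run this only against a test snapshot: if no retention rule matches, the deletion is permanent.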

Conclusion

The AWS Recycle Bin service offers a valuable layer of protection against accidental deletions, ensuring that critical resources like EBS snapshots and AMIs can be recovered within a defined period. Whether you’re protecting against human error or looking to strengthen your disaster recovery strategy, AWS Recycle Bin is an essential tool in your AWS toolkit.

This brings us to the end of this article.

Thanks for reading and stay tuned for more.

If you have any questions concerning this article or have an AWS project that requires our assistance, please reach out to us by leaving a comment below or email us at sales@accendnetworks.com.

Thank you!