Using an Alias for Your AWS Account ID

AWS (Amazon Web Services) account IDs are unique identifiers that serve as key elements when managing resources, executing services, or configuring security measures. But remembering a 12-digit number can be tough. As a result, AWS allows users to create nicknames (aliases) for their account ID, offering an easier way to reference an account. This guide explores the benefits and steps for setting up an alias for your AWS account ID.

Why Use an Alias for Your AWS Account ID?

Readability and Memorability: A 12-digit number can be hard to recall. An alias, on the other hand, is a user-friendly label that can be descriptive and easy to recognize.

Enhanced Collaboration: If you’re working in a team, sharing an alias (like “development-team-account” or “finance-dept”) makes it easier for others to understand whose account it is.

Organizational Clarity: In a large-scale AWS environment with multiple accounts under an organization, using aliases can simplify identifying accounts, especially in multi-account setups.

Security by Hiding Details: While the account ID is not sensitive by itself, using an alias may help obscure the raw ID when sharing AWS resources or working with third-party tools.

Where AWS Account Aliases Are Useful

AWS account aliases are primarily used in these scenarios:

IAM Sign-in URLs: The default sign-in URL for IAM users is based on the AWS account ID. By creating an alias, you can replace the numeric ID in that URL with the alias for easier access.
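To make the URL change concrete, here is a minimal sketch that builds both forms of the sign-in URL. It assumes the standard `https://<id-or-alias>.signin.aws.amazon.com/console` pattern; the function name `signin_url` and the sample account ID and alias are hypothetical placeholders.

```python
def signin_url(account_id_or_alias: str) -> str:
    """Build the IAM user sign-in URL for an account ID or an alias."""
    return f"https://{account_id_or_alias}.signin.aws.amazon.com/console"


# Placeholder values for illustration only.
print(signin_url("123456789012"))   # URL based on the numeric account ID
print(signin_url("finance-dept"))   # URL based on the account alias
```

The only part of the URL that differs is the leading subdomain, which is why the alias is easier to share and remember.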

Setting Up an AWS Account Alias

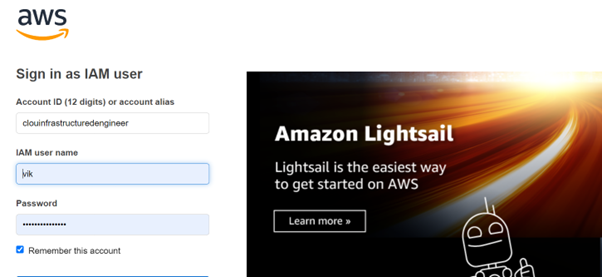

Log in to the AWS Management Console as an IAM user with admin privileges, or as a user who has the following IAM permissions:

- iam:ListAccountAliases

- iam:CreateAccountAlias
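If you prefer not to grant full admin rights, the two permissions above could be packaged in a small IAM policy. The sketch below builds such a policy document as a Python dictionary and prints it as JSON. The variable name `alias_policy` is hypothetical, and the policy is only an illustration of the permissions listed here, not a recommended production policy.

```python
import json

# Hypothetical policy that allows only the two actions listed above.
alias_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": [
                "iam:ListAccountAliases",
                "iam:CreateAccountAlias",
            ],
            # Assumption: alias actions apply to the account as a whole,
            # so the resource is left as a wildcard.
            "Resource": "*",
        }
    ],
}

# Print the policy as JSON so it can be pasted into the IAM policy editor.
print(json.dumps(alias_policy, indent=2))
```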

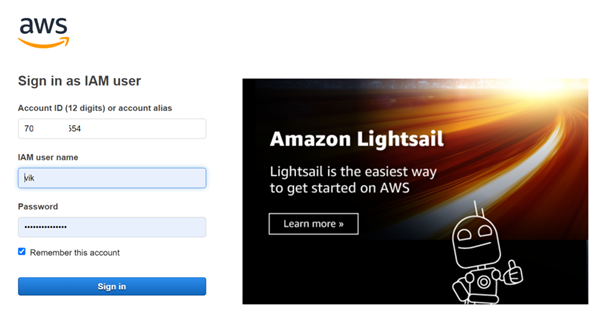

As you can see, when you sign in as an IAM user you must provide your AWS account ID, which can be hard to remember. This is where the account alias comes in handy.

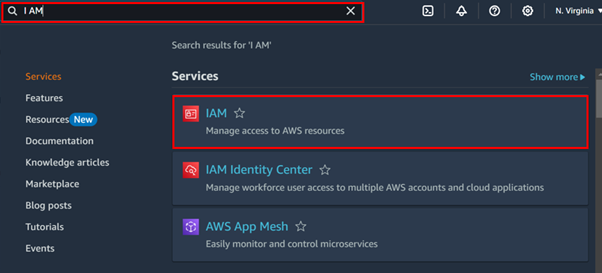

Once logged in to your AWS account, type IAM in the search bar, then select IAM under Services.

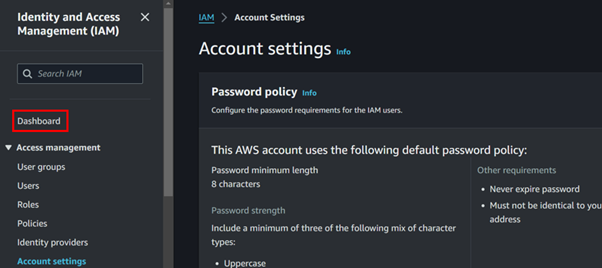

In the navigation pane on the left side of the IAM console, select Dashboard.

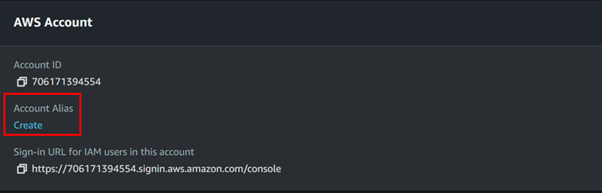

Scroll down to the AWS Account section, where you will find Account Alias, then click Create.

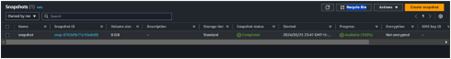

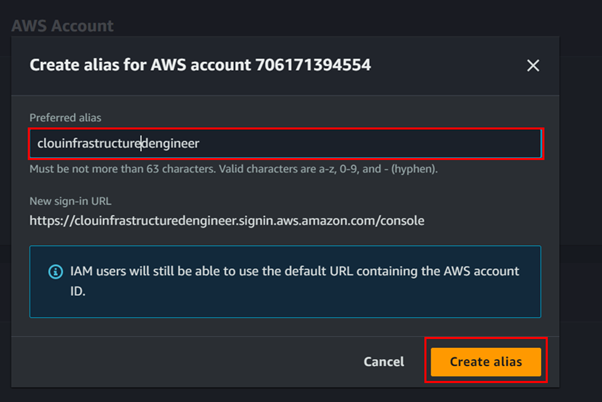

Enter your account alias, keeping in mind that it must be unique across AWS, then click Create.
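Before typing an alias into the console, it can help to check its format locally. The sketch below assumes the alias rules as commonly documented for IAM (3 to 63 characters; lowercase letters, digits, and hyphens only; no leading or trailing hyphen; no two hyphens in a row). Treat the pattern as an assumption and confirm it against the current AWS documentation. The helper name `is_valid_alias_format` is hypothetical, and the check covers format only, not whether the alias is already taken.

```python
import re

# Assumed rule set: 3-63 characters, lowercase letters, digits, and hyphens,
# no leading or trailing hyphen, and no two consecutive hyphens.
_ALIAS_PATTERN = re.compile(r"^[a-z0-9]([a-z0-9]|-(?!-)){1,61}[a-z0-9]$")


def is_valid_alias_format(alias: str) -> bool:
    """Return True if the alias matches the assumed format rules."""
    return _ALIAS_PATTERN.fullmatch(alias) is not None


# Sample aliases for illustration only.
for candidate in ["finance-dept", "Finance", "-dev", "a--b", "ab"]:
    print(candidate, is_valid_alias_format(candidate))
```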

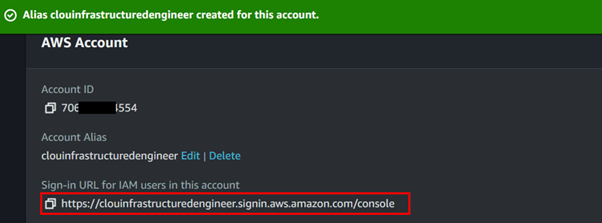

The account alias has been created, and the sign-in URL now uses the alias. Copy the URL to your clipboard, then open a new browser window and paste it in.

The account ID field now shows the account alias. Fill in the remaining required details.

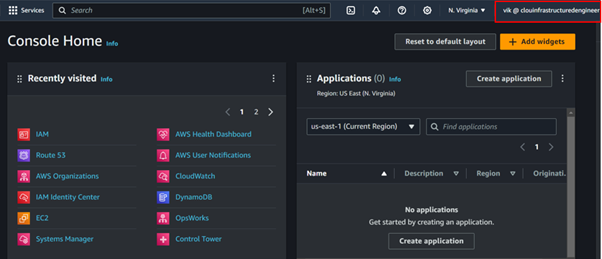

We have successfully logged in to our AWS account using the account alias. If you check the top right corner, you can see that the account alias is displayed instead of the AWS account ID.
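The console steps above can also be scripted. The following is a minimal sketch, assuming you pass in a boto3 IAM client (created with `boto3.client("iam")`) whose credentials carry the two permissions listed earlier. It relies on the client's `list_account_aliases` and `create_account_alias` calls; the function name `ensure_account_alias` and the sample alias are hypothetical.

```python
def ensure_account_alias(iam_client, alias: str) -> bool:
    """Create the alias unless the account already uses it.

    Returns True if the alias was created, False if it was already set.
    """
    # list_account_aliases returns a dict with an "AccountAliases" list.
    current = iam_client.list_account_aliases()["AccountAliases"]
    if alias in current:
        return False
    iam_client.create_account_alias(AccountAlias=alias)
    return True


# Example usage (requires the third-party boto3 package and AWS credentials):
#   import boto3
#   ensure_account_alias(boto3.client("iam"), "finance-dept")
```

Passing the client in as a parameter keeps the function easy to try out with a stand-in object before running it against a real account.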

Security Considerations

While using an alias for your AWS account ID is beneficial, keep in mind:

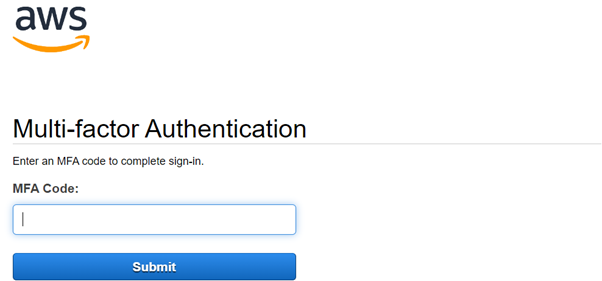

Not a Security Measure: An alias doesn’t provide additional security. It’s purely for convenience. Always ensure that your account is secured using strong IAM policies, MFA (Multi-Factor Authentication), and least-privilege access.

Unique Across AWS: Aliases are globally unique, which could result in name conflicts if your preferred alias is already in use.

Best Practices for AWS Account Aliases

Choose a Descriptive Alias: Your alias should make sense within your organization. Use department names, environments (e.g., development, production), or geographical regions to make the account easily identifiable.

Keep Aliases Short: Longer aliases can make the IAM URL cumbersome. A good balance is a short but meaningful name.

Conclusion

With just a few clicks, you can set an easily recognizable alias that replaces the default 12-digit numeric account ID in several key places.

That’s it, thanks for reading, and stay tuned for more.

If you have any questions concerning this article or have an AWS project that requires our assistance, please reach out to us by leaving a comment below or email us at sales@accendnetworks.com.

Thank you!