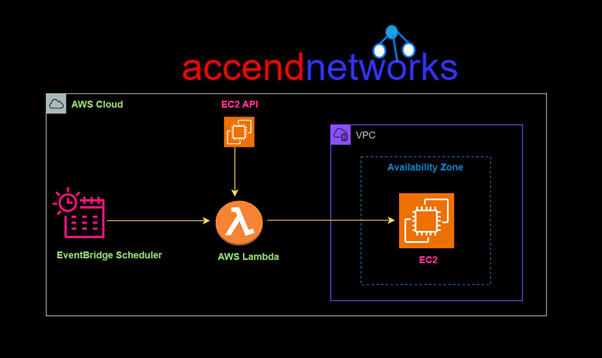

Automating Your Infrastructure: Leveraging AWS Lambda for Efficient Stale EBS Snapshot Cleanup

EBS snapshots are backups of your EBS volumes and can also be used to create new EBS volumes or Amazon Machine Images (AMIs). However, they can become orphaned when instances are terminated or volumes are deleted. These unused snapshots take up space and incur unnecessary costs.

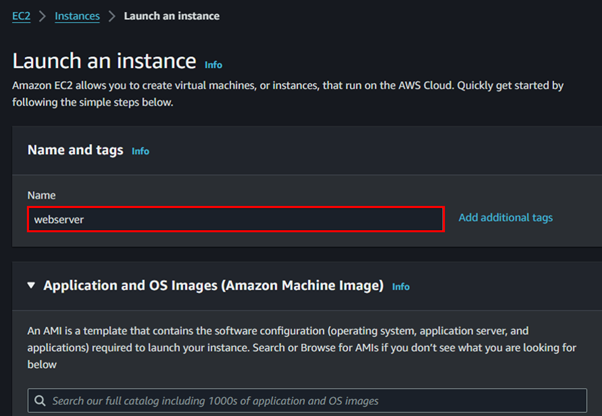

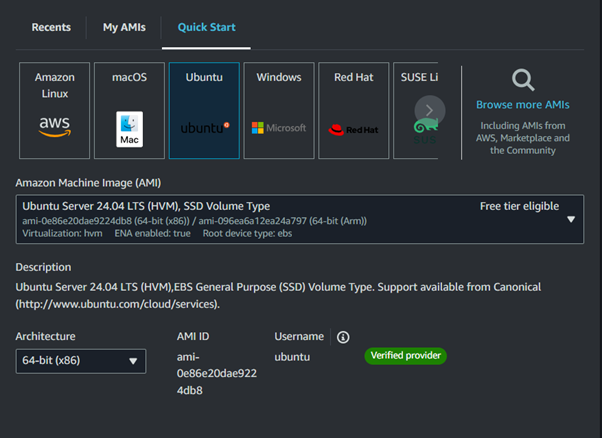

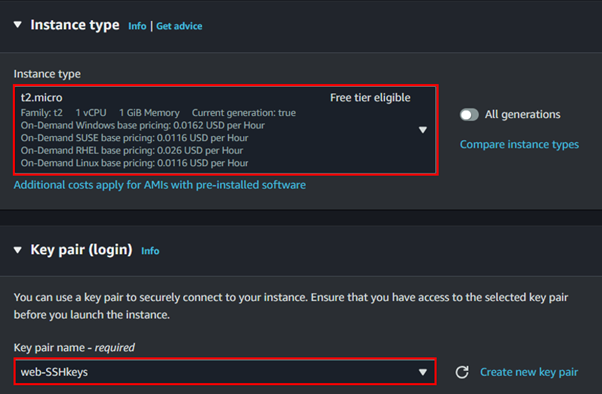

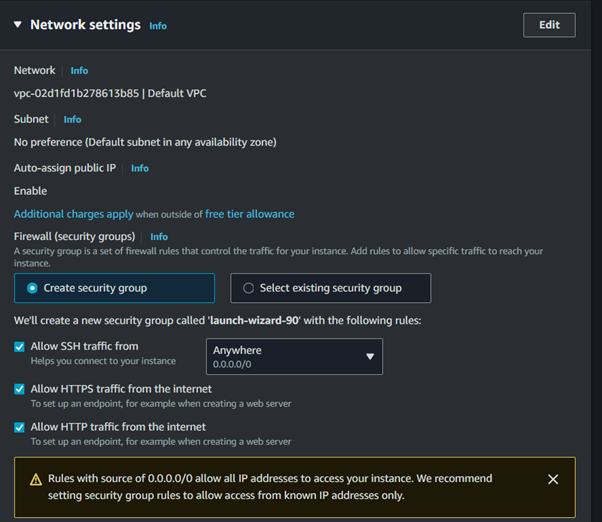

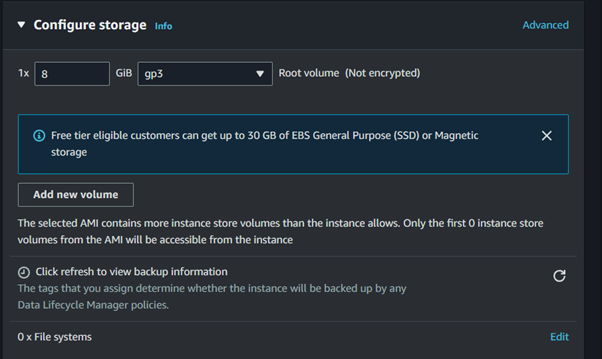

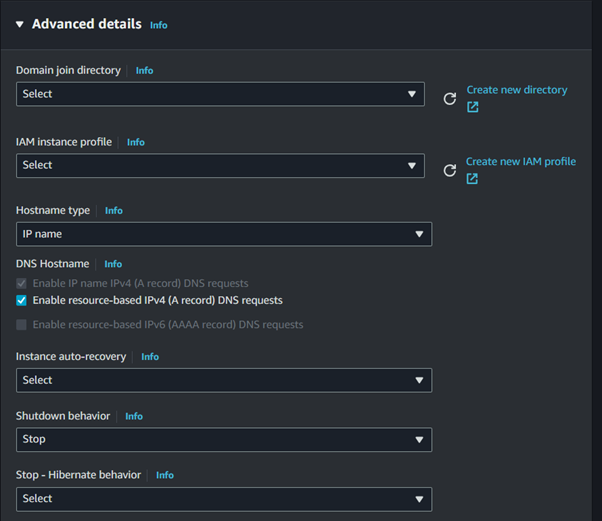

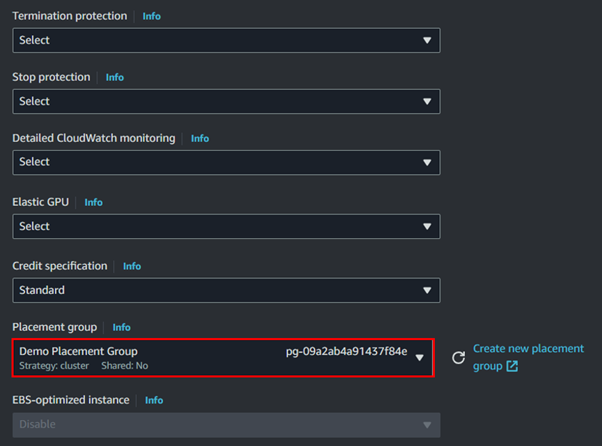

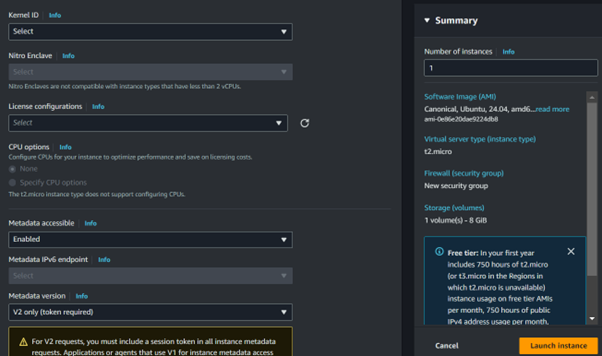

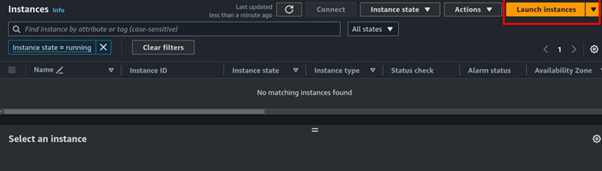

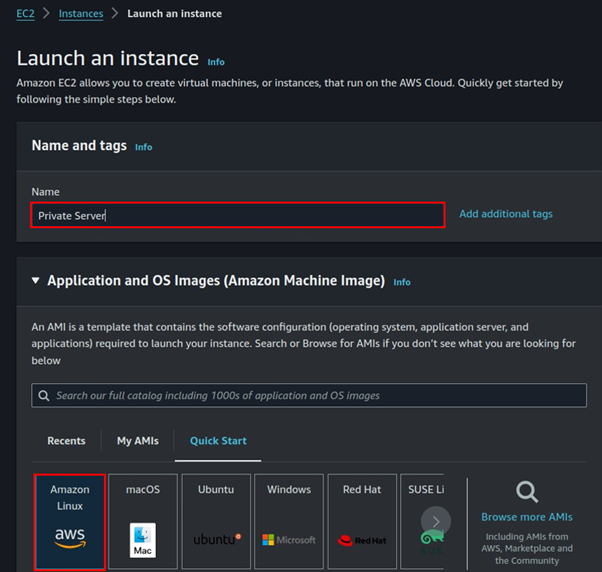

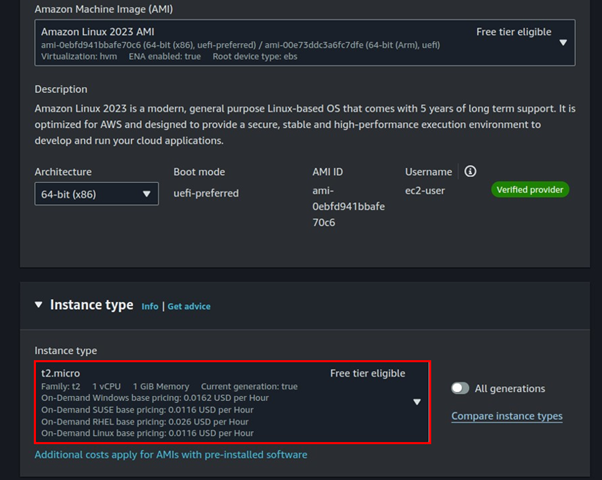

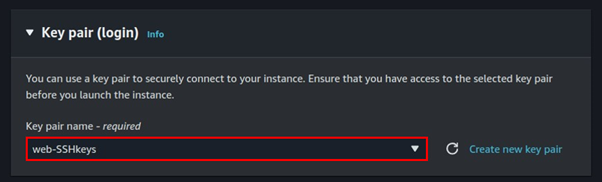

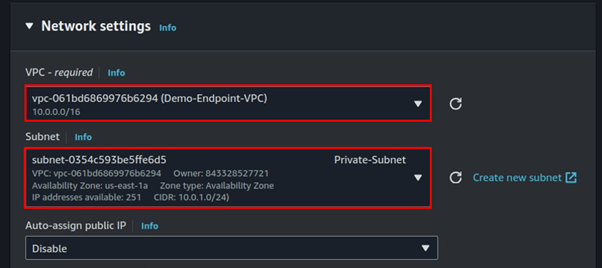

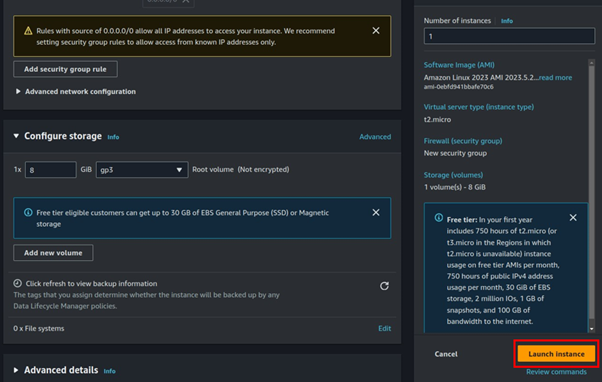

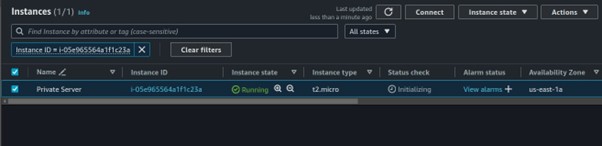

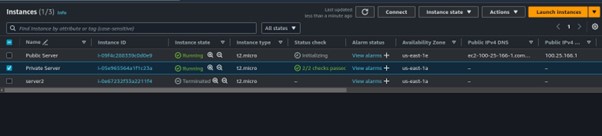

Before proceeding, ensure that you have an EC2 instance up and running as a prerequisite.

AWS Lambda creation

We will configure a Lambda function that automatically deletes stale EBS snapshots when triggered.

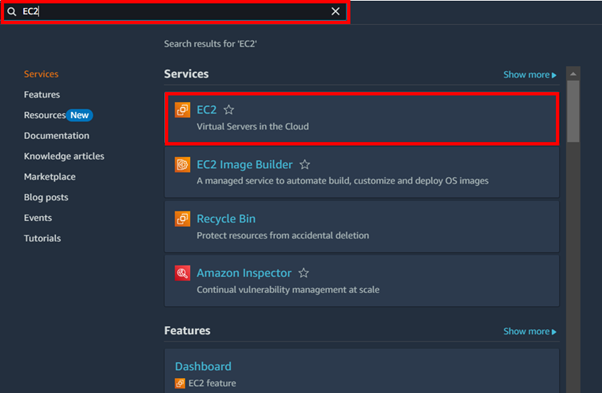

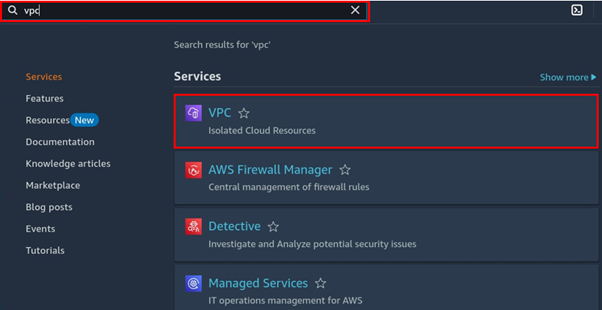

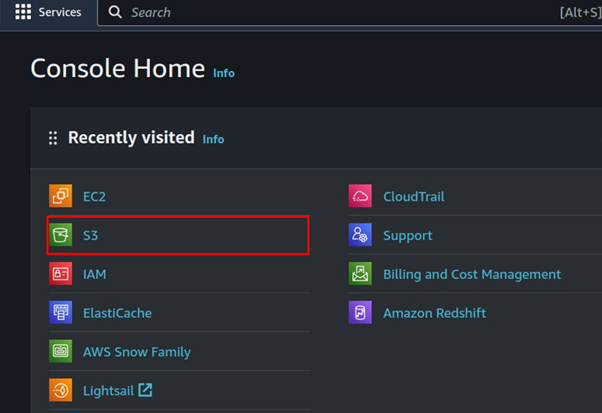

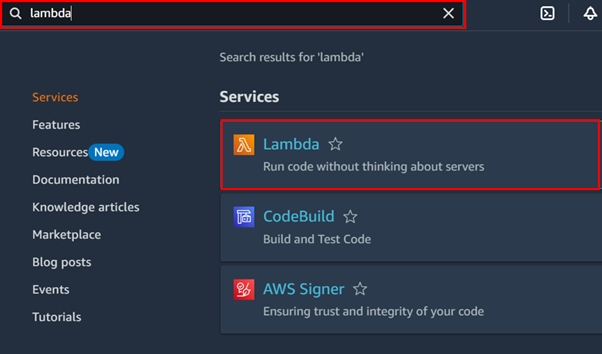

To get started, log in to the AWS Management Console and navigate to the AWS Lambda dashboard. Simply type “Lambda” in the search bar and select Lambda under the services section. Let’s proceed to create our Lambda function.

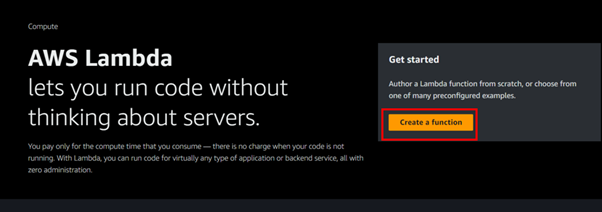

In the Lambda dashboard, click on Create Function.

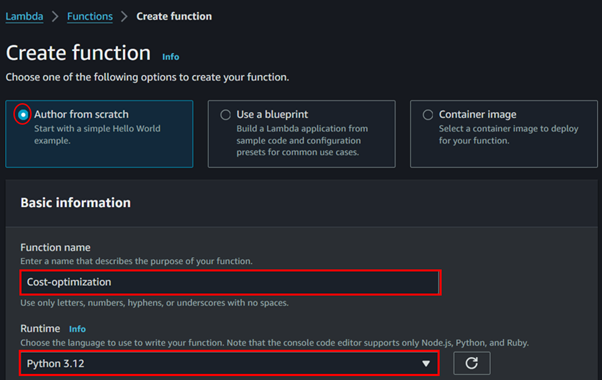

For the creation method, select the Author from scratch radio button to build a new Lambda function without a template.

Next, configure the basic information by giving your Lambda function a meaningful name.

Then, select the runtime environment. Since we are using Python, choose Python 3.12.

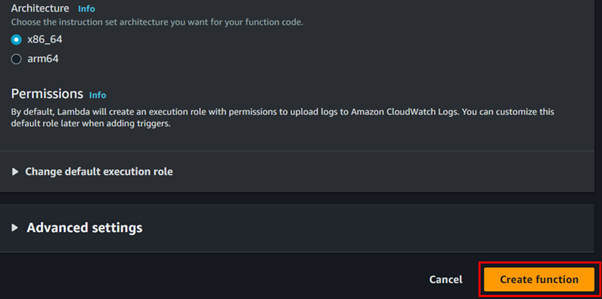

These are the only settings required to create your Lambda function. Click Create function.

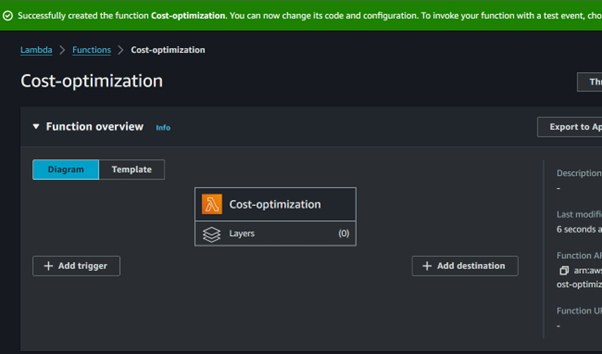

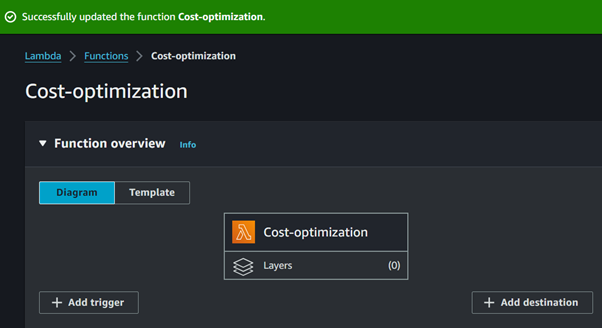

Our function has been successfully created.

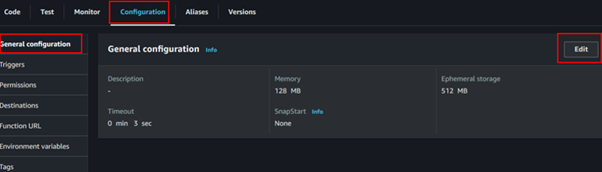

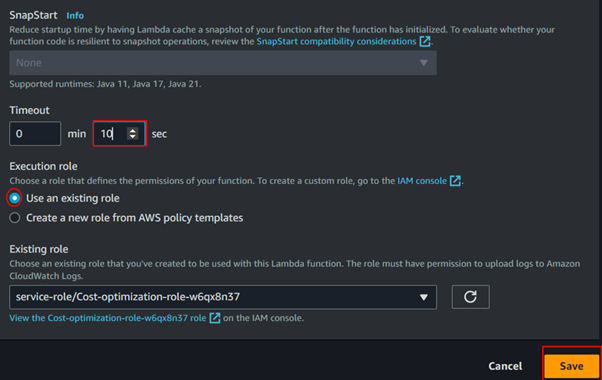

The Lambda timeout is the maximum amount of time the function can run before being terminated. By default it is set to 3 seconds. We will raise it to 10 seconds.

To make this adjustment, navigate to the Configuration tab, then click on General Configuration. From there, locate and click the Edit button.

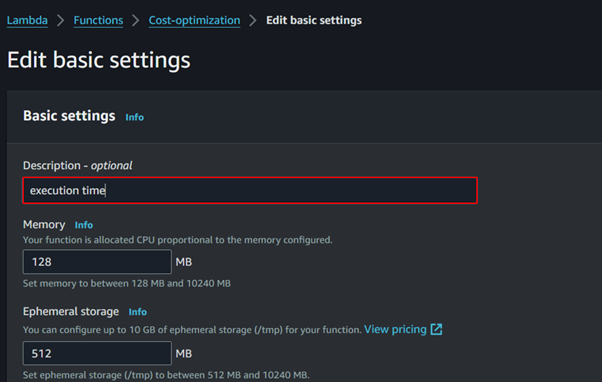

On the Edit basic settings page, scroll down to the Timeout section, set the value to 10 seconds, then click Save.
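The same timeout change can also be scripted. The following is a minimal sketch, assuming boto3 is installed and credentials are configured. The helper name `set_lambda_timeout` and the function name `stale-snapshot-cleanup` are hypothetical; the client is passed in as an argument so the helper can be tried without touching a real account.

```python
def set_lambda_timeout(lambda_client, function_name, seconds=10):
    """Set a Lambda function's timeout and return the value reported back."""
    # Lambda accepts timeouts from 1 second up to 900 seconds (15 minutes).
    if not 1 <= seconds <= 900:
        raise ValueError("Lambda timeouts must be between 1 and 900 seconds")
    response = lambda_client.update_function_configuration(
        FunctionName=function_name,
        Timeout=seconds,
    )
    return response["Timeout"]


# Usage against a real account (requires credentials and boto3):
#   import boto3
#   set_lambda_timeout(boto3.client("lambda"), "stale-snapshot-cleanup", 10)
```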

Writing the Lambda Function

    import boto3


    def lambda_handler(event, context):
        ec2 = boto3.client('ec2')

        # Get all EBS snapshots owned by this account
        response = ec2.describe_snapshots(OwnerIds=['self'])

        # Get all running EC2 instance IDs
        # (collected for reference; the checks below only test whether the volume has any attachment)
        instances_response = ec2.describe_instances(Filters=[{'Name': 'instance-state-name', 'Values': ['running']}])
        active_instance_ids = set()
        for reservation in instances_response['Reservations']:
            for instance in reservation['Instances']:
                active_instance_ids.add(instance['InstanceId'])

        # Iterate through each snapshot and delete it if it has no source volume,
        # or if the source volume is missing or not attached to anything
        for snapshot in response['Snapshots']:
            snapshot_id = snapshot['SnapshotId']
            volume_id = snapshot.get('VolumeId')

            if not volume_id:
                # Delete the snapshot if it is not associated with any volume
                ec2.delete_snapshot(SnapshotId=snapshot_id)
                print(f"Deleted EBS snapshot {snapshot_id} as it was not attached to any volume.")
            else:
                # Check if the volume still exists
                try:
                    volume_response = ec2.describe_volumes(VolumeIds=[volume_id])
                    if not volume_response['Volumes'][0]['Attachments']:
                        ec2.delete_snapshot(SnapshotId=snapshot_id)
                        print(f"Deleted EBS snapshot {snapshot_id} as it was taken from a volume not attached to any running instance.")
                except ec2.exceptions.ClientError as e:
                    if e.response['Error']['Code'] == 'InvalidVolume.NotFound':
                        # The volume associated with the snapshot is not found (it might have been deleted)
                        ec2.delete_snapshot(SnapshotId=snapshot_id)
                        print(f"Deleted EBS snapshot {snapshot_id} as its associated volume was not found.")
                    else:
                        # Surface any other API error instead of silently ignoring it
                        raise

Our Lambda function, powered by Boto3, automates the identification and deletion of stale EBS snapshots.
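Before letting a function like this delete anything, it can help to list what it would remove. The sketch below is one possible dry-run variant, not part of the function above: `find_stale_snapshot_ids` is a hypothetical helper that applies the same checks but only collects snapshot IDs. It also uses boto3's paginator, a common way to make sure every page of `describe_snapshots` results is visited in accounts with many snapshots. It catches a generic exception and inspects its `response` attribute (which botocore's `ClientError` provides) so it can be exercised with a stand-in client.

```python
def find_stale_snapshot_ids(ec2):
    """Return IDs of snapshots whose source volume is missing or unattached.

    Applies the same checks as the handler above but deletes nothing.
    """
    stale = []
    paginator = ec2.get_paginator("describe_snapshots")
    for page in paginator.paginate(OwnerIds=["self"]):
        for snapshot in page["Snapshots"]:
            snapshot_id = snapshot["SnapshotId"]
            volume_id = snapshot.get("VolumeId")
            if not volume_id:
                stale.append(snapshot_id)
                continue
            try:
                volumes = ec2.describe_volumes(VolumeIds=[volume_id])["Volumes"]
            except Exception as error:
                # botocore's ClientError carries the error code in .response
                code = getattr(error, "response", {}).get("Error", {}).get("Code")
                if code != "InvalidVolume.NotFound":
                    raise
                stale.append(snapshot_id)
                continue
            if not volumes[0]["Attachments"]:
                stale.append(snapshot_id)
    return stale


# Usage against a real account (requires credentials and boto3):
#   import boto3
#   print(find_stale_snapshot_ids(boto3.client("ec2")))
```

Reviewing this list first is a cheap safeguard, since snapshot deletion cannot be undone from the function itself.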

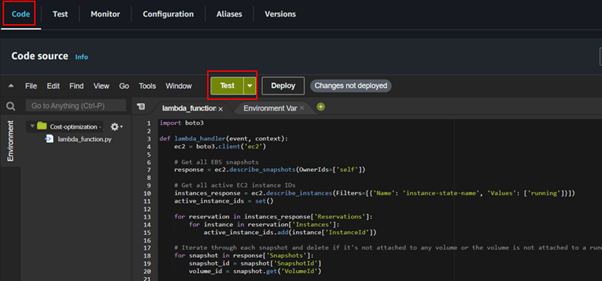

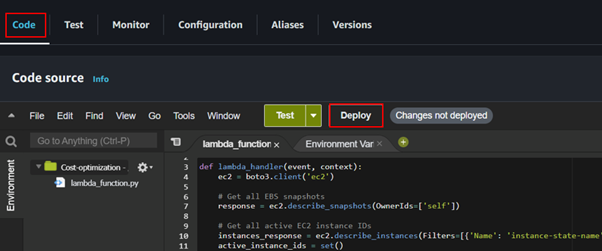

Navigate to the Code tab and paste in the code.

After pasting the code, click Test.

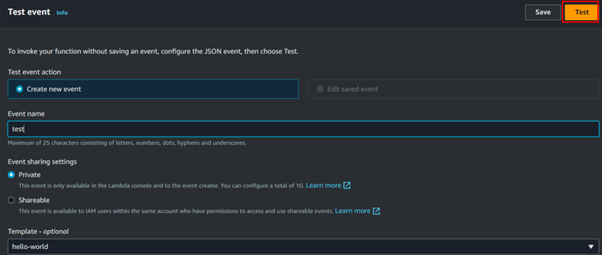

In the test dialog, fill in the event name. You can save the event or simply click Test.

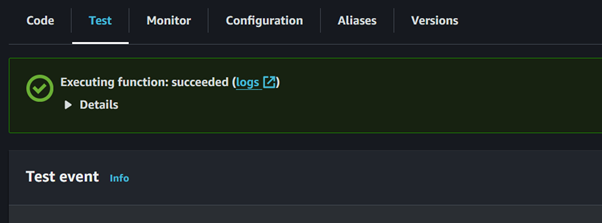

Our test execution is successful.

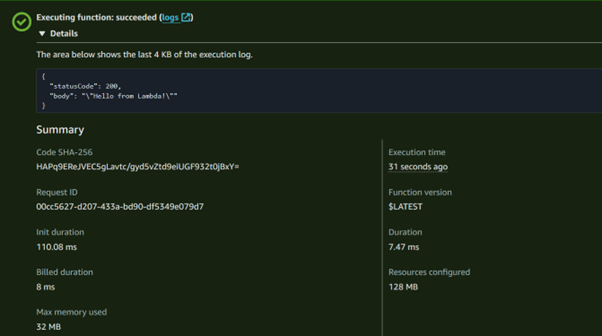

If you expand the view to check the execution details, you should see a status code of 200, indicating that the function executed successfully.

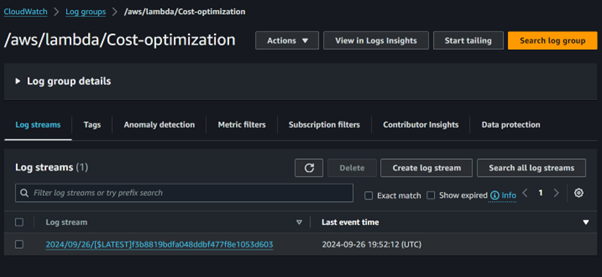

You can also view the log streams to troubleshoot any errors that arise.

IAM Role

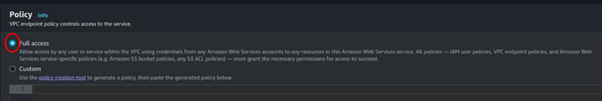

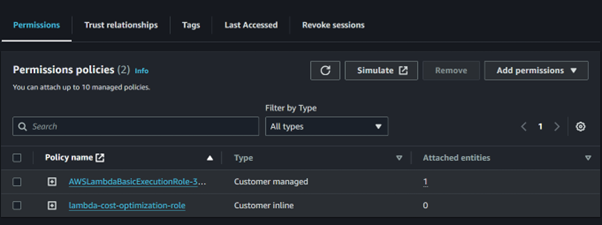

In our project, the Lambda function is central to optimizing AWS costs by identifying and deleting stale EBS snapshots. To accomplish this, it requires specific permissions, including the ability to describe and delete snapshots, as well as to describe volumes and instances.

To ensure our Lambda function has the necessary permissions to interact with EBS and EC2, proceed as follows.

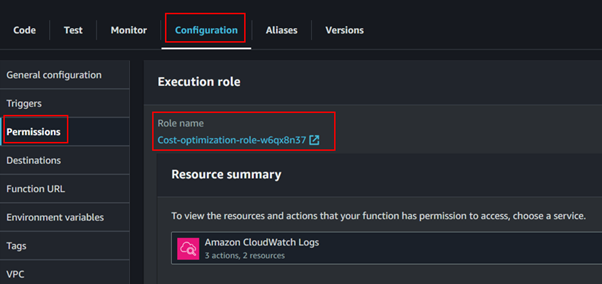

On the Lambda function details page, click the Configuration tab and open the Permissions section. Then click the execution role link to open the IAM role configuration in a new tab.

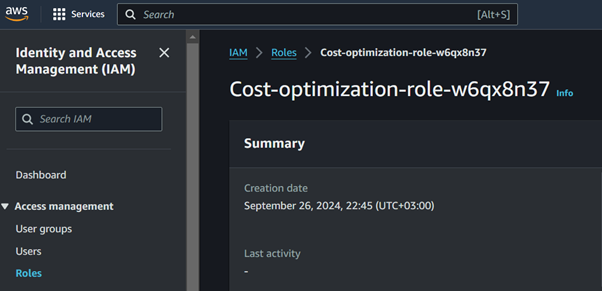

In the new tab that opens, you’ll be directed to the IAM Console with the details of the IAM role associated with your Lambda function.

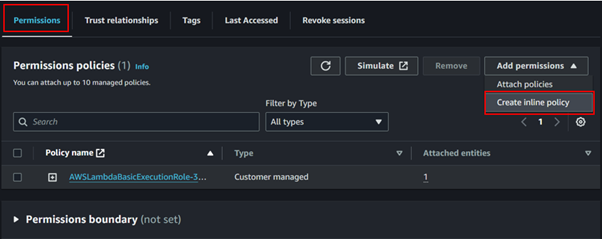

Scroll down to the Permissions section of the IAM role details page, and then click on the Add inline policy button to create a new inline policy.

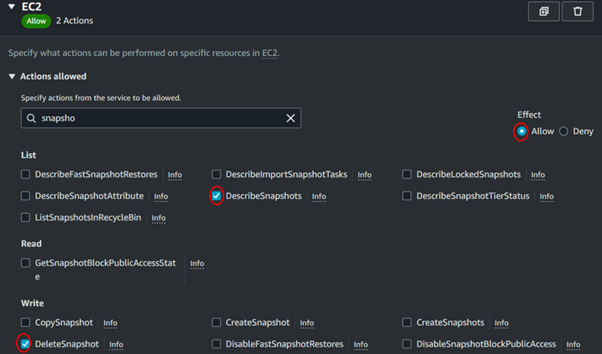

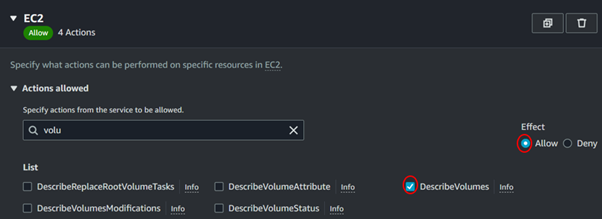

Choose EC2 as the service to filter permissions. Then, search for Snapshot and add the following actions: DescribeSnapshots and DeleteSnapshot.

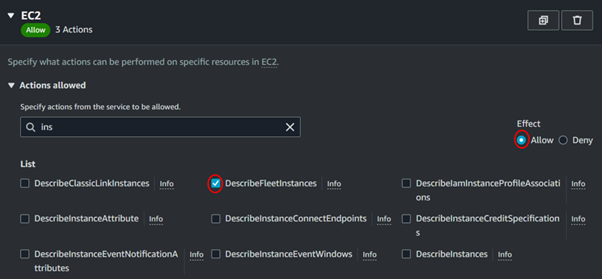

Also add the DescribeInstances and DescribeVolumes permissions.

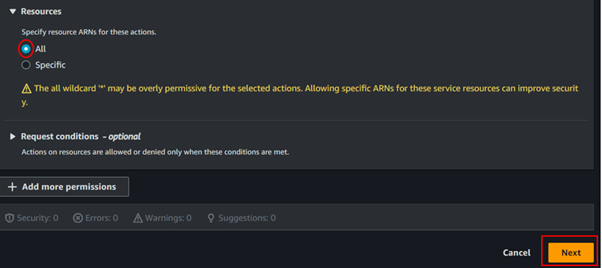

Under the Resources section, select “All” to apply the permissions broadly. Then, click the “Next” button to proceed.
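For reference, the visual editor selections in this section correspond roughly to a JSON policy document like the one built below. This is a sketch under the assumption that all four actions are placed in a single statement; the helper name `build_snapshot_cleanup_policy` is hypothetical. Note that the delete action is the singular `ec2:DeleteSnapshot`.

```python
import json


def build_snapshot_cleanup_policy():
    """Return an IAM policy document granting the four EC2 actions the function calls."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": [
                    "ec2:DescribeSnapshots",
                    "ec2:DeleteSnapshot",
                    "ec2:DescribeInstances",
                    "ec2:DescribeVolumes",
                ],
                # "All" resources in the console maps to a wildcard here.
                "Resource": "*",
            }
        ],
    }


# Print the document so it can be pasted into the JSON tab of the policy editor.
print(json.dumps(build_snapshot_cleanup_policy(), indent=2))
```

The EC2 `Describe*` actions do not support resource-level permissions, so the wildcard is needed for them. In a stricter setup, `ec2:DeleteSnapshot` could be moved to its own statement with a narrower resource.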

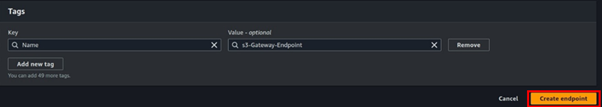

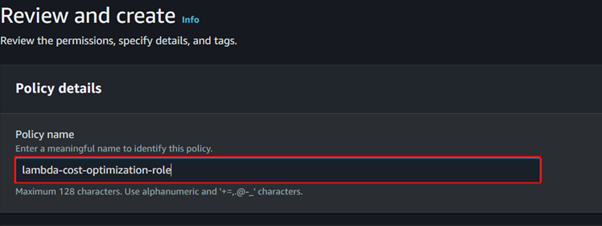

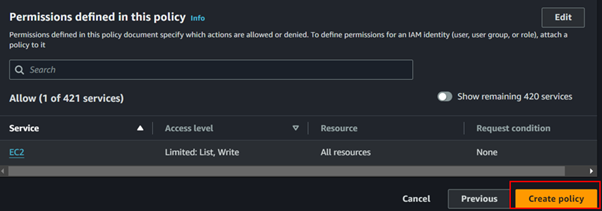

Give the policy a name, then click the Create policy button.

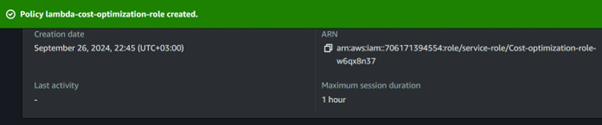

Our policy has been successfully created.

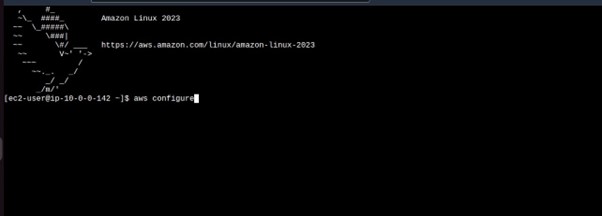

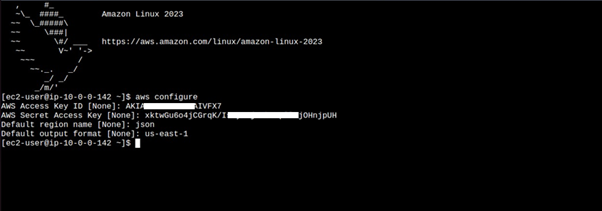

After updating the Lambda function's permissions, click Deploy. Once deployed, the function is ready for invocation. We can invoke it directly, for example through the AWS CLI or an API call, or indirectly through other AWS services.

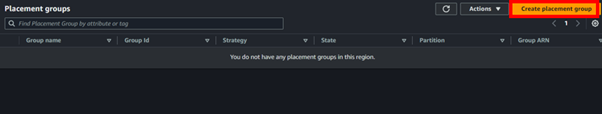

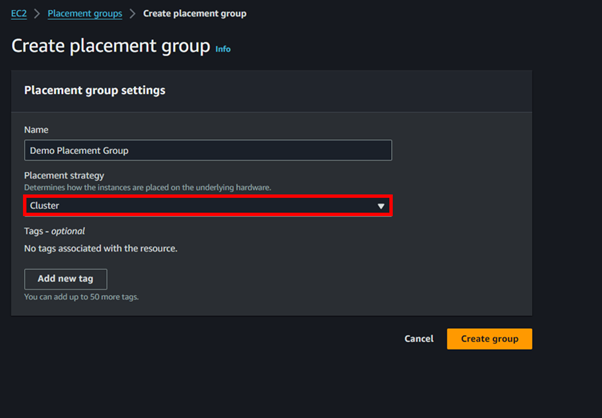

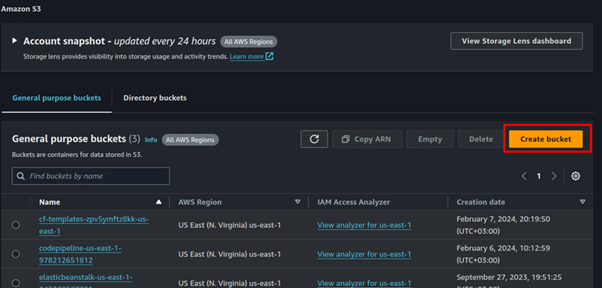

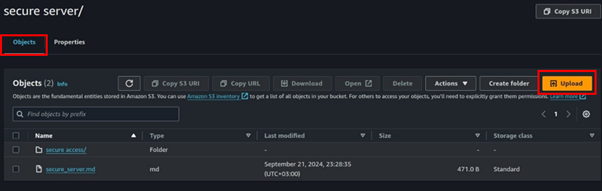

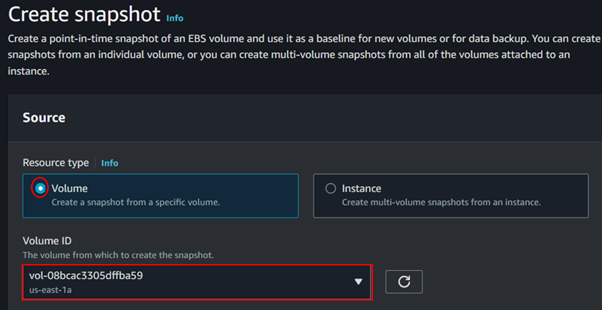

After deployment, let's head to the EC2 console and create a snapshot. Locate Snapshots in the left navigation pane of the EC2 dashboard, then click Create snapshot.

For resource type, select volume. Choose the EBS volume for which you want to create a snapshot from the dropdown menu.

Optionally, add a description for the snapshot to provide more context.

Double-check the details you’ve entered to ensure accuracy.

Once you’re satisfied, click on the Create Snapshot button to initiate the snapshot creation process.
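The console steps above can also be done programmatically. A minimal sketch, assuming boto3 and configured credentials; `create_volume_snapshot` is a hypothetical helper name and the volume ID in the usage comment is a placeholder.

```python
def create_volume_snapshot(ec2, volume_id, description=None):
    """Start a snapshot of the given EBS volume and return the new snapshot ID."""
    params = {"VolumeId": volume_id}
    if description:
        # The description is optional, as in the console form.
        params["Description"] = description
    response = ec2.create_snapshot(**params)
    return response["SnapshotId"]


# Usage against a real account (requires credentials and boto3):
#   import boto3
#   create_volume_snapshot(boto3.client("ec2"), "vol-0123456789abcdef0", "demo snapshot")
```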

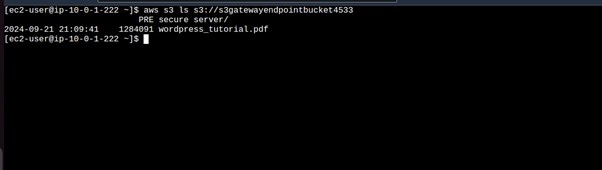

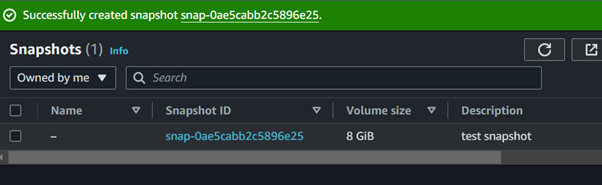

Taking a look at the EC2 dashboard, we can see we have one volume and one snapshot.

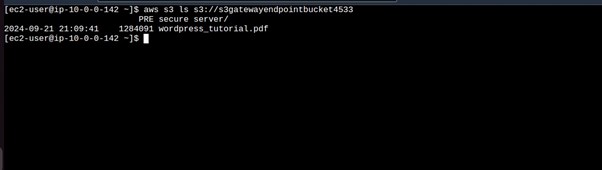

Go ahead and delete the volume, then take another look at the EBS volumes and snapshots. The volume is gone, but we still have one snapshot. We will trigger our Lambda function to delete this stale snapshot.

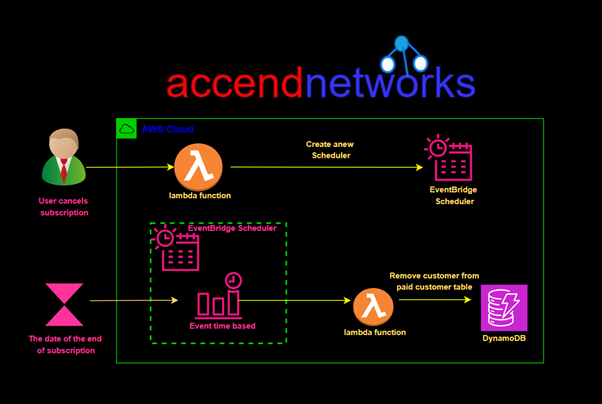

We could use EventBridge Scheduler to trigger our Lambda function on a schedule, which automates this process, but for this demo I will run a CLI command to invoke the function directly. Going back to the EC2 dashboard and checking our snapshots, we can see we now have zero snapshots.
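The exact CLI command is not shown here, but a direct invocation can be sketched with boto3 as well. This is an illustration under assumptions: `invoke_cleanup` is a hypothetical helper, `stale-snapshot-cleanup` is a placeholder function name, and an empty JSON object is sent as the event because the handler does not read it.

```python
import json


def invoke_cleanup(lambda_client, function_name):
    """Invoke the function synchronously and return (status code, response body)."""
    response = lambda_client.invoke(
        FunctionName=function_name,
        InvocationType="RequestResponse",  # wait for the function to finish
        Payload=json.dumps({}).encode("utf-8"),
    )
    # The payload comes back as a stream that must be read.
    body = response["Payload"].read().decode("utf-8")
    return response["StatusCode"], body


# Usage against a real account (requires credentials and boto3):
#   import boto3
#   status, body = invoke_cleanup(boto3.client("lambda"), "stale-snapshot-cleanup")
```

A status code of 200 indicates the synchronous invocation completed; the deletion messages printed by the handler appear in the function's log streams.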

This brings us to the end of this blog. Remember to clean up the resources you created.

Thanks for reading and stay tuned for more.

If you have any questions concerning this article or have an AWS project that requires our assistance, please reach out to us by leaving a comment below or email us at sales@accendnetworks.com.

Thank you!