How To Create an AWS SQS Queue and Send Messages

In cloud computing, efficient communication between components is essential for building robust, scalable applications. Amazon Simple Queue Service (SQS) addresses this need with a reliable, scalable message queuing service.

Understanding Amazon SQS

Amazon SQS is a fully managed message queuing service that offers a secure, durable, and available hosted queue that lets you integrate and decouple distributed software systems and components.

Key Features of Amazon SQS

Scalability: SQS seamlessly scales with the increasing volume of messages, accommodating the dynamic demands of applications without compromising performance.

Reliability: SQS stores messages redundantly across multiple Availability Zones, which minimizes the risk of data loss and improves the overall reliability of applications.

Simple Integration: SQS integrates effortlessly with various AWS services, enabling developers to design flexible and scalable architectures without the need for complex configurations.

Fully Managed: As a fully managed service, SQS takes care of administrative tasks such as hardware provisioning, software setup, and maintenance, allowing developers to focus on building resilient applications.

Different Queue Types: SQS supports both standard and FIFO (First-In-First-Out) queues, so you can choose the queue type that matches your ordering and delivery requirements.

Use Cases of Amazon SQS

Decoupling Microservices: SQS plays a pivotal role in microservices architectures by decoupling individual services, allowing them to operate independently and asynchronously.

Batch Processing: The ability of SQS to handle large volumes of messages makes it well-suited for batch processing scenarios, where efficiency and reliability are paramount.

Event-Driven Architectures: SQS integrates seamlessly with AWS Lambda, making it an ideal choice for building event-driven architectures. It ensures that events are reliably delivered and processed by downstream services.

There are two types of queues:

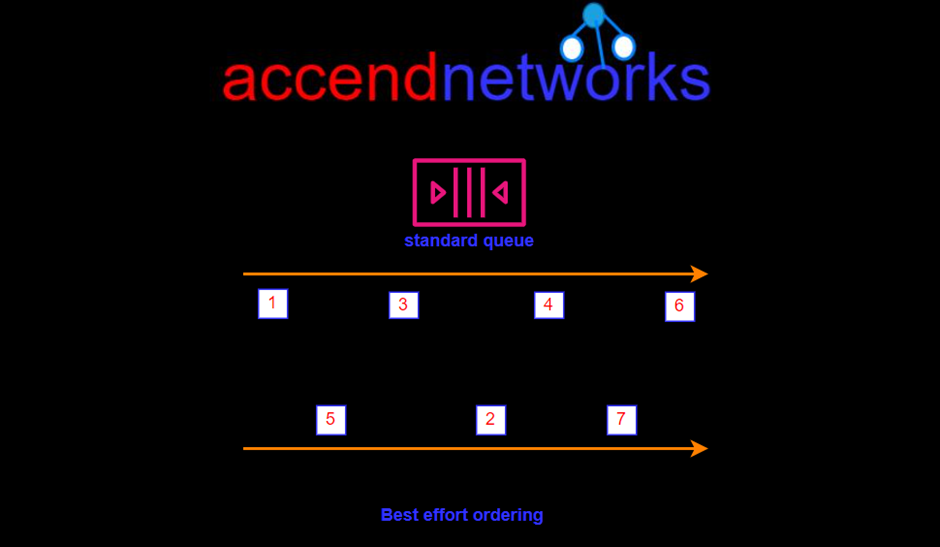

Standard Queues (default)

SQS offers the standard queue as the default queue type. Standard queues support a nearly unlimited number of API calls per second. They guarantee at-least-once delivery: every message is delivered at least once, but occasionally more than one copy of a message may be delivered. They also provide best-effort ordering, which means messages are generally delivered in the order they were sent, but this is not guaranteed.
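Because a standard queue can deliver the same message more than once, consumers are usually written to be idempotent. The sketch below is a minimal illustration under stated assumptions: the helper `handle_once` is hypothetical, it keys on the `MessageId` field of received messages, and it keeps the already-seen IDs in an in-memory set. A real consumer would more likely store that state durably (for example in a database) or key on an application-level ID if the producer itself might send the same event twice.

```python
from typing import Callable, Dict, List, Set


def handle_once(
    messages: List[Dict[str, str]],
    seen_ids: Set[str],
    process: Callable[[str], None],
) -> int:
    """Run `process` on each message body at most once, keyed on MessageId.

    `messages` mimics the "Messages" list of an SQS ReceiveMessage response;
    only the MessageId and Body fields are read. Returns how many messages
    were actually processed (duplicates are skipped).
    """
    processed = 0
    for msg in messages:
        msg_id = msg["MessageId"]
        if msg_id in seen_ids:
            # Duplicate delivery: the side effect already happened, so skip it.
            continue
        process(msg["Body"])
        seen_ids.add(msg_id)
        processed += 1
    return processed


# Usage: the second copy of "m-1" is ignored.
seen: Set[str] = set()
handled: List[str] = []
batch = [
    {"MessageId": "m-1", "Body": "order 42 created"},
    {"MessageId": "m-2", "Body": "order 43 created"},
    {"MessageId": "m-1", "Body": "order 42 created"},
]
count = handle_once(batch, seen, handled.append)
print(count, handled)  # 2 ['order 42 created', 'order 43 created']
```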

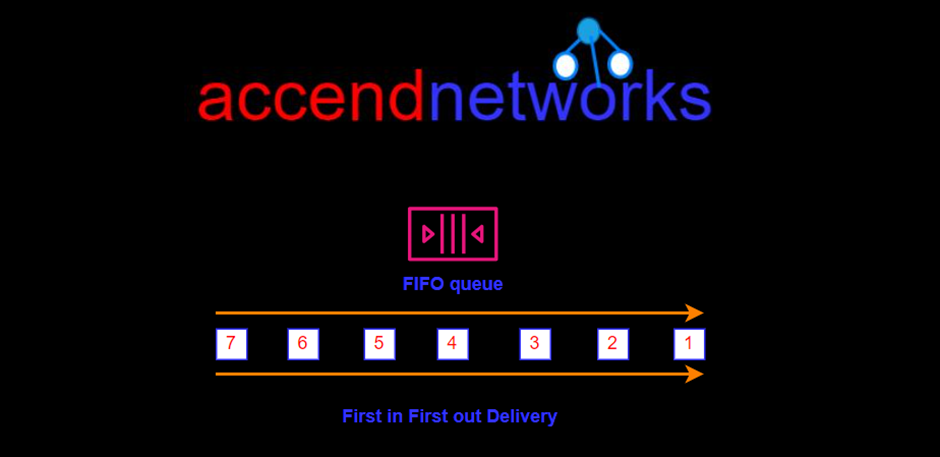

FIFO Queues (First-In-First-Out)

The FIFO queue complements the standard queue. It guarantees ordering: within a message group, messages are received in exactly the order in which they were sent.

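The most important features of a FIFO queue are first-in-first-out delivery and exactly-once processing: a message is delivered once and remains available until a consumer processes and deletes it. FIFO queues do not introduce duplicates; if a message with the same deduplication ID is sent again within the 5-minute deduplication interval, it is accepted but not delivered a second time.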

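FIFO queues are limited to 300 API calls per second per action (send, receive, or delete), or 3,000 messages per second when you batch up to 10 messages per call, unless high-throughput mode is enabled. Otherwise, they have the capabilities of standard queues.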
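To make the FIFO rules concrete, here is a small sketch of the parameters a FIFO queue needs. The helper names `fifo_queue_attributes` and `fifo_send_kwargs` are hypothetical; the attribute and parameter names (`FifoQueue`, `ContentBasedDeduplication`, `MessageGroupId`, `MessageDeduplicationId`) are the ones the SQS API uses. The queue URL and account ID are placeholders, and boto3 is deliberately not imported so the sketch runs without AWS credentials.

```python
import hashlib
from typing import Dict, Optional


def fifo_queue_attributes(content_based_dedup: bool = True) -> Dict[str, str]:
    """CreateQueue attributes for a FIFO queue (values are strings in SQS)."""
    return {
        "FifoQueue": "true",
        "ContentBasedDeduplication": "true" if content_based_dedup else "false",
    }


def fifo_send_kwargs(
    queue_url: str,
    body: str,
    group_id: str,
    dedup_id: Optional[str] = None,
) -> Dict[str, str]:
    """Build keyword arguments for a SendMessage call on a FIFO queue.

    Messages that share a MessageGroupId are delivered in the order sent.
    If dedup_id is not given, it is derived from a SHA-256 hash of the body,
    mirroring what content-based deduplication does on the service side.
    """
    if not queue_url.endswith(".fifo"):
        raise ValueError("FIFO queue names must end with the .fifo suffix")
    return {
        "QueueUrl": queue_url,
        "MessageBody": body,
        "MessageGroupId": group_id,
        "MessageDeduplicationId": dedup_id
        or hashlib.sha256(body.encode("utf-8")).hexdigest(),
    }


# Placeholder account ID and queue name, for illustration only.
kwargs = fifo_send_kwargs(
    "https://sqs.us-east-1.amazonaws.com/123456789012/orders.fifo",
    "order 42 created",
    group_id="customer-7",
)
# With boto3 (not imported here): boto3.client("sqs").send_message(**kwargs)
```

Deriving the deduplication ID from the body means that sending the same text twice within the deduplication interval results in a single delivery, which is usually what you want for retried sends.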

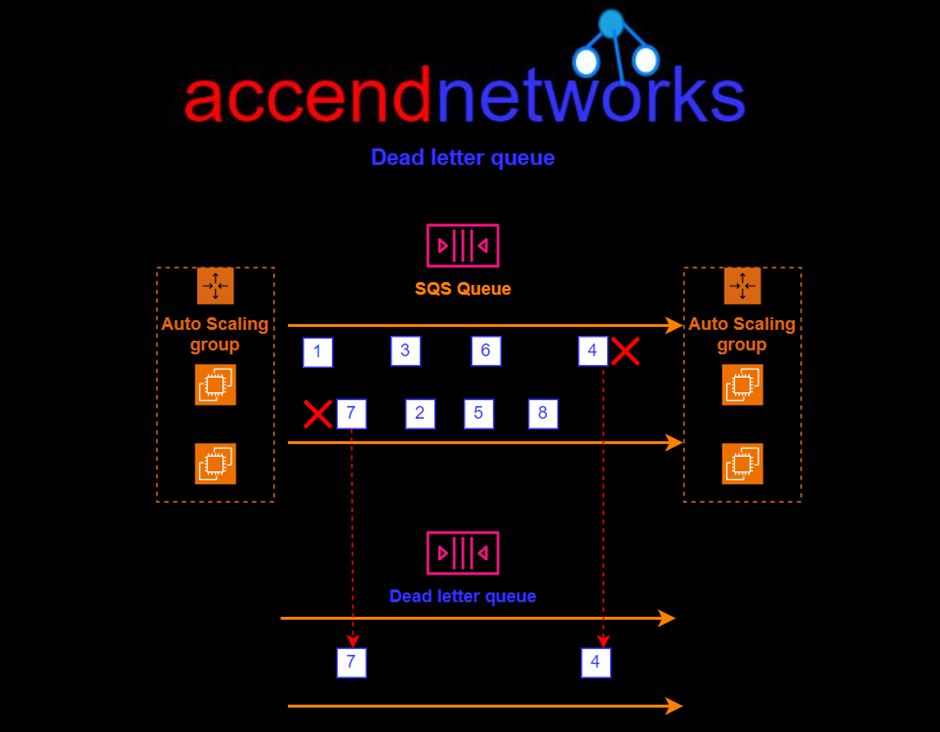

Amazon SQS Dead-Letter Queue

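A dead-letter queue (DLQ) is not a separate type of queue but a configuration for a particular use case: it is a standard or FIFO queue that another queue (the source queue) designates as the destination for failed messages. The main task of the dead-letter queue is handling message failures. It lets you set aside and isolate messages that can't be processed successfully so you can analyze why they failed. The dead-letter queue must be the same type as its source queue: a FIFO queue needs a FIFO dead-letter queue, and a standard queue needs a standard one.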
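A dead-letter queue is attached to a source queue through the source queue's `RedrivePolicy` attribute, which names the DLQ by ARN and sets `maxReceiveCount`. The helper below is a hypothetical sketch that only builds that attribute; the ARN is a placeholder, and applying it with boto3's `set_queue_attributes` is shown as a comment because boto3 is not imported here.

```python
import json
from typing import Dict


def redrive_policy_attributes(dlq_arn: str, max_receive_count: int = 5) -> Dict[str, str]:
    """Queue attributes that attach a dead-letter queue to a source queue.

    Once a message's receive count exceeds max_receive_count without the
    message being deleted, SQS moves it to the queue identified by dlq_arn.
    """
    if max_receive_count < 1:
        raise ValueError("max_receive_count must be at least 1")
    policy = {
        "deadLetterTargetArn": dlq_arn,
        "maxReceiveCount": str(max_receive_count),
    }
    # RedrivePolicy is a JSON-encoded string, not a nested object.
    return {"RedrivePolicy": json.dumps(policy)}


# Placeholder ARN for a hypothetical DLQ named demoqueue-dlq.
attrs = redrive_policy_attributes("arn:aws:sqs:us-east-1:123456789012:demoqueue-dlq", 3)
# With boto3: client.set_queue_attributes(QueueUrl=source_url, Attributes=attrs)
```

A low `maxReceiveCount` moves poison messages aside quickly; a higher one gives transient failures more retries before a message is treated as failed.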

Let’s get our hands dirty.

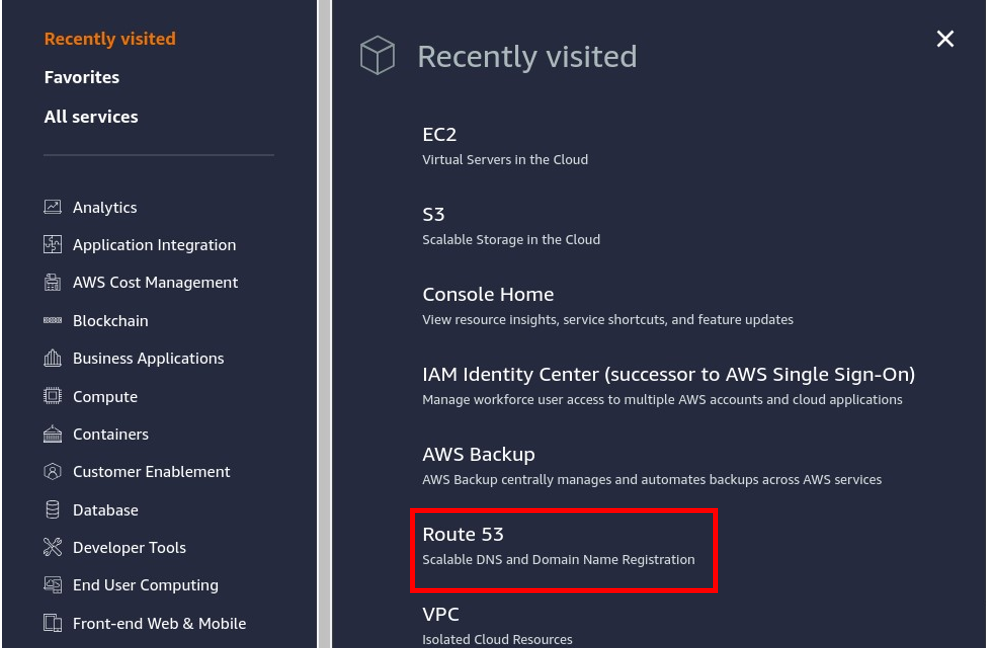

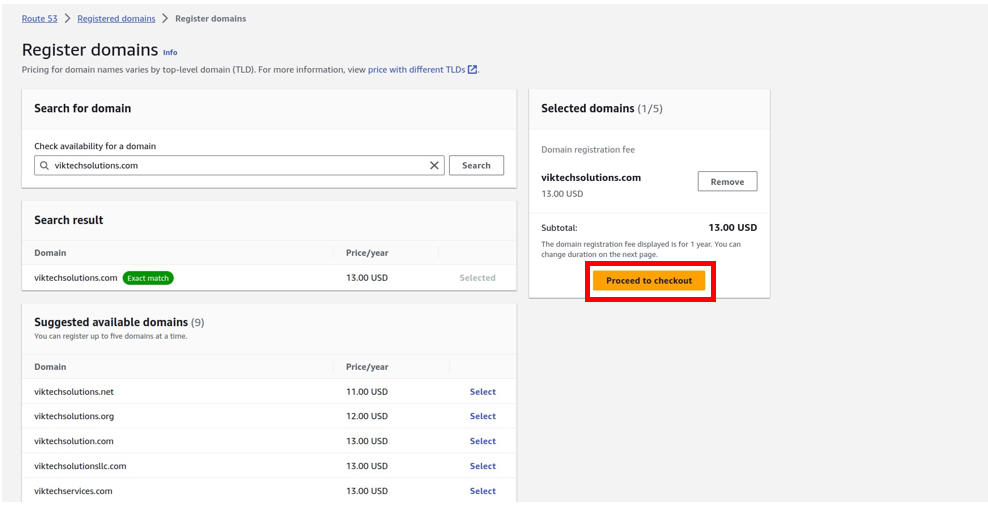

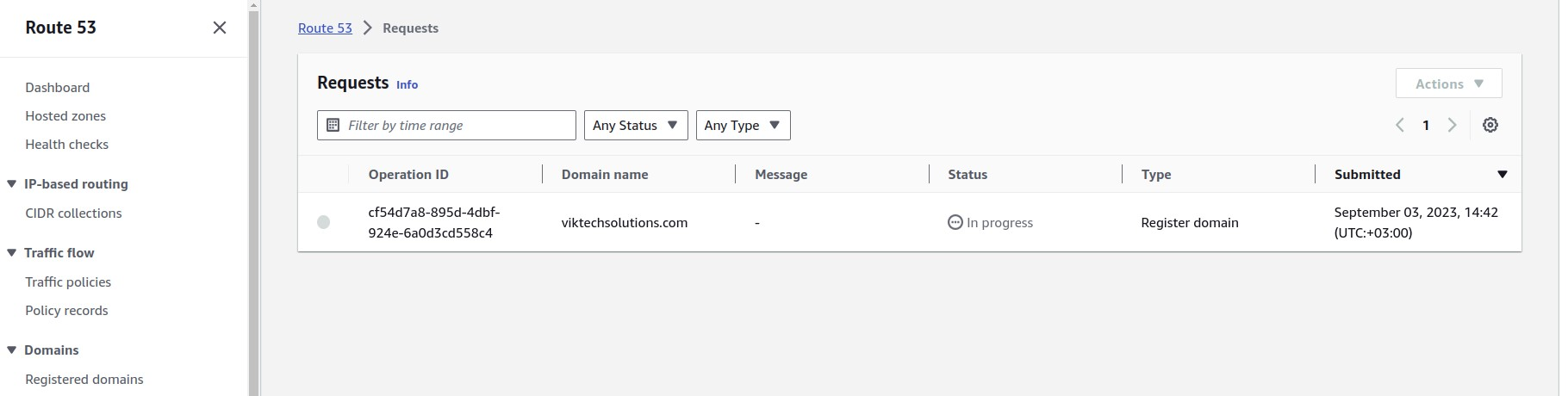

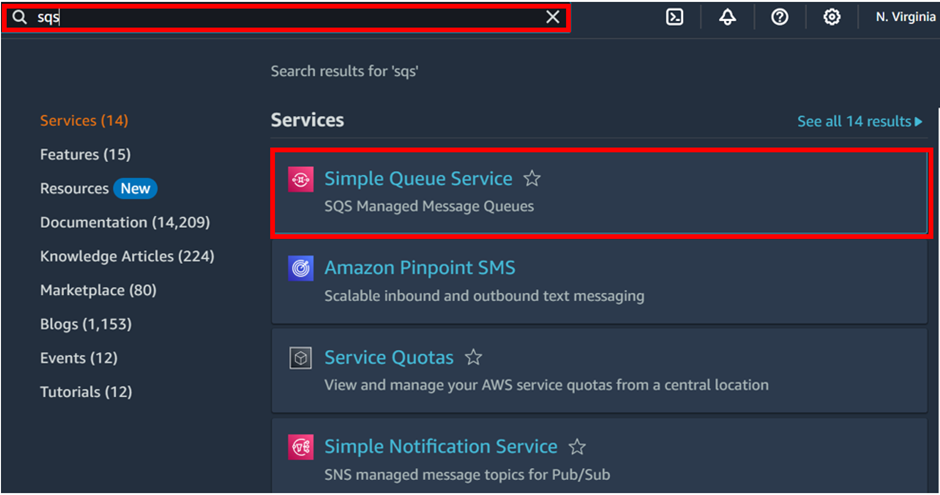

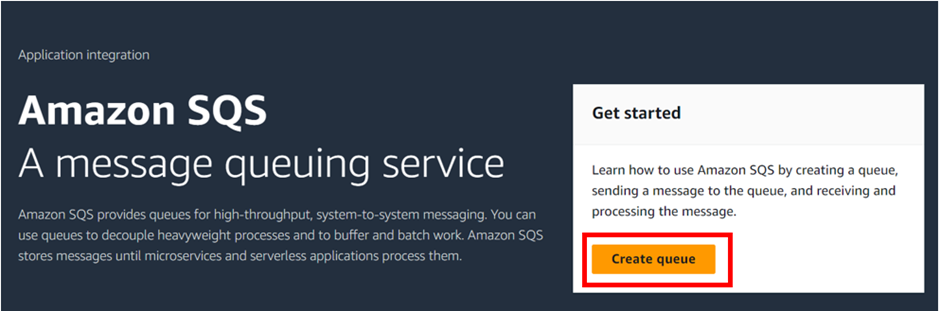

Log in to the AWS Management Console, type SQS in the search box, select SQS under Services, and then click Create queue in the SQS console.

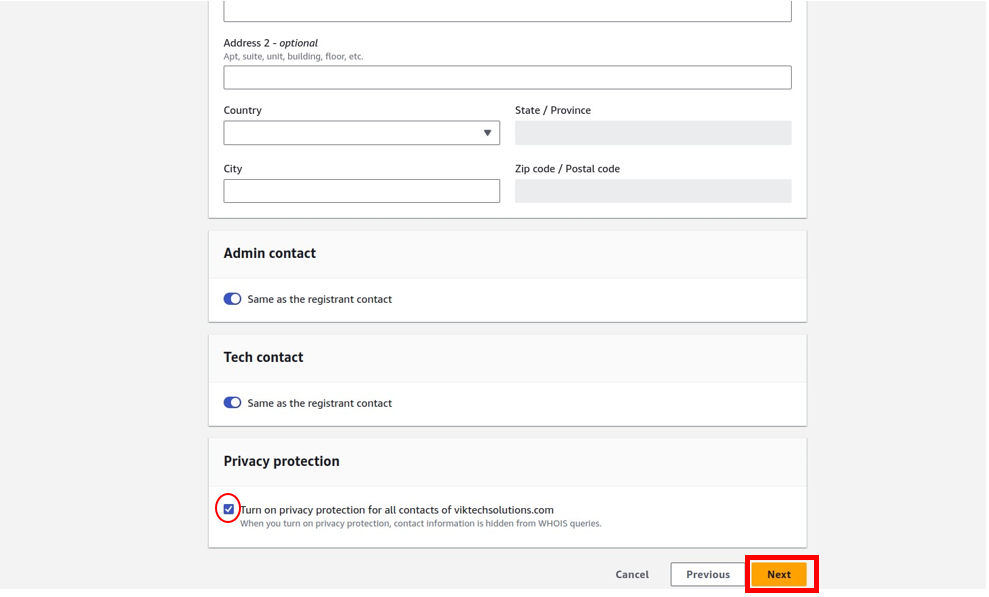

On the Create queue page, the Type setting in the Details section is where you choose your queue type. The default is Standard, so I will create a standard queue. Under Name, enter a queue name such as mydemoqueue (queue names cannot contain spaces), then scroll down.
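The same step can be done from code. This is a sketch, not the article's method: `is_valid_standard_queue_name` and `create_standard_queue` are hypothetical helpers, the name rule encoded in the regex (1 to 80 letters, digits, hyphens, or underscores) applies to standard queues, and the SQS client is passed in (for example `boto3.client("sqs")`) so the function can be tried without AWS credentials.

```python
import re
from typing import Any, Dict, Optional

# Standard queue names: 1 to 80 letters, digits, hyphens, or underscores.
_STANDARD_NAME = re.compile(r"[A-Za-z0-9_-]{1,80}")


def is_valid_standard_queue_name(name: str) -> bool:
    """Return True if `name` is an acceptable standard queue name."""
    return _STANDARD_NAME.fullmatch(name) is not None


def create_standard_queue(
    client: Any, name: str, attributes: Optional[Dict[str, str]] = None
) -> str:
    """Create a standard queue and return its URL.

    `client` is expected to be a boto3 SQS client, e.g. boto3.client("sqs").
    It is passed in so the function can be tried without AWS credentials.
    """
    if not is_valid_standard_queue_name(name):
        raise ValueError(f"invalid queue name: {name!r}")
    kwargs: Dict[str, Any] = {"QueueName": name}
    if attributes:
        kwargs["Attributes"] = attributes
    response = client.create_queue(**kwargs)
    return response["QueueUrl"]


print(is_valid_standard_queue_name("my demoqueue"))  # False: spaces are not allowed
print(is_valid_standard_queue_name("mydemoqueue"))   # True
```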

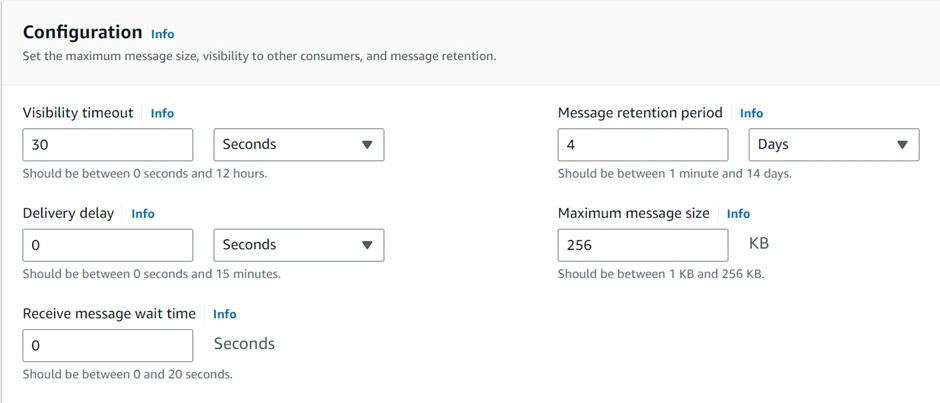

In the Configuration section, you can set the visibility timeout, delivery delay, receive message wait time, and message retention period. I will keep the default settings. Scroll down.
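The four settings in this section map to queue attributes of the same meaning. The sketch below writes the defaults as `CreateQueue` attributes and checks overrides against the allowed ranges; the default values and limits are as documented at the time of writing (check the current SQS quotas before relying on them), and `queue_configuration` is a hypothetical helper.

```python
from typing import Dict, Tuple

# Console defaults for a new standard queue, as CreateQueue attributes.
# SQS expects every attribute value to be a string.
DEFAULT_CONFIGURATION: Dict[str, str] = {
    "VisibilityTimeout": "30",                        # seconds a received message stays hidden
    "DelaySeconds": "0",                              # delivery delay for new messages
    "ReceiveMessageWaitTimeSeconds": "0",             # 0 = short polling
    "MessageRetentionPeriod": str(4 * 24 * 60 * 60),  # 4 days (345600 seconds)
}

# Allowed ranges in seconds (documented SQS limits at the time of writing).
LIMITS: Dict[str, Tuple[int, int]] = {
    "VisibilityTimeout": (0, 43_200),           # up to 12 hours
    "DelaySeconds": (0, 900),                   # up to 15 minutes
    "ReceiveMessageWaitTimeSeconds": (0, 20),   # 1-20 enables long polling
    "MessageRetentionPeriod": (60, 1_209_600),  # 1 minute to 14 days
}


def queue_configuration(**overrides: int) -> Dict[str, str]:
    """Return the default configuration with validated overrides applied."""
    config = dict(DEFAULT_CONFIGURATION)
    for name, value in overrides.items():
        if name not in LIMITS:
            raise KeyError(f"unsupported setting: {name}")
        low, high = LIMITS[name]
        if not low <= value <= high:
            raise ValueError(f"{name} must be between {low} and {high}")
        config[name] = str(value)
    return config


# Example: keep the defaults but enable long polling.
config = queue_configuration(ReceiveMessageWaitTimeSeconds=20)
```

Long polling (a wait time above 0) is a common change from the defaults, because it reduces the number of empty receive responses.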

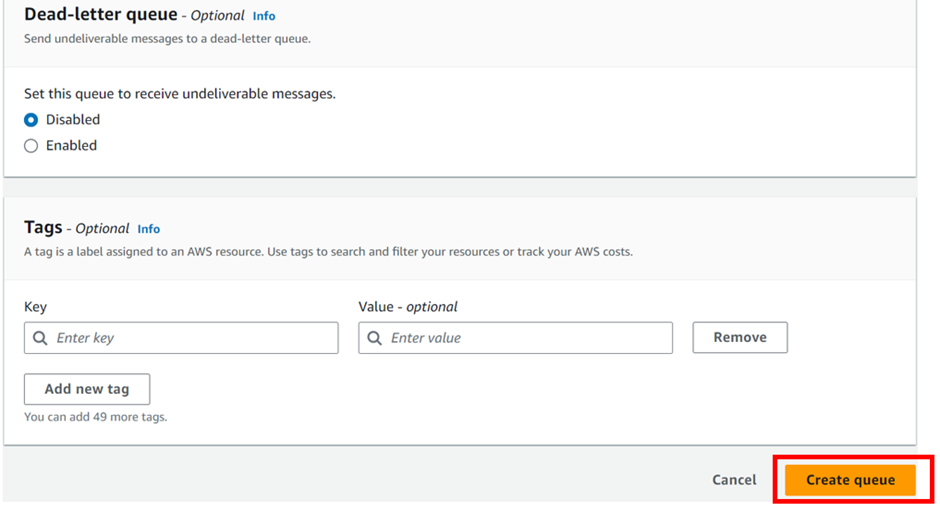

Keep the default encryption settings and scroll down.

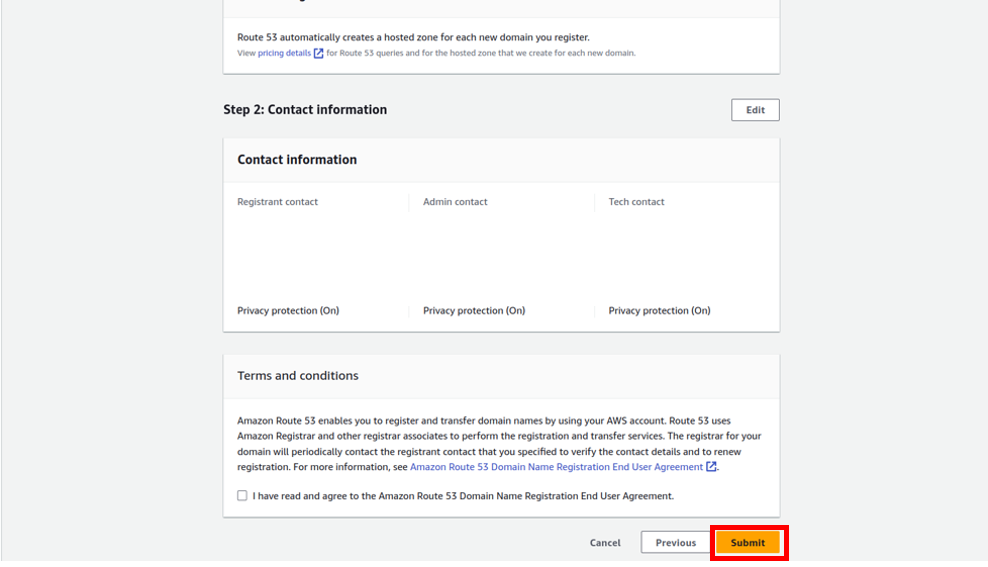

Leave all the other settings at their defaults, scroll down, and click Create queue.

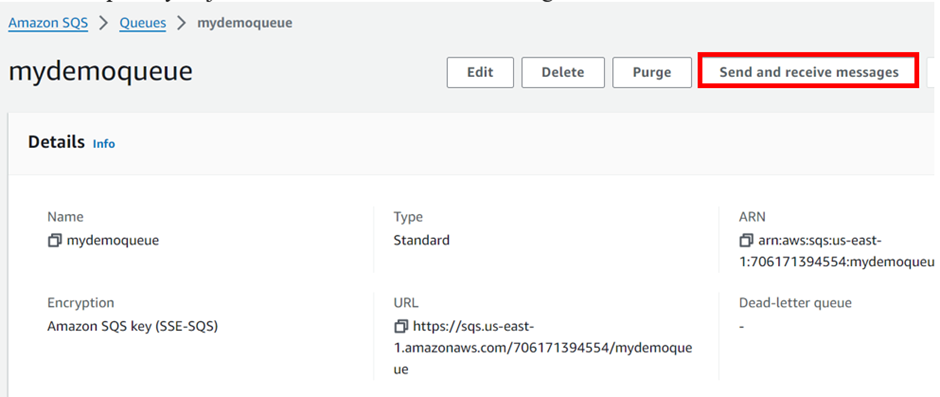

Next, we will send and poll for messages using the queue we just created. Select the queue, then click Send and receive messages.

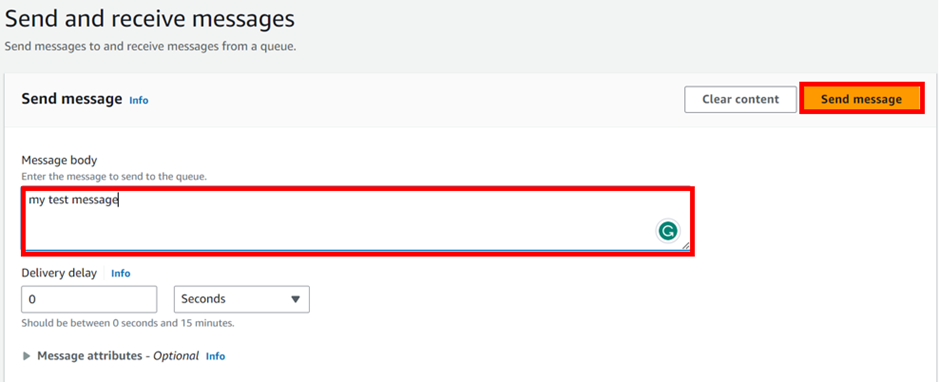

In the Send and receive messages section, enter any text under Message body and then click Send message.

The message was sent successfully.
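Sending a message from code is a single `send_message` call with the queue URL and a body. The wrapper below is a hypothetical sketch: it takes the SQS client as a parameter (for example `boto3.client("sqs")`, not imported here) and returns the `MessageId` that SQS assigns, which is also what the console reports after a successful send.

```python
from typing import Any


def send_text_message(client: Any, queue_url: str, body: str) -> str:
    """Send one message and return the MessageId assigned by SQS.

    `client` is expected to be a boto3 SQS client; `queue_url` is the URL
    returned when the queue was created.
    """
    if not body:
        # SQS rejects empty message bodies, so fail early with a clear error.
        raise ValueError("message body must not be empty")
    response = client.send_message(QueueUrl=queue_url, MessageBody=body)
    return response["MessageId"]
```

With a real client this would be called as `send_text_message(boto3.client("sqs"), queue_url, "Hello from SQS")`.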

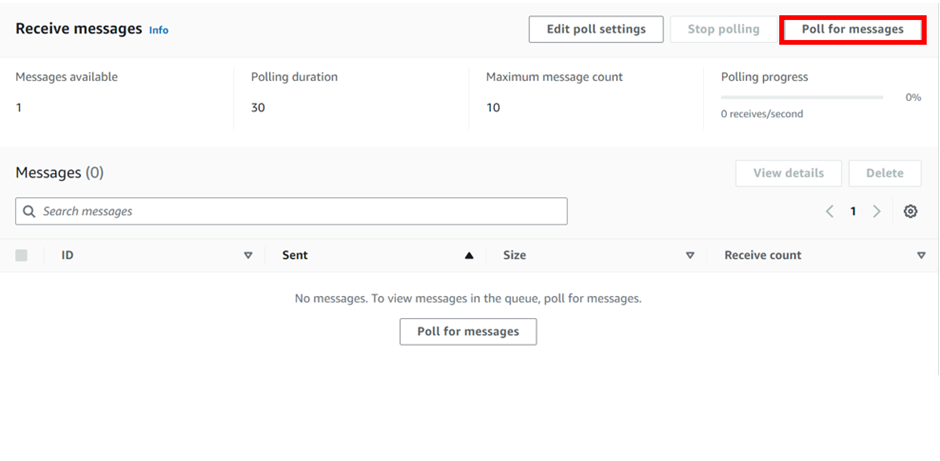

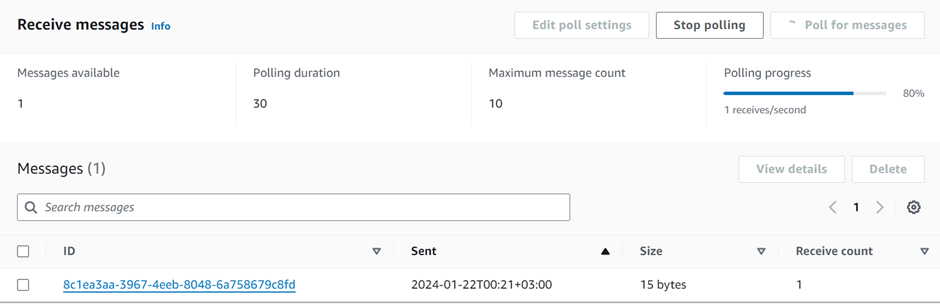

Now let’s poll for our message. Scroll down and click Poll for messages.

Under Receive messages, we can see one message. Clicking it shows that it is the message we sent.
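Receiving a message does not remove it from the queue: a consumer must delete it using the `ReceiptHandle` from the receive call, or it becomes visible again after the visibility timeout. The hypothetical `poll_and_delete` helper below sketches that receive-then-delete loop with boto3-style calls; as in the earlier sketches, the client is passed in rather than imported.

```python
from typing import Any, List


def poll_and_delete(
    client: Any, queue_url: str, wait_seconds: int = 10, max_messages: int = 10
) -> List[str]:
    """Receive up to max_messages, delete each after reading, return bodies.

    wait_seconds > 0 turns on long polling (SQS allows at most 20 seconds).
    Deleting with the ReceiptHandle removes the message; without that step it
    becomes visible again once the visibility timeout expires.
    """
    if not 0 <= wait_seconds <= 20:
        raise ValueError("wait_seconds must be between 0 and 20")
    if not 1 <= max_messages <= 10:
        raise ValueError("max_messages must be between 1 and 10")
    response = client.receive_message(
        QueueUrl=queue_url,
        MaxNumberOfMessages=max_messages,
        WaitTimeSeconds=wait_seconds,
    )
    bodies: List[str] = []
    # The "Messages" key is missing from the response when nothing was received.
    for msg in response.get("Messages", []):
        bodies.append(msg["Body"])
        client.delete_message(QueueUrl=queue_url, ReceiptHandle=msg["ReceiptHandle"])
    return bodies
```

In a real consumer you would process each message before deleting it, so that a crash during processing leaves the message in the queue for another attempt.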

This brings us to the end of this blog. When you are done, pull down (delete) the queue so it doesn't linger in your account.

Stay tuned for more.

If you have any questions concerning this article or have an AWS project that requires our assistance, please reach out to us by leaving a comment below or emailing us at sales@accendnetworks.com.

Thank you!