How To Configure AWS Transit Gateway

Network architecture in the cloud can quickly become complex as the number of VPCs, accounts, regions, on-premises data centers, and edge locations grows. Transit Gateway allows customers to simplify the network layout and the connectivity between all these environments.

What is AWS Transit Gateway?

AWS Transit Gateway serves as a central hub that connects VPCs and on-premises networks, eliminating the need for individual connections.

With Transit Gateway, you only need to create connections from the VPCs, VPNs, and Direct Connect links to the Transit Gateway. Transit Gateway will then dynamically route traffic between all the connected networks.
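To make the hub idea concrete, here is a small toy model of a hub route table. It is only a sketch: the class name, attachment names, and CIDR ranges are invented for illustration, and the real service is configured through AWS, not through code like this. It shows the general principle that each attached network advertises its address range to the hub, and the hub forwards a packet to the attachment with the most specific matching prefix.

```python
import ipaddress


class HubRouteTable:
    """Toy model of a hub route table: CIDR prefix -> attachment name."""

    def __init__(self):
        self.routes = {}

    def propagate(self, attachment, cidr):
        # An attached network advertises its address range to the hub.
        self.routes[ipaddress.ip_network(cidr)] = attachment

    def lookup(self, destination_ip):
        # Pick the most specific (longest) prefix containing the address.
        addr = ipaddress.ip_address(destination_ip)
        matches = [net for net in self.routes if addr in net]
        if not matches:
            return None
        best = max(matches, key=lambda net: net.prefixlen)
        return self.routes[best]


# Hypothetical attachments and address ranges.
table = HubRouteTable()
table.propagate("vpc-a", "10.0.0.0/16")
table.propagate("vpc-b", "10.1.0.0/16")
table.propagate("vpn-office", "192.168.0.0/16")

print(table.lookup("10.1.2.3"))
print(table.lookup("192.168.10.5"))
```

Adding another VPC to this model is a single `propagate` call on the hub, which mirrors why a hub-and-spoke layout is easier to grow than a mesh.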

Why Choose AWS Transit Gateway?

- Simplifies Connectivity: Transit Gateway lets you connect to one central gateway, making it easier to interconnect all your VPCs and on-site networks, regardless of how many accounts are connected. Transit Gateway also supports dynamic and static layer 3 routing between Amazon VPCs and VPNs.

- Facilitates Greater Control and Monitoring: AWS Transit Gateway allows users to monitor and manage all their Amazon VPC and edge connections in one place. The service makes it easier to find issues and handle events as they come. You may also enable Equal Cost Multipath (ECMP) between these connections to load balance between paths and increase bandwidth.

- Bandwidth On Demand: Obtain the network bandwidth you need to move terabytes of data at a time for your applications, or even migrate into the cloud. You may add Amazon VPCs to your network without needing to provision extra connections from on-site networks.

- Highly Secure: Through its integration with IAM, you can control who has access to Transit Gateway. Create and manage user accounts and groups, and set their permissions centrally.
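The ECMP point in the list above is easier to picture with a small sketch. The general idea of equal-cost multipath is that each flow is hashed to one of several equally good paths, so a single flow stays on one path while different flows spread across all of them. The code below is a generic illustration of that idea, not AWS's actual hashing scheme; the function name, tunnel names, and flow tuple layout are assumptions made for this example.

```python
import hashlib


def pick_path(flow, paths):
    """Map a flow (src IP, dst IP, protocol, src port, dst port) to one path.

    The same flow always hashes to the same path, which keeps its packets
    in order; different flows are spread across the available paths.
    """
    key = "|".join(str(field) for field in flow).encode()
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:4], "big") % len(paths)
    return paths[index]


# Hypothetical equal-cost VPN tunnels towards the same on-premises network.
paths = ["vpn-tunnel-1", "vpn-tunnel-2"]
flow = ("10.0.1.5", "192.168.1.9", 6, 44321, 443)

print(pick_path(flow, paths))
```

Because the choice is per flow, total bandwidth grows with the number of paths, but any single flow is still limited to the capacity of the one path it lands on.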

Pricing

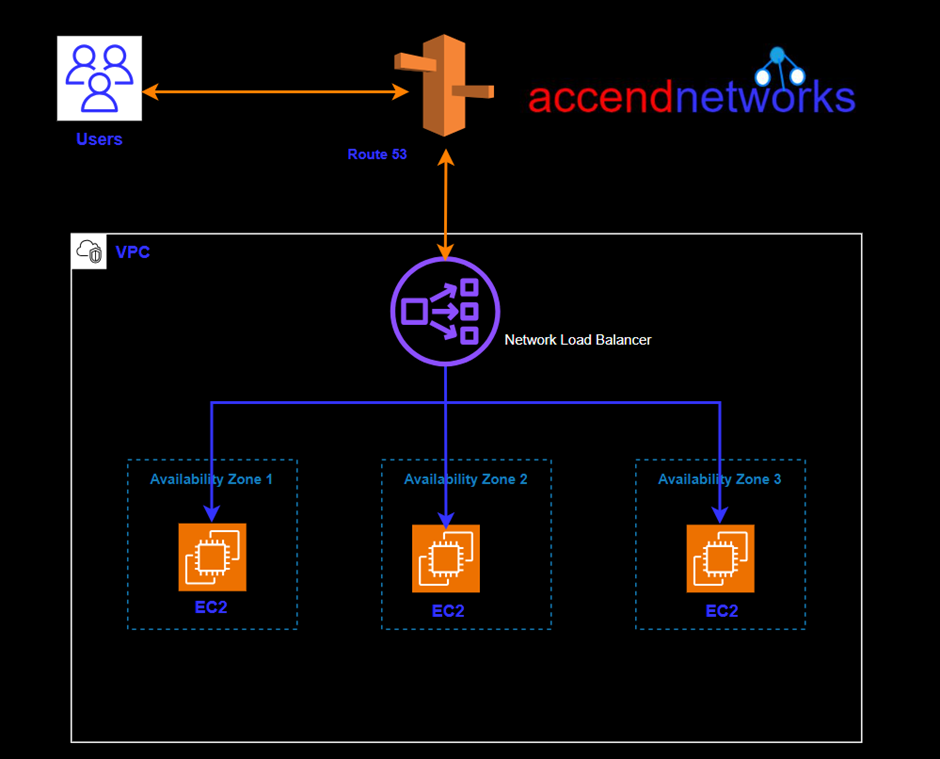

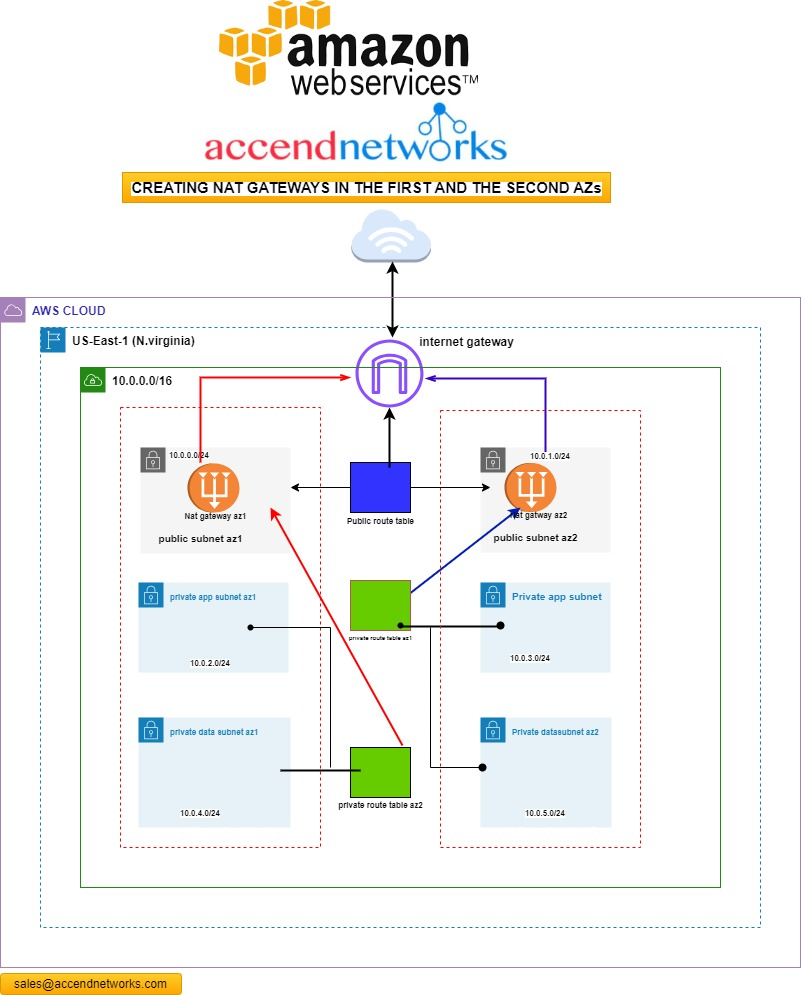

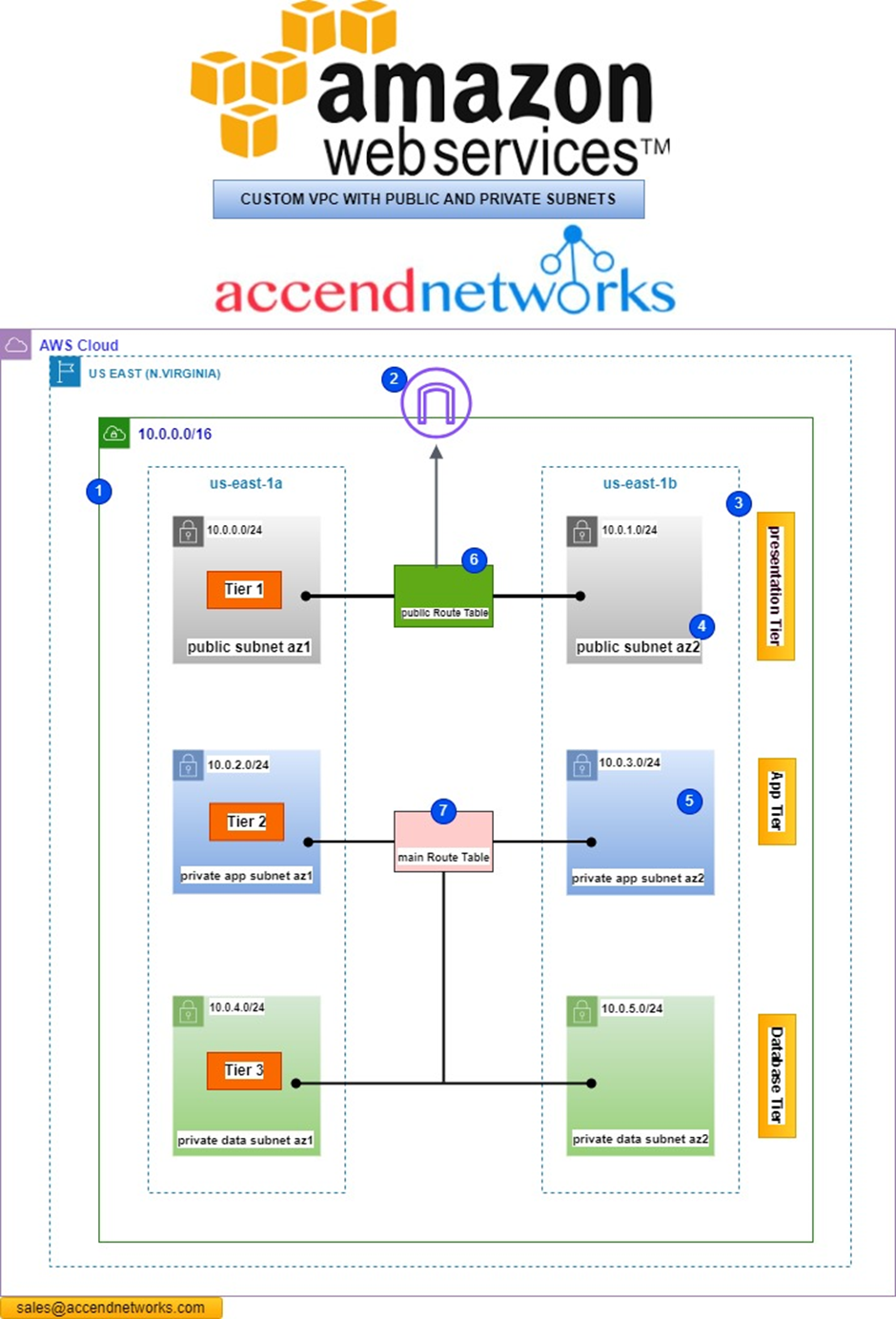

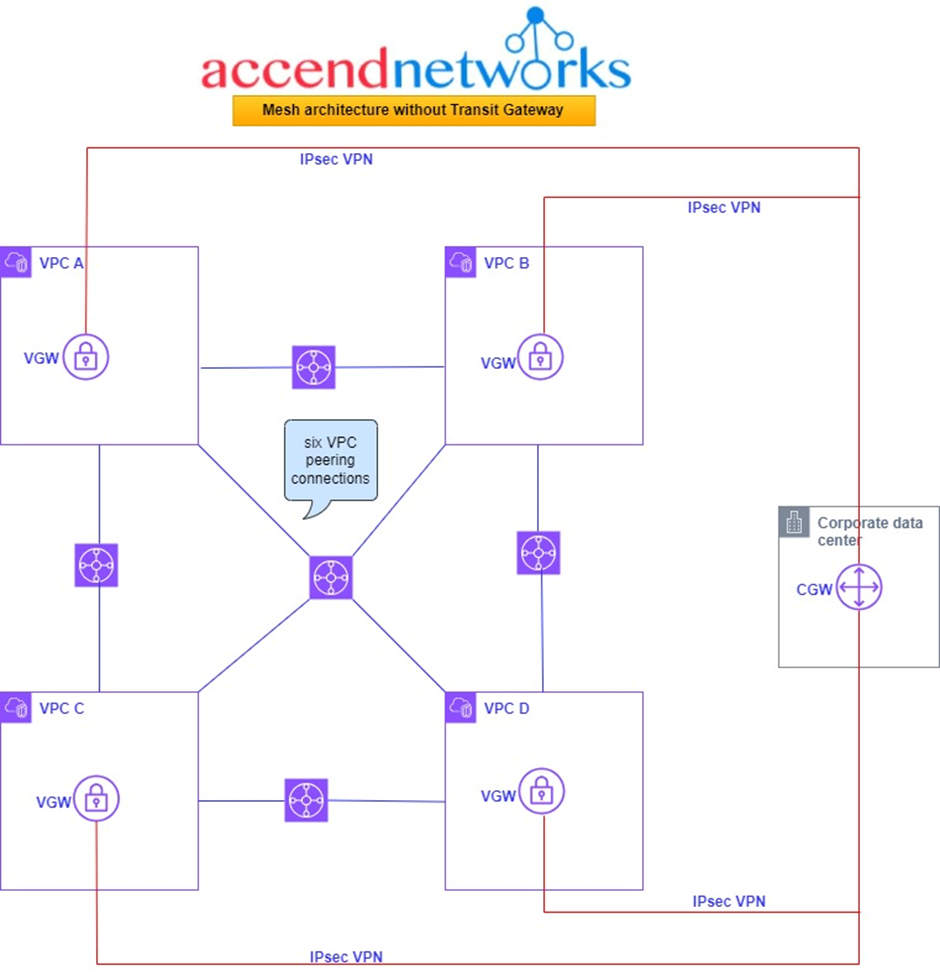

So, as we can see, AWS Transit Gateway is a very useful service. AWS further describes it as a cloud router: it connects VPCs and on-premises locations together using a central hub. To show how Transit Gateway simplifies connectivity, we will look at different scenarios. First, we will consider a fully meshed architecture without AWS Transit Gateway. This will help us understand the problem Transit Gateway is trying to solve.

When we have many VPCs and on-premises locations and no Transit Gateway, the VPC peering connections we need to set up can become extremely complex.

In this architecture, we have four Virtual Private Clouds (VPCs), labeled A, B, C, and D, all interconnected through VPC peering links. Because VPC peering is not transitive, every pair of VPCs needs its own link, so four VPCs already require six peering links. In general, a full mesh of n VPCs needs n(n-1)/2 links, which means the number of links grows quadratically as VPCs are added: ten VPCs would need 45.
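The growth in peering links is simple arithmetic, and a few lines of Python make it easy to compare against a hub design. This is only an illustrative calculation; the function names are made up for this example.

```python
def peering_links(num_vpcs):
    """Links needed for a full mesh: one per pair of VPCs."""
    return num_vpcs * (num_vpcs - 1) // 2


def hub_attachments(num_vpcs):
    """Attachments needed with a central hub: one per VPC."""
    return num_vpcs


for n in (4, 10, 50):
    print(n, "VPCs:", peering_links(n), "peering links vs",
          hub_attachments(n), "hub attachments")
```

For the four VPCs in this example, that is six peering links against four attachments, and the gap widens quickly as the network grows.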

In this setup, we have six peering connections between the VPCs, and the corporate office is linked in through a customer gateway. Here is where it gets tricky: the corporate office has to be connected to each VPC using Site-to-Site VPNs. This means placing a virtual private gateway in each VPC and creating a separate VPN connection from it to the customer gateway. In the end, we are dealing with four VPN connections, and it gets even more complicated if we want redundancy.

If we dive a bit deeper into the problem, adding redundancy means we need an extra customer gateway and twice the number of those VPN connections. The more we look into it, the more complex it becomes, turning our setup into a really tangled network.
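The VPN side of the problem can be counted the same way. The sketch below assumes one VPN connection per VPC per customer gateway without a hub, and, as an assumption for comparison, one VPN connection per customer gateway when everything terminates on a central hub. The function names are hypothetical.

```python
def vpn_connections_without_hub(num_vpcs, num_customer_gateways=1):
    """Each VPC needs its own VPN connection to every customer gateway."""
    return num_vpcs * num_customer_gateways


def vpn_connections_with_hub(num_customer_gateways=1):
    """Assumption: each customer gateway needs one connection to the hub."""
    return num_customer_gateways


print(vpn_connections_without_hub(4))      # single customer gateway
print(vpn_connections_without_hub(4, 2))   # redundant customer gateways
print(vpn_connections_with_hub(2))
```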

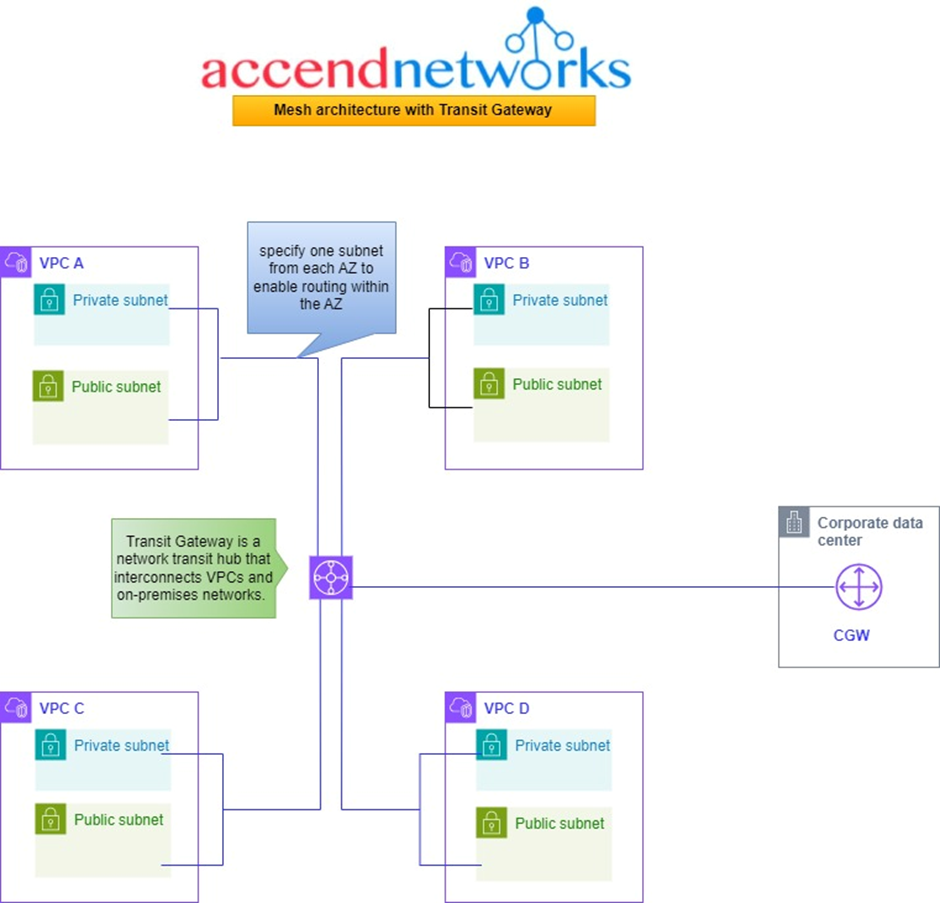

Now, let’s check out the same setup but using a Transit Gateway. This other option can make all the connections simpler and easier to deal with.

In this situation, as seen from the above architecture, we have the same four VPCs and a corporate office. Now, let’s simplify things by putting a Transit Gateway in the middle. It acts like the main hub that connects all the VPCs and the on-premises networks.

So, each of these VPCs gets attached to the Transit Gateway. For each attachment, you choose one subnet from every Availability Zone you want to use, and Transit Gateway uses that subnet to route traffic to and from the other subnets in the same zone. It's like giving each zone its own entry point to the hub.
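As a rough sketch of what a VPC attachment looks like in code, the example below builds the parameters for the EC2 `create_transit_gateway_vpc_attachment` call in boto3. All IDs, the region, and the helper names are placeholders invented for illustration. Keying the subnets by Availability Zone is a design choice of this sketch to enforce the "one subnet per zone" rule described above.

```python
def vpc_attachment_params(transit_gateway_id, vpc_id, subnet_by_az):
    """Build keyword arguments for create_transit_gateway_vpc_attachment.

    subnet_by_az maps an Availability Zone name to exactly one subnet ID,
    so a zone cannot accidentally be given two subnets.
    """
    if not subnet_by_az:
        raise ValueError("choose at least one subnet")
    return {
        "TransitGatewayId": transit_gateway_id,
        "VpcId": vpc_id,
        "SubnetIds": [subnet_by_az[az] for az in sorted(subnet_by_az)],
    }


def attach_vpc(params, region="us-east-1"):
    """Create the attachment. Needs boto3 and AWS credentials (assumed)."""
    import boto3  # imported here so the helper above works without boto3

    ec2 = boto3.client("ec2", region_name=region)
    response = ec2.create_transit_gateway_vpc_attachment(**params)
    return response["TransitGatewayVpcAttachment"]["TransitGatewayAttachmentId"]


# Placeholder IDs, not real resources.
params = vpc_attachment_params(
    "tgw-0123456789abcdef0",
    "vpc-0aaaaaaaaaaaaaaaa",
    {"us-east-1b": "subnet-0bbbbbbbbbbbbbbbb",
     "us-east-1a": "subnet-0aaaaaaaaaaaaaaaa"},
)
print(params)
```

Calling `attach_vpc(params)` would repeat once per VPC. The VPC route tables still need routes pointing at the Transit Gateway, which this sketch does not cover.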

Now, there's also the corporate office, our customer data center, which has a customer gateway that connects to the Transit Gateway as well. That's pretty much the setup. Through this central hub, which acts as a cloud router, the office can reach any of these VPCs.
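The office connection can be sketched with boto3 as well, using the EC2 calls `create_customer_gateway` and `create_vpn_connection`. The public IP address (taken from a documentation range), the BGP ASN, the IDs, the region, and the helper names are all assumptions for illustration. The key detail is that the VPN connection targets a `TransitGatewayId` instead of a virtual private gateway in one particular VPC.

```python
import ipaddress


def customer_gateway_params(public_ip, bgp_asn=65000):
    """Keyword arguments for create_customer_gateway (the office router)."""
    return {
        "BgpAsn": bgp_asn,
        "PublicIp": str(ipaddress.ip_address(public_ip)),  # validates the IP
        "Type": "ipsec.1",
    }


def vpn_connection_params(customer_gateway_id, transit_gateway_id,
                          static_routes_only=False):
    """Keyword arguments for create_vpn_connection, terminating on the hub."""
    return {
        "CustomerGatewayId": customer_gateway_id,
        "TransitGatewayId": transit_gateway_id,
        "Type": "ipsec.1",
        "Options": {"StaticRoutesOnly": static_routes_only},
    }


def connect_office(public_ip, transit_gateway_id, region="us-east-1"):
    """Create both resources. Needs boto3 and AWS credentials (assumed)."""
    import boto3  # imported here so the helpers above work without boto3

    ec2 = boto3.client("ec2", region_name=region)
    cgw = ec2.create_customer_gateway(**customer_gateway_params(public_ip))
    cgw_id = cgw["CustomerGateway"]["CustomerGatewayId"]
    vpn = ec2.create_vpn_connection(
        **vpn_connection_params(cgw_id, transit_gateway_id))
    return vpn["VpnConnection"]["VpnConnectionId"]


print(customer_gateway_params("203.0.113.10"))
print(vpn_connection_params("cgw-0123456789abcdef0", "tgw-0123456789abcdef0"))
```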

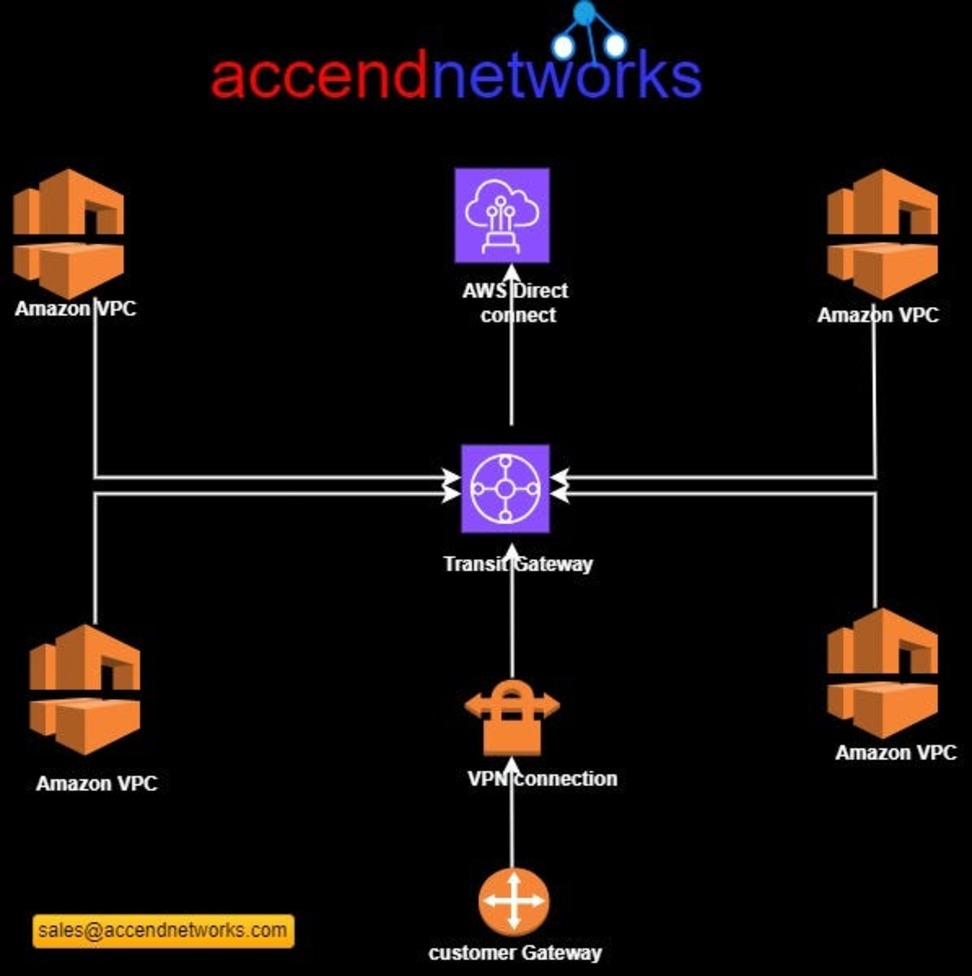

Transit Gateways (TGWs) can be attached to VPNs, Direct Connect Gateways, third-party appliances, and even other Transit Gateways in different regions or accounts.

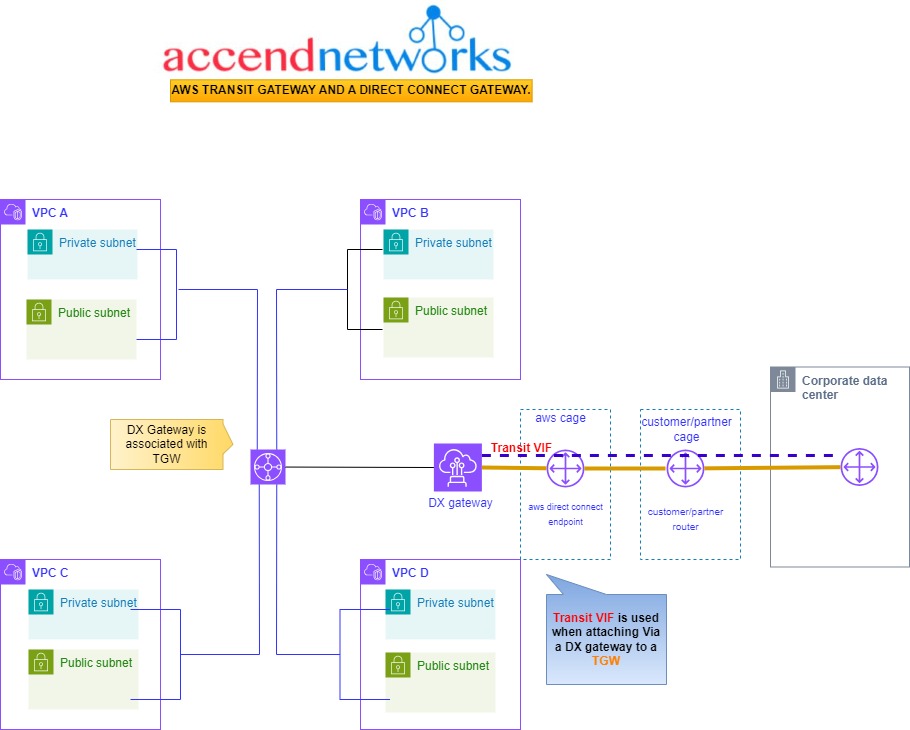

AWS Transit Gateway also integrates with Direct Connect (DX) Gateway, enabling transitive routing for growing companies with multiple VPCs.

Now, the DX Gateway has a connection with the Transit Gateway. This connection is called an association. The physical Direct Connect link runs back to the corporate office, and on top of it we create a transit virtual interface (Transit VIF). This is a special type of virtual interface used only when you're connecting a DX Gateway to a Transit Gateway.

This setup supports full transitive routing between on-premises networks, the Transit Gateway, and all the connected VPCs. When your company grows and uses more VPCs in different regions, and you want them all to connect smoothly, Transit Gateway becomes very helpful.
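The association between the DX Gateway and the Transit Gateway can be sketched with the boto3 Direct Connect call `create_direct_connect_gateway_association`. The gateway IDs, prefixes, region, and helper names below are placeholders. The allowed prefixes are the address ranges advertised towards on-premises, and which ones to list depends on your own network plan.

```python
import ipaddress


def dx_association_params(dx_gateway_id, transit_gateway_id, allowed_prefixes):
    """Keyword arguments for create_direct_connect_gateway_association."""
    return {
        "directConnectGatewayId": dx_gateway_id,
        "gatewayId": transit_gateway_id,
        "addAllowedPrefixesToDirectConnectGateway": [
            # ip_network raises ValueError on a malformed CIDR.
            {"cidr": str(ipaddress.ip_network(prefix))}
            for prefix in allowed_prefixes
        ],
    }


def associate(params, region="us-east-1"):
    """Create the association. Needs boto3 and AWS credentials (assumed)."""
    import boto3  # imported here so the helper above works without boto3

    dx = boto3.client("directconnect", region_name=region)
    response = dx.create_direct_connect_gateway_association(**params)
    return response["directConnectGatewayAssociation"]["associationId"]


# Placeholder IDs and prefixes, not real resources.
params = dx_association_params(
    "11111111-2222-3333-4444-555555555555",
    "tgw-0123456789abcdef0",
    ["10.0.0.0/16", "10.1.0.0/16"],
)
print(params)
```

The Transit VIF itself is created separately on the Direct Connect connection and is not shown here.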

Conclusion

AWS Transit Gateway makes cloud network setups simpler. It acts as a hub connecting your VPCs, VPNs, and data centers, making them easier to manage. It does away with confusing mesh setups, scales easily, and keeps your network organized and secure.

As your cloud presence grows, Transit Gateway is the key to keeping your network simple, efficient, and secure.

If you have any questions concerning this article or have an AWS project that requires our assistance, please reach out to us by leaving a comment below or email us at sales@accendnetworks.com.

Thank you!