How To Filter Traffic with AWS Web Application Firewall - Part One

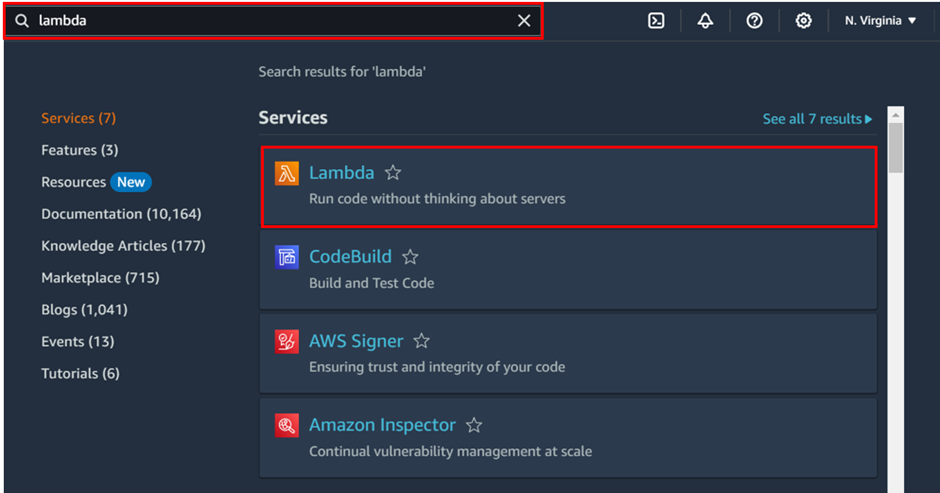

What is AWS Web Application Firewall?

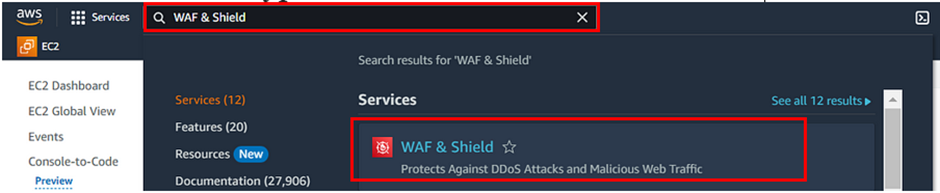

AWS WAF (Web Application Firewall) is a managed firewall service that helps protect your web applications against common web exploits that can affect availability or compromise security.

A WAF protects web applications by filtering and monitoring HTTP traffic between a web application and the internet. It typically protects against attacks such as cross-site request forgery (CSRF), cross-site scripting (XSS), file inclusion, and SQL injection, among others.
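AWS WAF has dedicated match statements for two of the attacks named above, SQL injection and XSS. The sketch below builds two rule definitions as Python dictionaries shaped like the wafv2 API's `Rule` structure. The rule names, priorities, metric names, and the choice of which request component to inspect are hypothetical examples, not a recommended configuration.

```python
from __future__ import annotations


def visibility(metric_name: str) -> dict:
    # Every wafv2 rule needs a VisibilityConfig block (metrics + sampling).
    return {
        "SampledRequestsEnabled": True,
        "CloudWatchMetricsEnabled": True,
        "MetricName": metric_name,
    }


def attack_signature_rules() -> list[dict]:
    # URL-decode the inspected component first, so an encoded payload such as
    # %27%20OR%201%3D1 is inspected in its decoded form.
    url_decode = [{"Priority": 0, "Type": "URL_DECODE"}]
    return [
        {
            "Name": "block-sqli-query-string",  # hypothetical name
            "Priority": 10,
            "Statement": {
                "SqliMatchStatement": {
                    "FieldToMatch": {"QueryString": {}},
                    "TextTransformations": url_decode,
                }
            },
            "Action": {"Block": {}},
            "VisibilityConfig": visibility("BlockSqliQueryString"),
        },
        {
            "Name": "block-xss-query-args",  # hypothetical name
            "Priority": 20,
            "Statement": {
                "XssMatchStatement": {
                    "FieldToMatch": {"AllQueryArguments": {}},
                    "TextTransformations": url_decode,
                }
            },
            "Action": {"Block": {}},
            "VisibilityConfig": visibility("BlockXssQueryArgs"),
        },
    ]
```

These dictionaries only describe rules; they take effect once they are placed in a web ACL that is attached to a protected resource.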

How does it work?

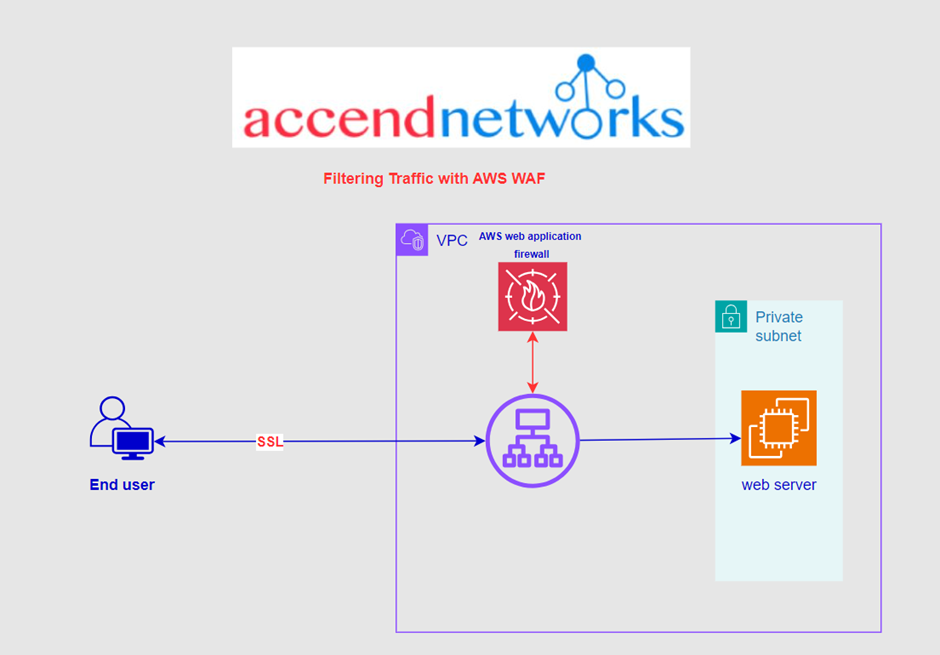

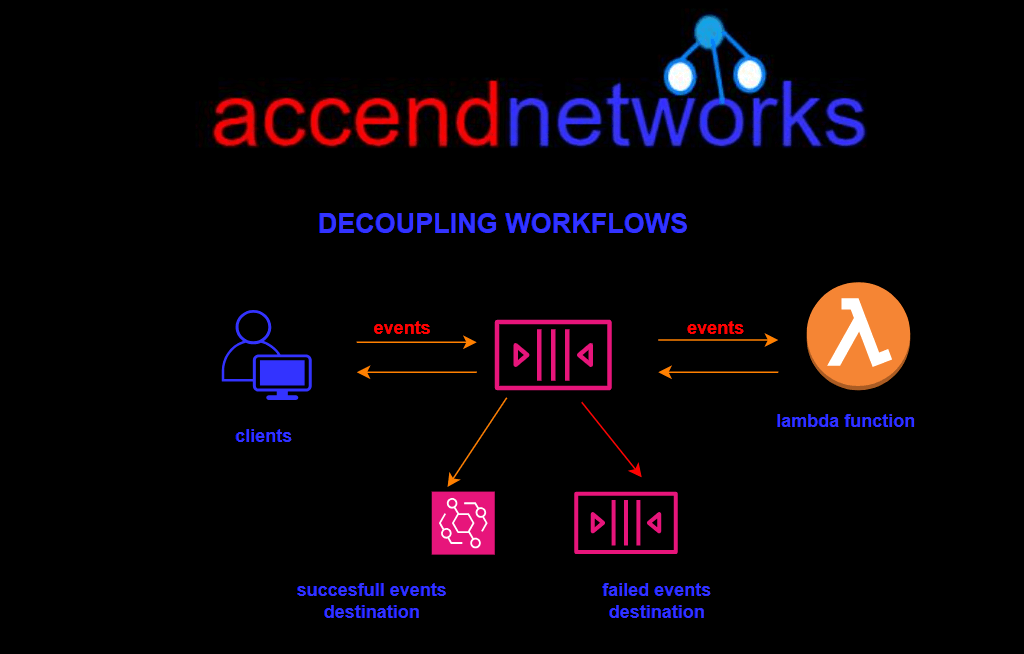

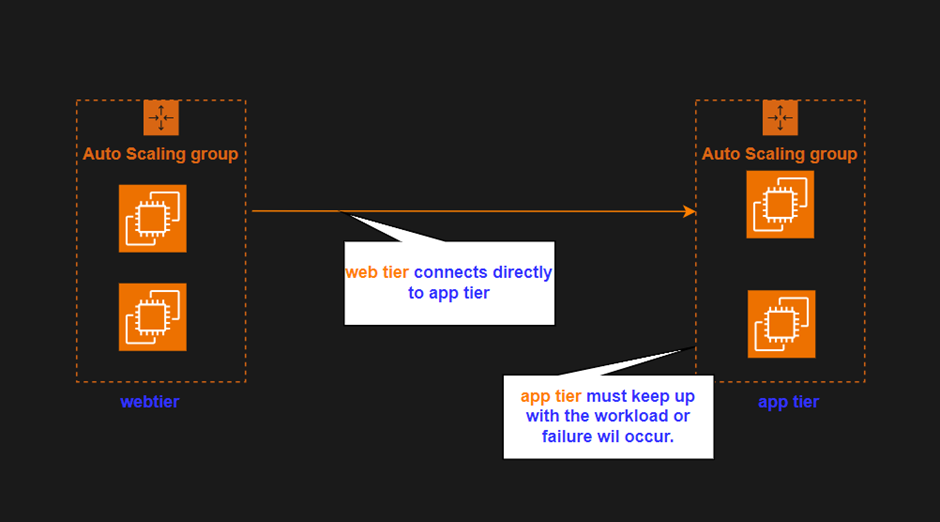

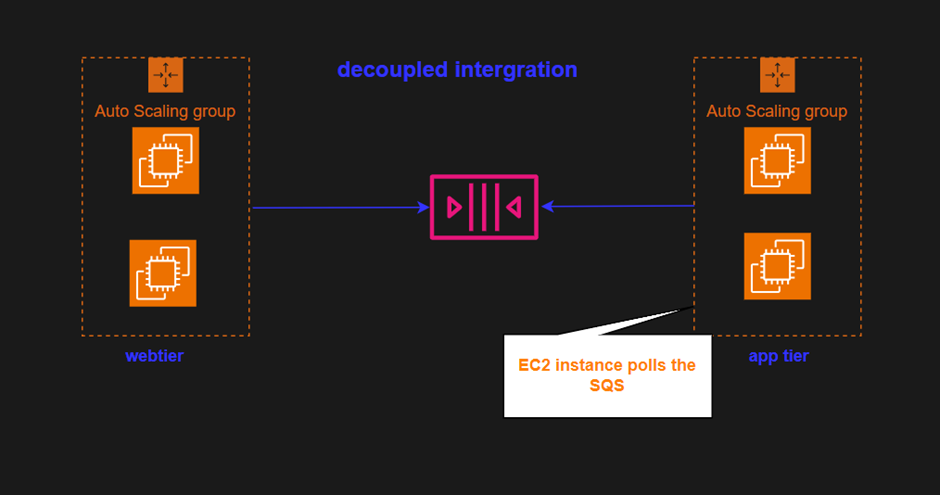

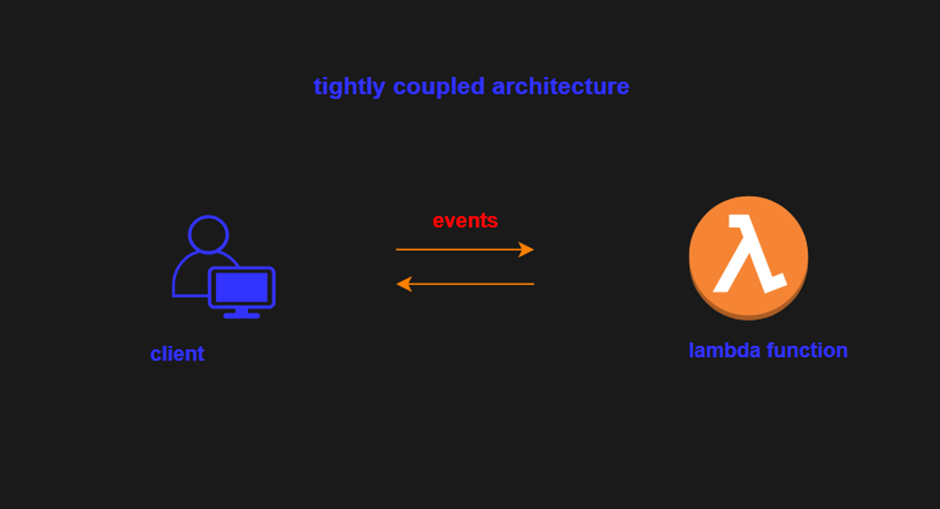

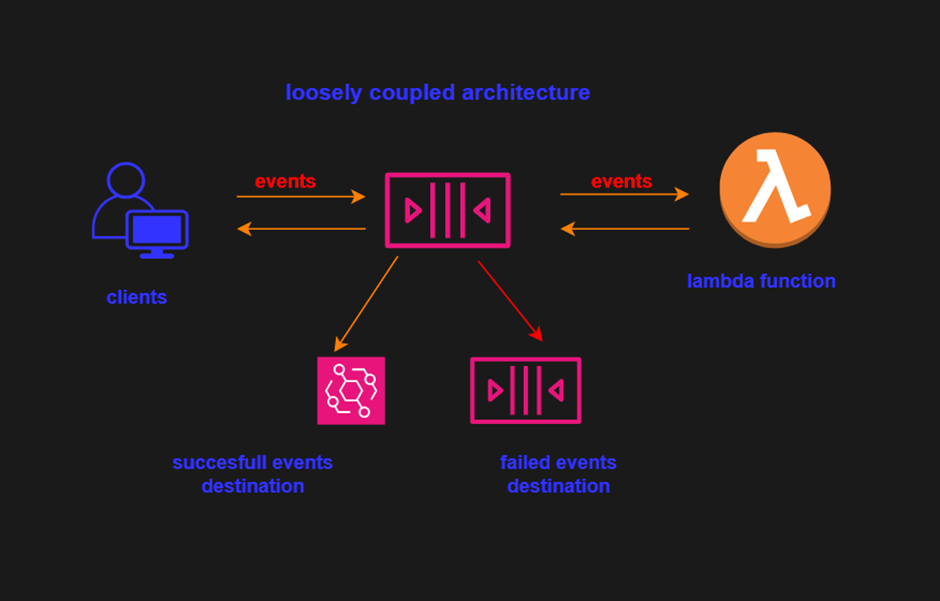

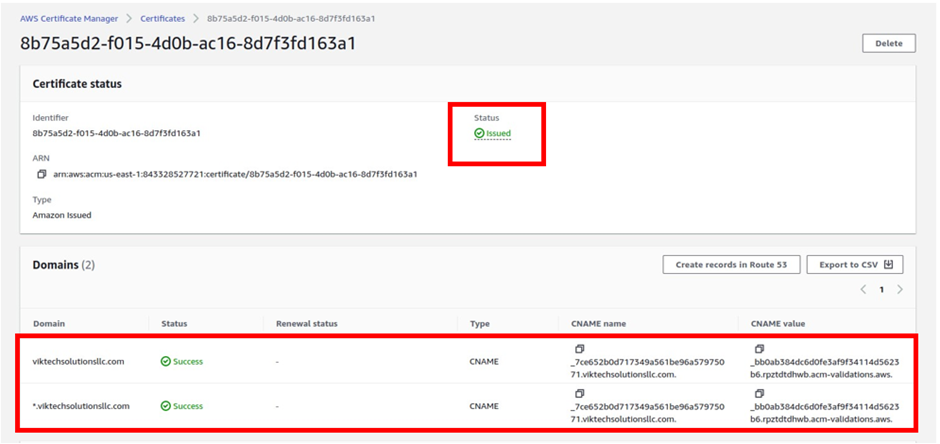

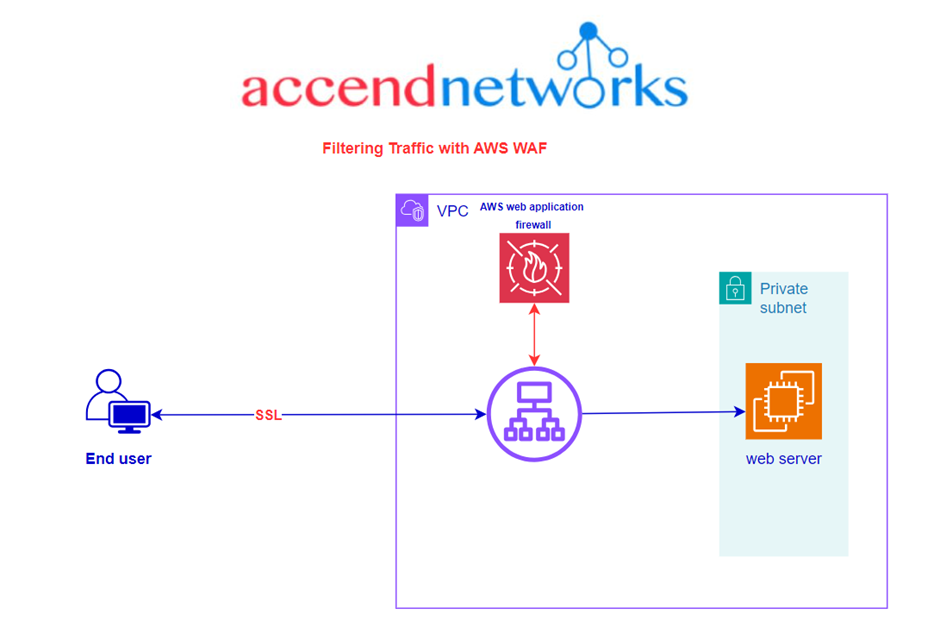

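Deploying a WAF for a web application places a shield between the application and the internet. Conceptually, a WAF works like a reverse proxy: clients reach the server only after their requests pass through the WAF, so the server is not directly exposed. AWS WAF is built into the services it protects, so you attach it to a resource instead of running a separate proxy.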

A WAF operates through a set of rules, often called policies. These policies protect against vulnerabilities in the application by filtering out malicious traffic. In AWS WAF, rules are grouped into a web access control list (web ACL).
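The rule-based filtering described above can be modeled in a few lines. This is a simplified, hypothetical model for illustration, not AWS WAF's implementation: rules are checked in ascending priority order, `ALLOW` and `BLOCK` stop evaluation, `COUNT` only records the match and continues, and the web ACL's default action applies when no terminating rule matches. The request format (a dict with a `path` key) and the rule names are assumptions.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Rule:
    name: str
    priority: int
    matches: Callable[[dict], bool]  # predicate over a hypothetical request dict
    action: str  # "ALLOW", "BLOCK" or "COUNT"


@dataclass
class WebACL:
    rules: list[Rule]
    default_action: str  # "ALLOW" or "BLOCK"
    counts: dict[str, int] = field(default_factory=dict)

    def evaluate(self, request: dict) -> str:
        # Lowest priority number is evaluated first.
        for rule in sorted(self.rules, key=lambda r: r.priority):
            if not rule.matches(request):
                continue
            if rule.action == "COUNT":
                # COUNT is non-terminating: record the match, keep evaluating.
                self.counts[rule.name] = self.counts.get(rule.name, 0) + 1
                continue
            return rule.action  # ALLOW or BLOCK ends evaluation
        return self.default_action


acl = WebACL(
    rules=[
        Rule("block-admin", 1, lambda r: r["path"].startswith("/admin"), "BLOCK"),
        Rule("count-query", 2, lambda r: "?" in r["path"], "COUNT"),
    ],
    default_action="ALLOW",
)
print(acl.evaluate({"path": "/admin/login"}))   # BLOCK
print(acl.evaluate({"path": "/search?q=waf"}))  # ALLOW
print(acl.counts)                               # {'count-query': 1}
```

Because `block-admin` terminates evaluation, the first request never reaches `count-query`, which is why the counter only records the second request.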

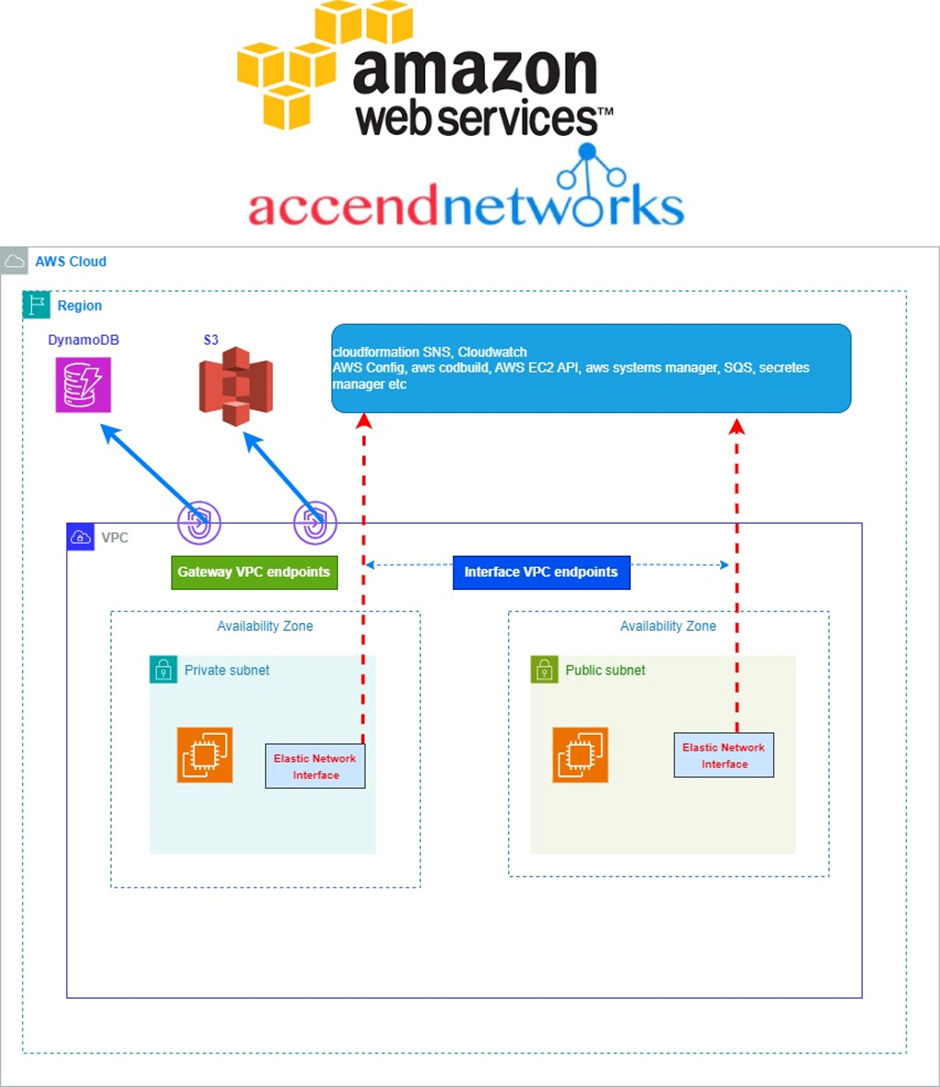

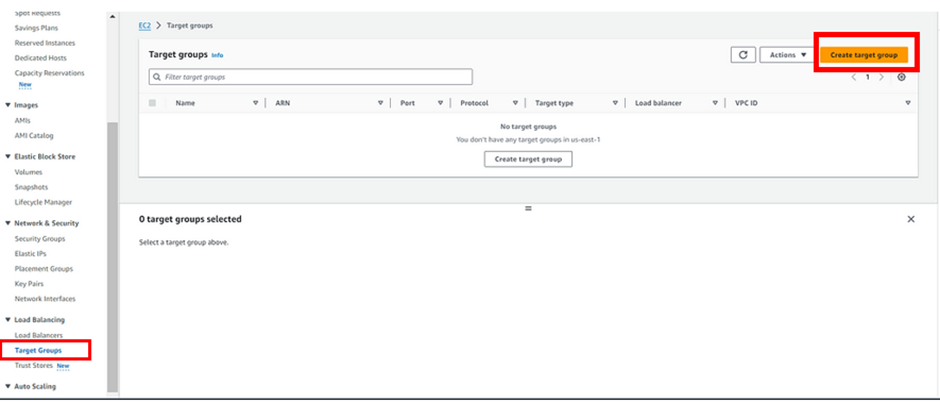

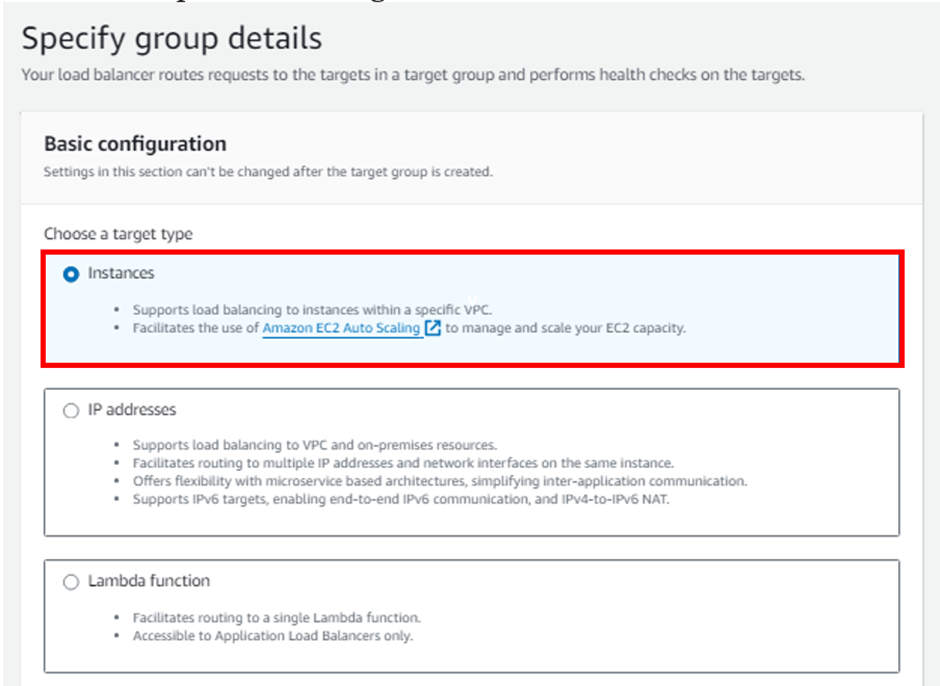

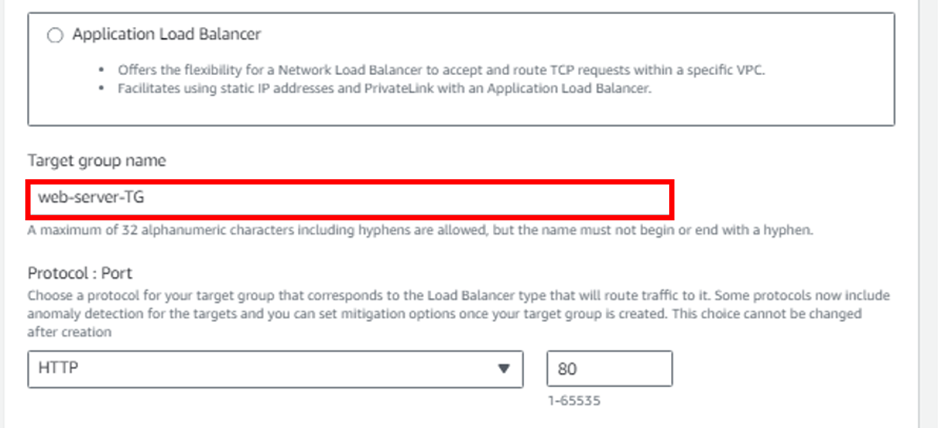

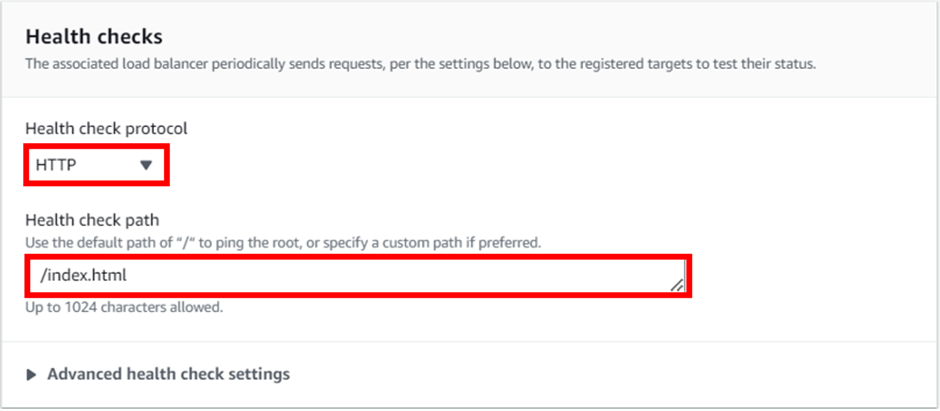

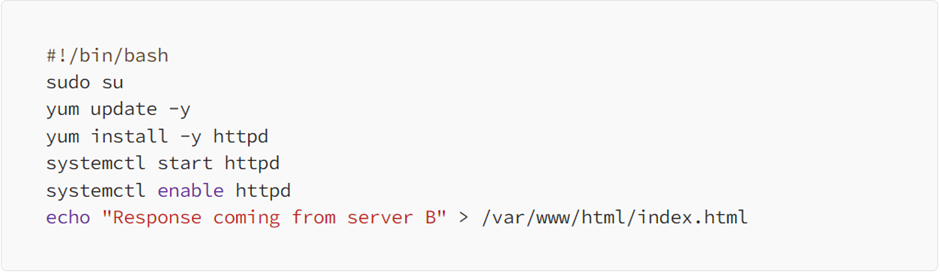

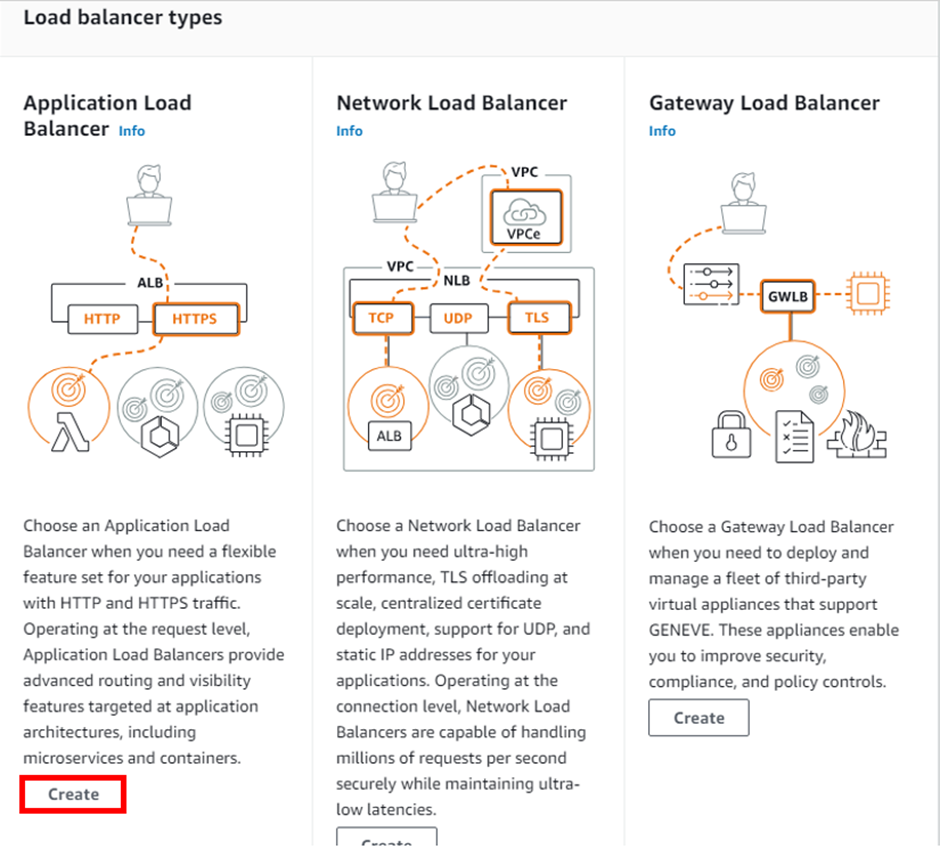

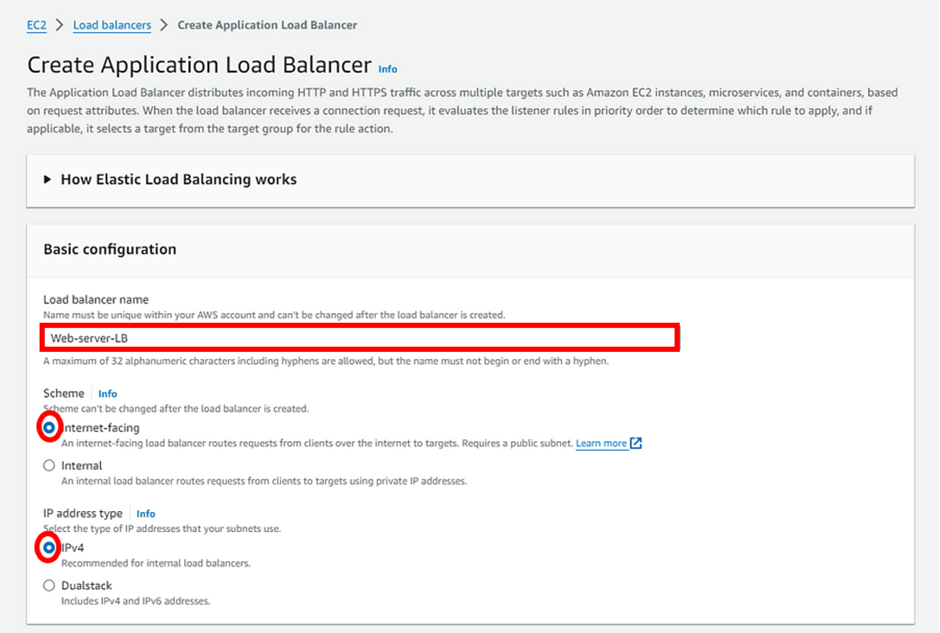

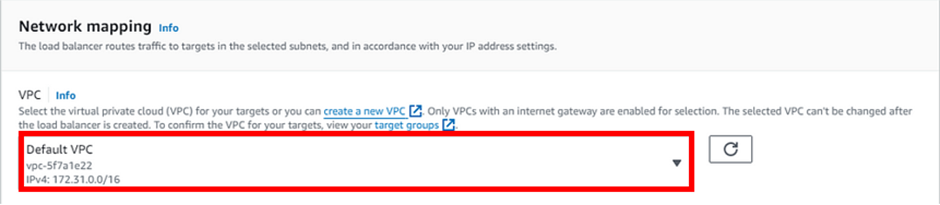

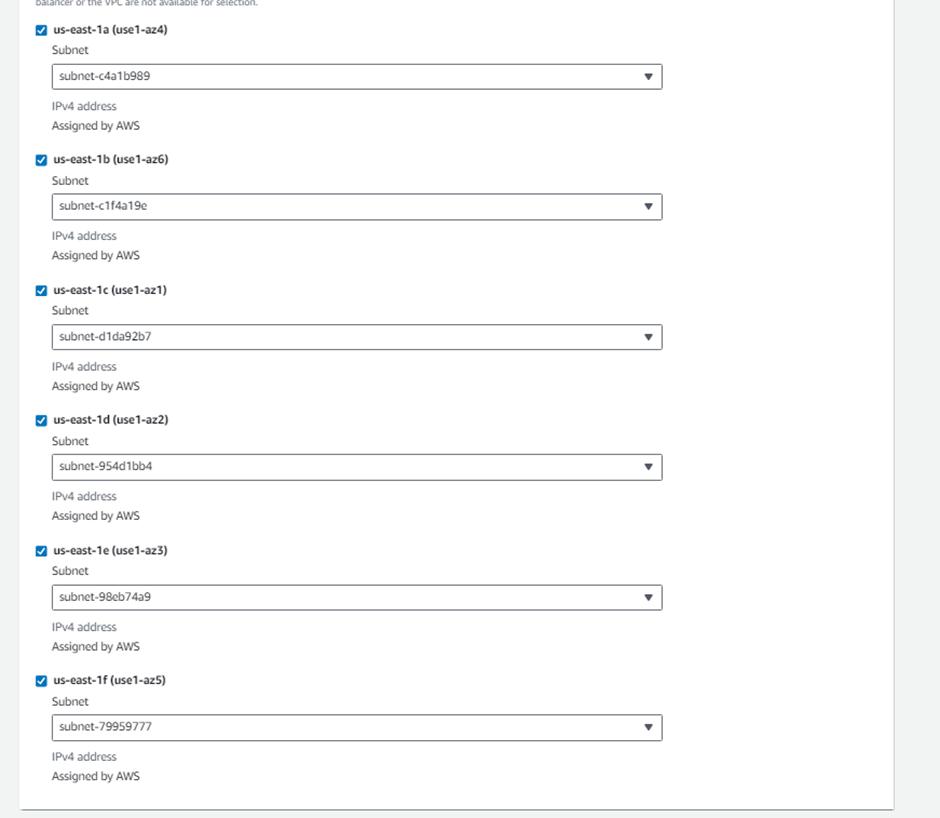

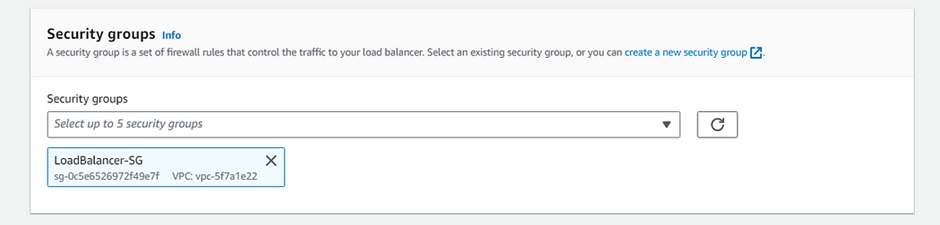

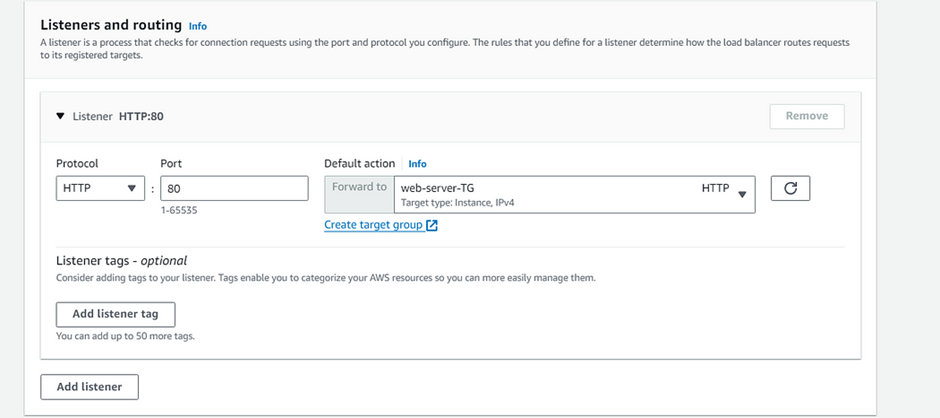

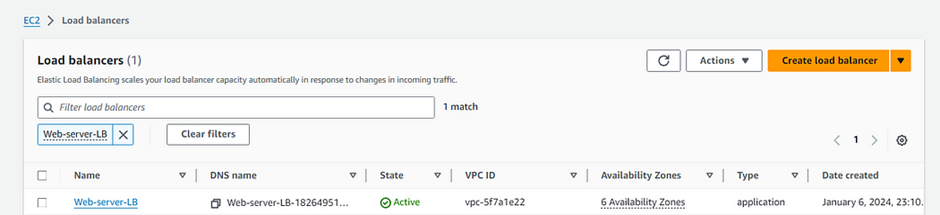

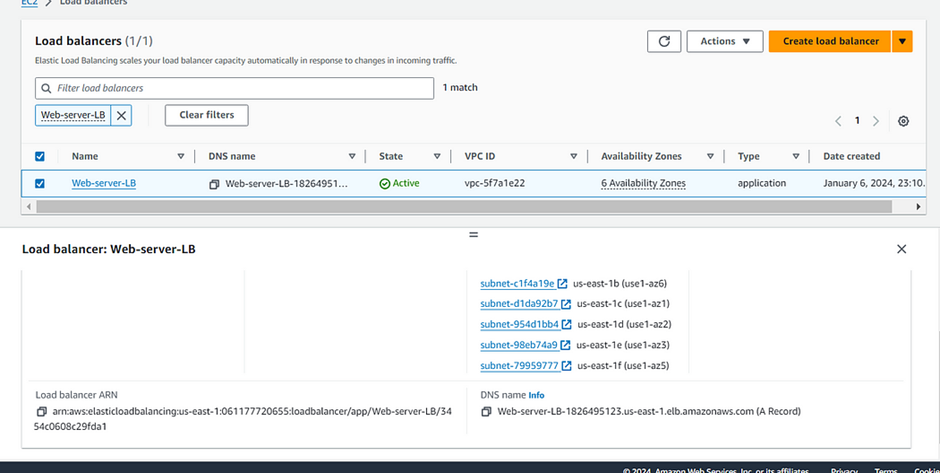

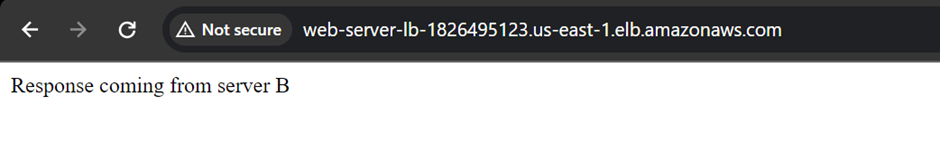

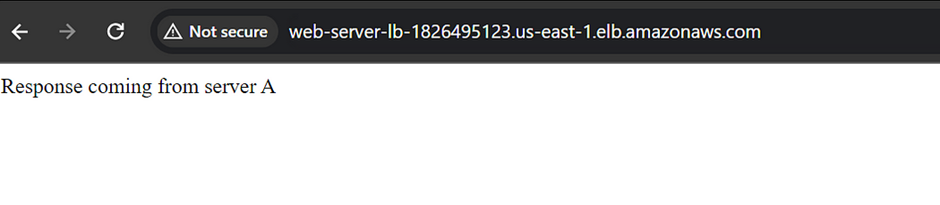

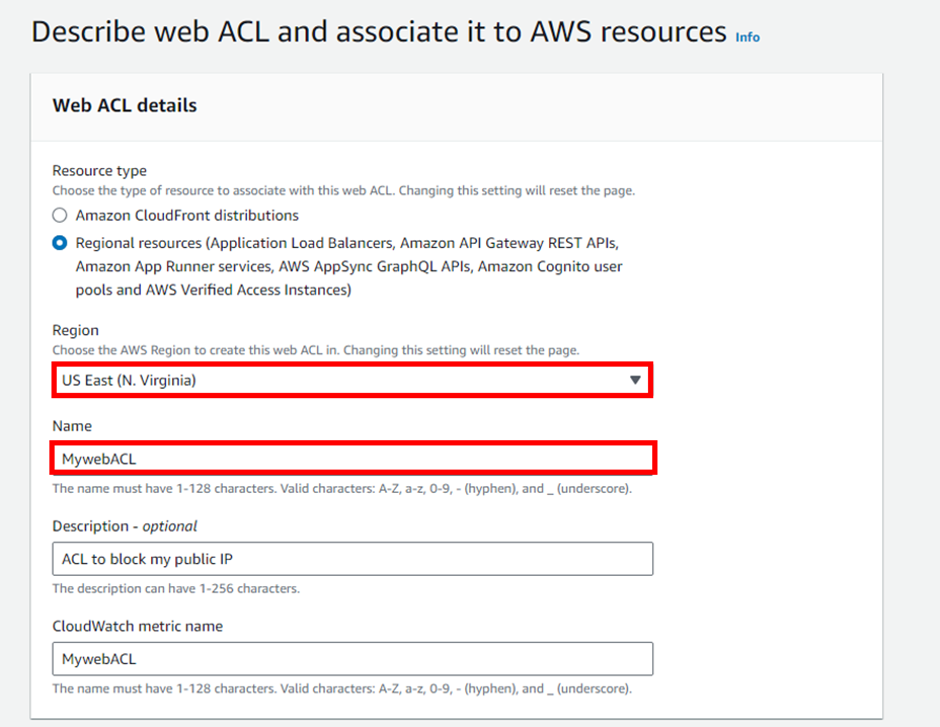

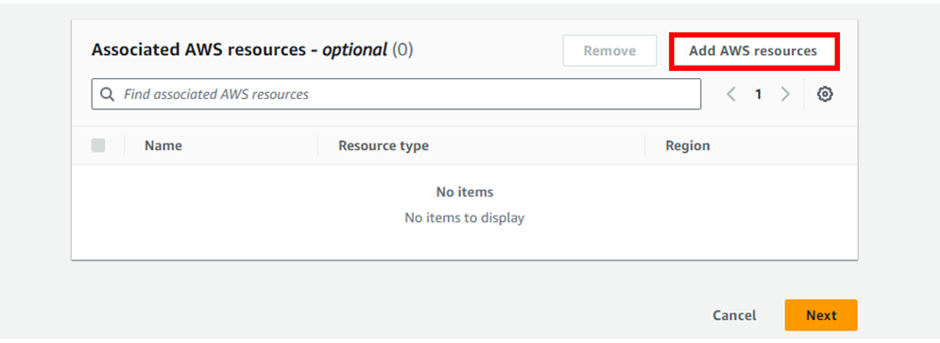

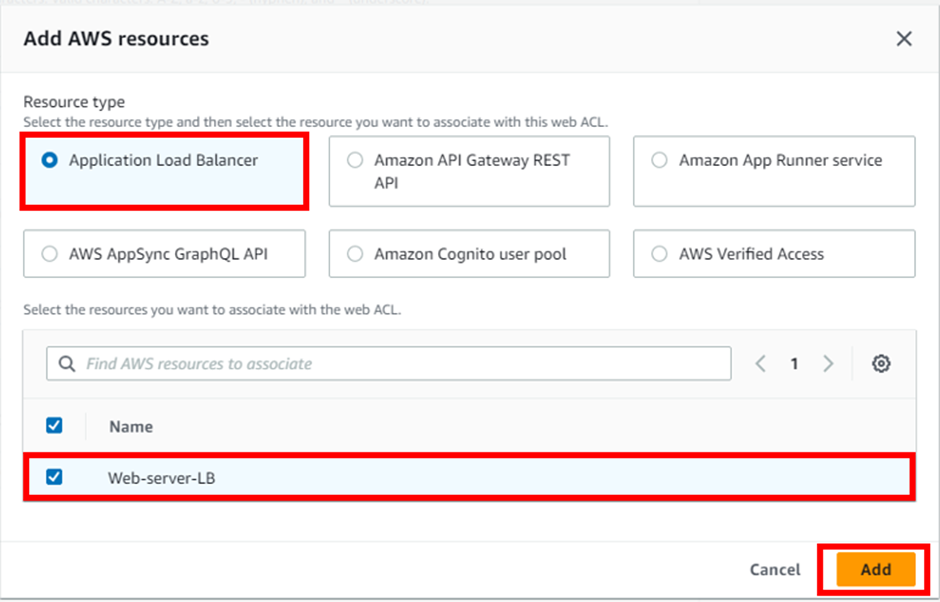

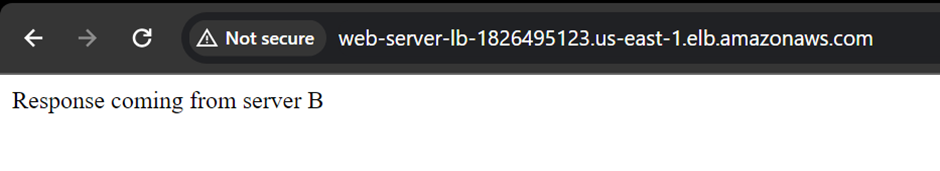

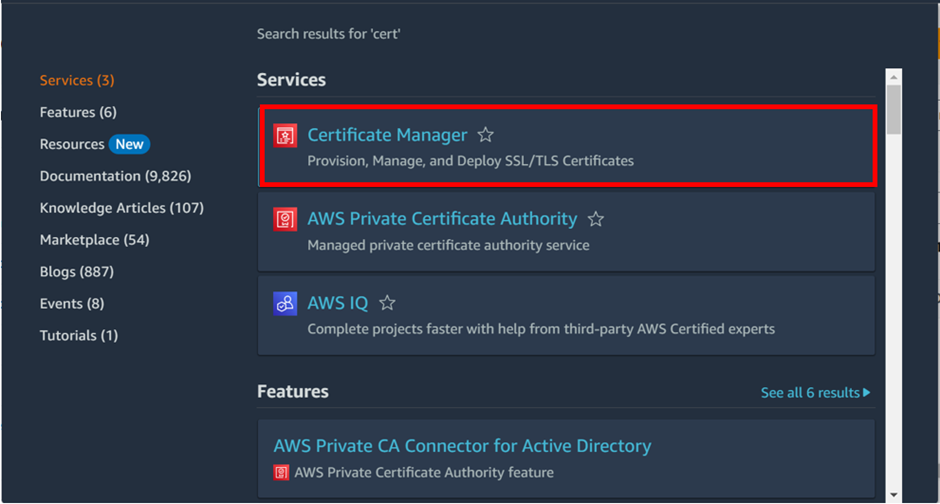

AWS WAF lets you control how protected resources respond to web requests. Supported resources include Amazon CloudFront distributions, Amazon API Gateway REST APIs, Application Load Balancers (ALBs), and AWS AppSync GraphQL APIs.
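Where a web ACL lives depends on which of these resources it protects. In the wafv2 API, web ACLs for CloudFront use `Scope="CLOUDFRONT"` and are managed through the US East (N. Virginia) Region, while web ACLs for regional resources use `Scope="REGIONAL"` in the same Region as the resource. The helper below is a hypothetical sketch of that decision; the resource-type labels are made up for this example.

```python
from __future__ import annotations

# Hypothetical labels for the regional resource types named above.
REGIONAL_RESOURCES = {"alb", "api_gateway_rest_api", "appsync_graphql_api"}


def wafv2_scope(resource_type: str, resource_region: str | None = None) -> tuple[str, str]:
    """Return (Scope, API Region) for a web ACL protecting the given resource."""
    if resource_type == "cloudfront":
        # CloudFront is global; its web ACLs are managed in us-east-1. The
        # distribution is then linked to the web ACL through its own
        # configuration rather than through the wafv2 AssociateWebACL call.
        return "CLOUDFRONT", "us-east-1"
    if resource_type in REGIONAL_RESOURCES:
        if resource_region is None:
            raise ValueError("a regional resource needs its AWS Region")
        # Regional web ACLs must be in the same Region as the resource.
        return "REGIONAL", resource_region
    raise ValueError(f"unsupported resource type: {resource_type}")


print(wafv2_scope("cloudfront"))        # ('CLOUDFRONT', 'us-east-1')
print(wafv2_scope("alb", "eu-west-1"))  # ('REGIONAL', 'eu-west-1')
```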

AWS WAF components

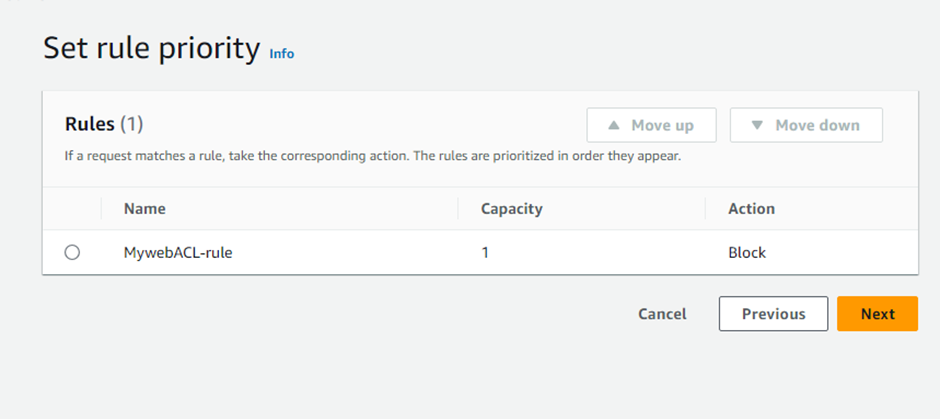

Rule groups are reusable collections of rules. AWS Managed Rules and AWS Marketplace sellers provide managed rule groups, and you can also define your own rule groups.
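A web ACL uses a managed rule group by adding one rule that references it. The sketch below builds such rules as dictionaries shaped like the wafv2 `Rule` structure, using two AWS managed rule groups (`AWSManagedRulesCommonRuleSet` and `AWSManagedRulesSQLiRuleSet`). The helper function and its `count_only` flag are hypothetical conveniences. A rule that references a rule group takes `OverrideAction` instead of `Action`: `{"None": {}}` keeps the group's own rule actions, while `{"Count": {}}` makes every rule in the group count only.

```python
from __future__ import annotations


def managed_rule_group_rule(group_name: str, priority: int,
                            count_only: bool = False, vendor: str = "AWS") -> dict:
    """Build a rule that references a managed rule group (hypothetical helper)."""
    return {
        "Name": f"{vendor}-{group_name}",
        "Priority": priority,
        "Statement": {
            "ManagedRuleGroupStatement": {"VendorName": vendor, "Name": group_name}
        },
        # Count-only mode is handy for observing a group before enforcing it.
        "OverrideAction": {"Count": {}} if count_only else {"None": {}},
        "VisibilityConfig": {
            "SampledRequestsEnabled": True,
            "CloudWatchMetricsEnabled": True,
            "MetricName": f"{vendor}-{group_name}",
        },
    }


rules = [
    managed_rule_group_rule("AWSManagedRulesCommonRuleSet", priority=0),
    managed_rule_group_rule("AWSManagedRulesSQLiRuleSet", priority=1, count_only=True),
]
```

Starting a new group in count-only mode and switching it to `{"None": {}}` after reviewing the counted requests is one way to reduce the risk of blocking legitimate users.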

AWS Managed Rule groups

Free rule groups

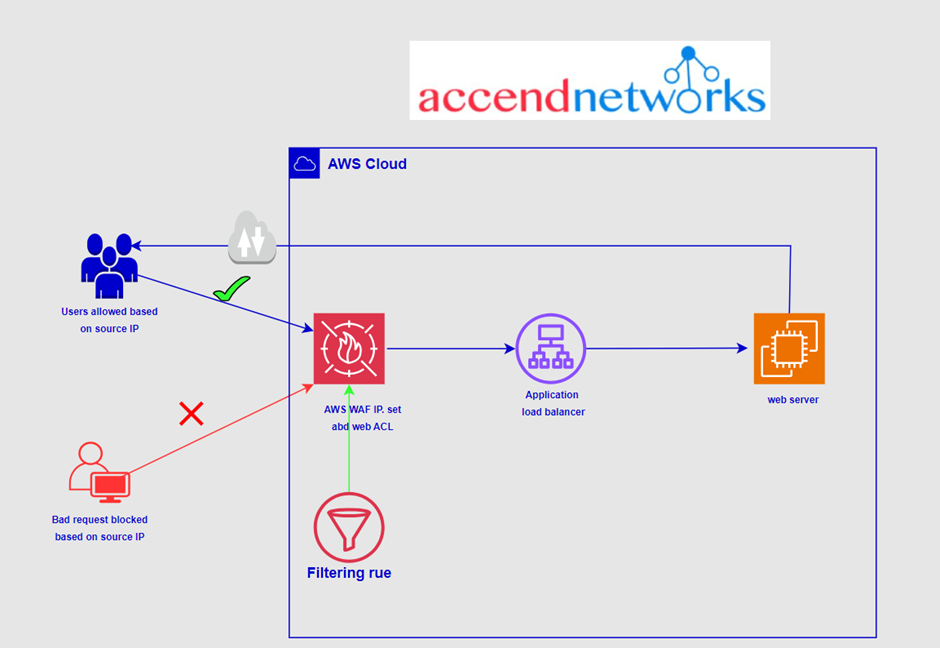

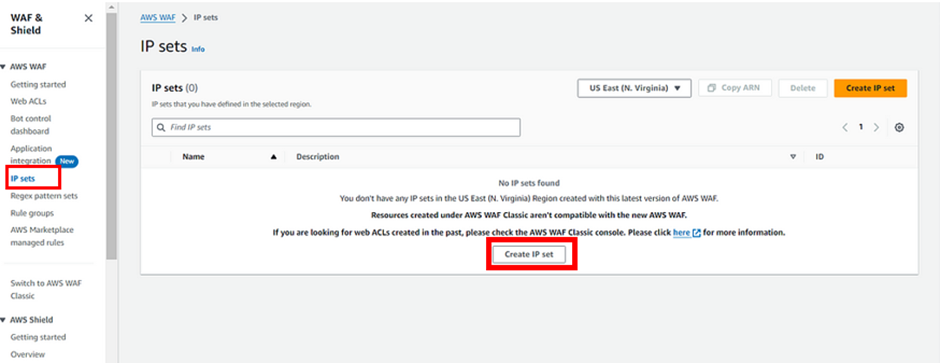

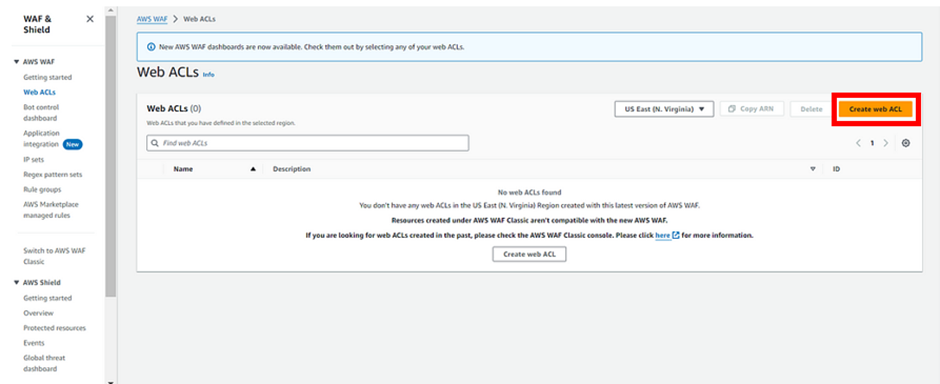

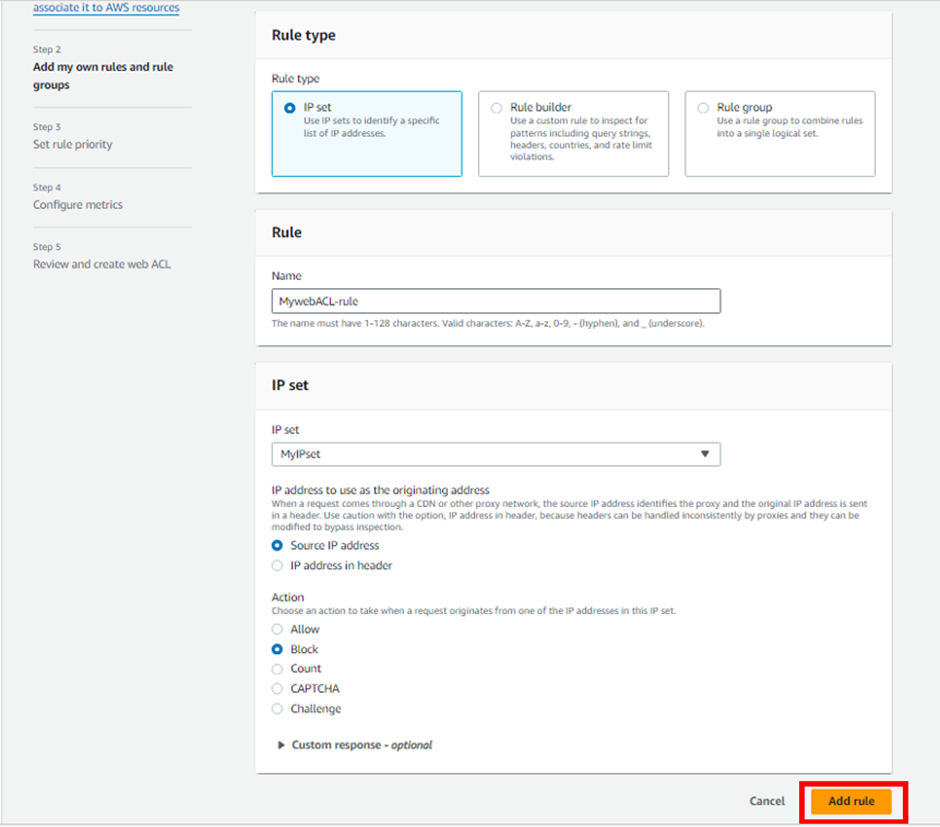

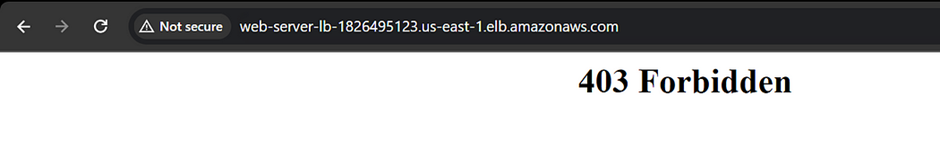

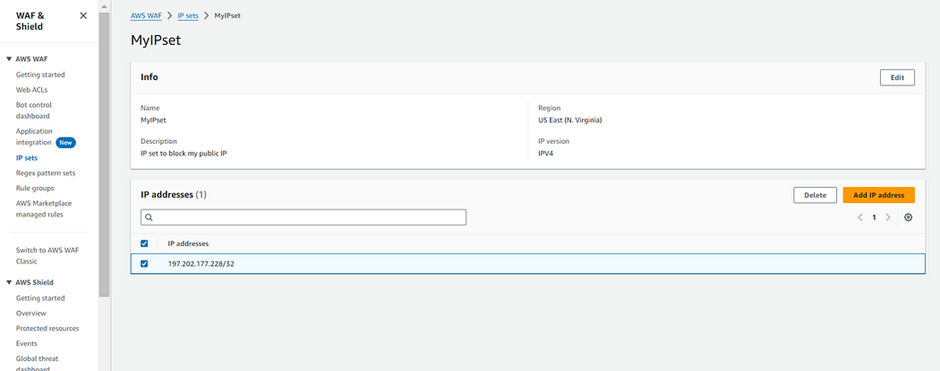

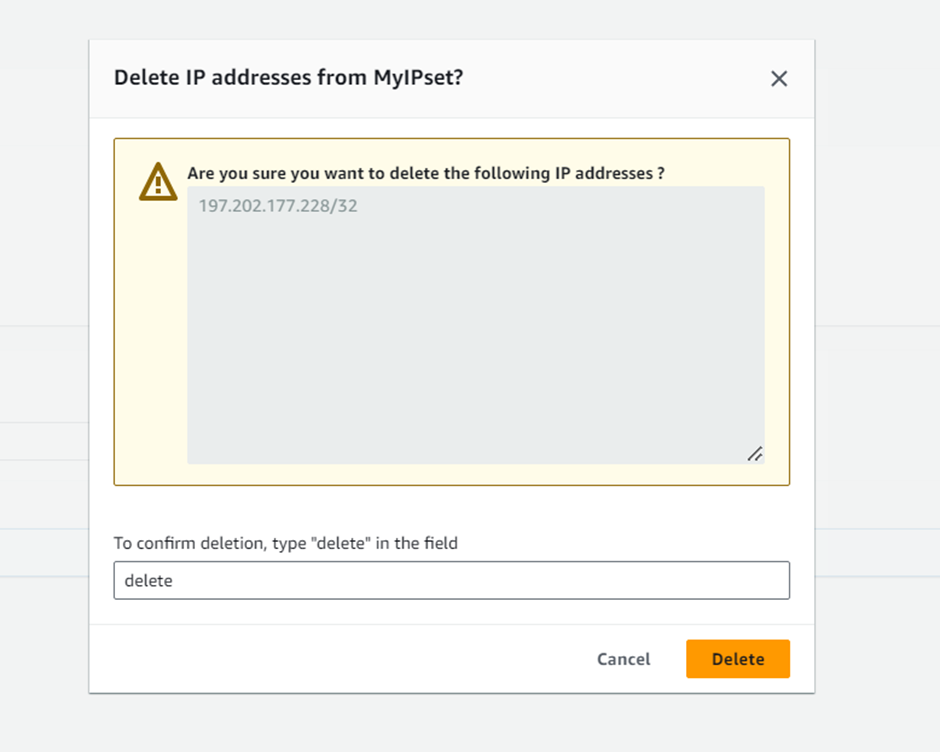

You can create custom rules to block, allow, or count traffic that:

- Originates from a country

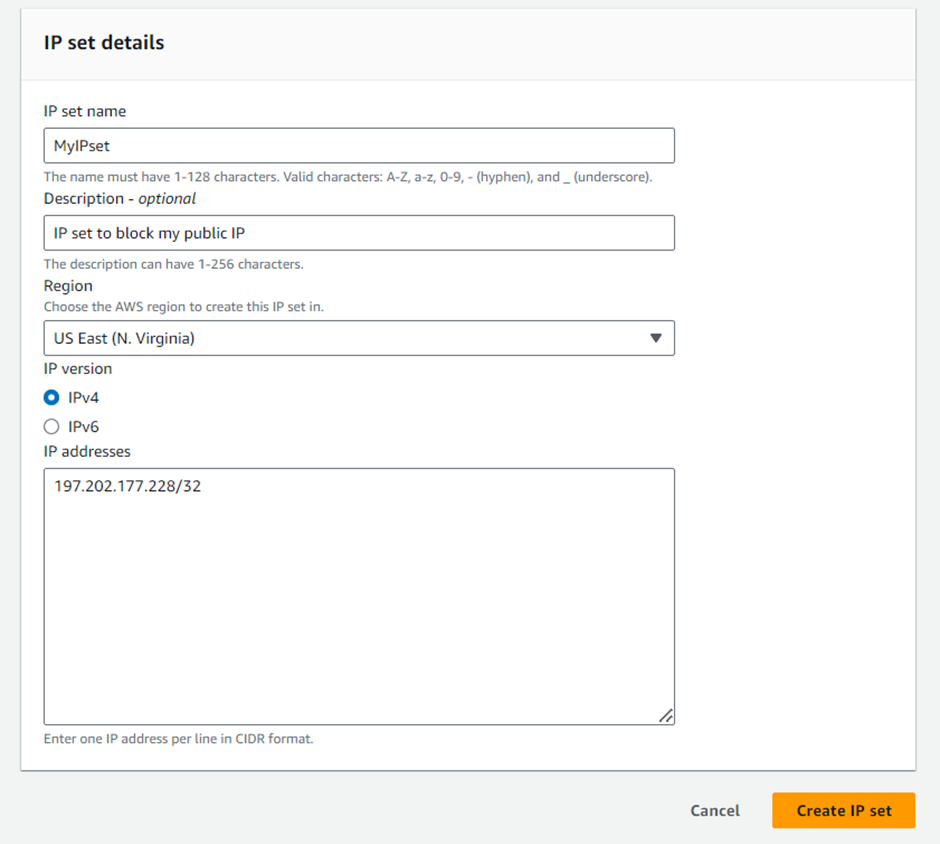

- Originates from a CIDR range

- Contains a specific header, URI path, or body

You also set the web ACL's default action, which decides whether traffic that matches none of the web ACL's rules is allowed or blocked.
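The custom rules listed above map onto wafv2 rule statements: `GeoMatchStatement` for countries, `IPSetReferenceStatement` for CIDR ranges (the ranges themselves live in a separately created IP set), and `ByteMatchStatement` for matching a header, URI path, or body. The sketch below assembles these into parameters shaped like a wafv2 `CreateWebACL` request. The web ACL name, rule names, country codes, `/admin` path, and IP set ARN are all hypothetical examples.

```python
from __future__ import annotations


def visibility(metric_name: str) -> dict:
    # Rules and web ACLs both need a VisibilityConfig block.
    return {"SampledRequestsEnabled": True, "CloudWatchMetricsEnabled": True,
            "MetricName": metric_name}


def web_acl_params(ip_set_arn: str) -> dict:
    """Parameters shaped like a wafv2 CreateWebACL request (hypothetical values)."""
    rules = [
        {   # Count traffic from listed countries (ISO 3166 codes, examples only).
            "Name": "count-listed-countries",
            "Priority": 1,
            "Statement": {"GeoMatchStatement": {"CountryCodes": ["DE", "FR"]}},
            "Action": {"Count": {}},
            "VisibilityConfig": visibility("CountListedCountries"),
        },
        {   # Allow traffic from CIDR ranges stored in an existing IP set.
            "Name": "allow-office-ips",
            "Priority": 2,
            "Statement": {"IPSetReferenceStatement": {"ARN": ip_set_arn}},
            "Action": {"Allow": {}},
            "VisibilityConfig": visibility("AllowOfficeIps"),
        },
        {   # Block any other request whose URI path starts with /admin.
            "Name": "block-admin-path",
            "Priority": 3,
            "Statement": {"ByteMatchStatement": {
                "SearchString": b"/admin",
                "FieldToMatch": {"UriPath": {}},
                "TextTransformations": [{"Priority": 0, "Type": "LOWERCASE"}],
                "PositionalConstraint": "STARTS_WITH",
            }},
            "Action": {"Block": {}},
            "VisibilityConfig": visibility("BlockAdminPath"),
        },
    ]
    return {
        "Name": "example-web-acl",
        "Scope": "REGIONAL",
        # Applies when no rule terminates evaluation: Allow or Block only.
        "DefaultAction": {"Allow": {}},
        "Rules": rules,
        "VisibilityConfig": visibility("ExampleWebAcl"),
    }


params = web_acl_params(
    "arn:aws:wafv2:eu-west-1:123456789012:regional/ipset/office-ips/EXAMPLE")
# With boto3 installed and credentials configured, these could be passed as:
# boto3.client("wafv2", region_name="eu-west-1").create_web_acl(**params)
```

Placing `allow-office-ips` before `block-admin-path` means requests from the office ranges can still reach `/admin`, because an `Allow` match ends evaluation before the block rule is checked.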

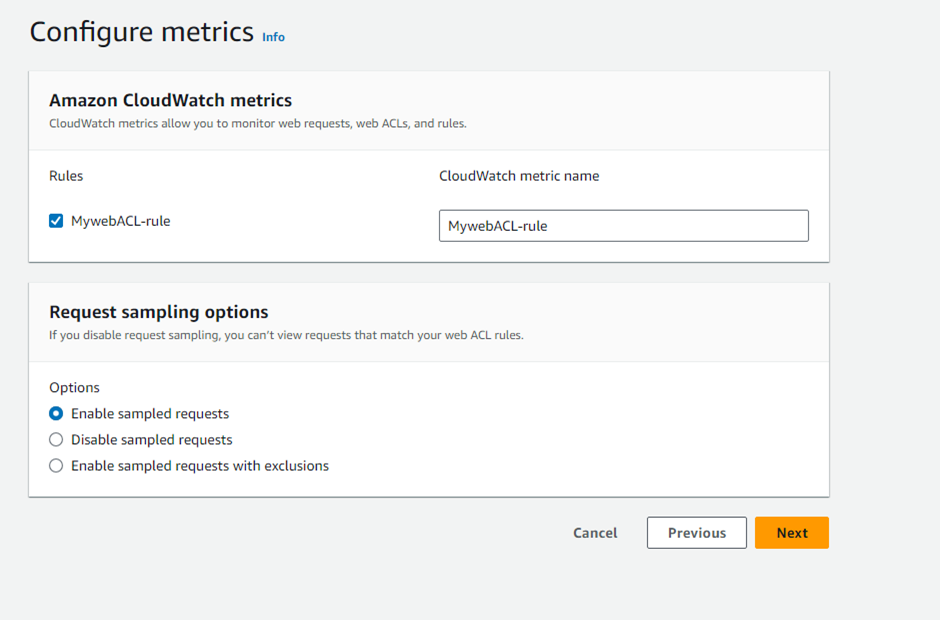

AWS WAF charges are based on the number of web access control lists (web ACLs) that you create, the number of rules that you add per web ACL, and the number of web requests that you receive.

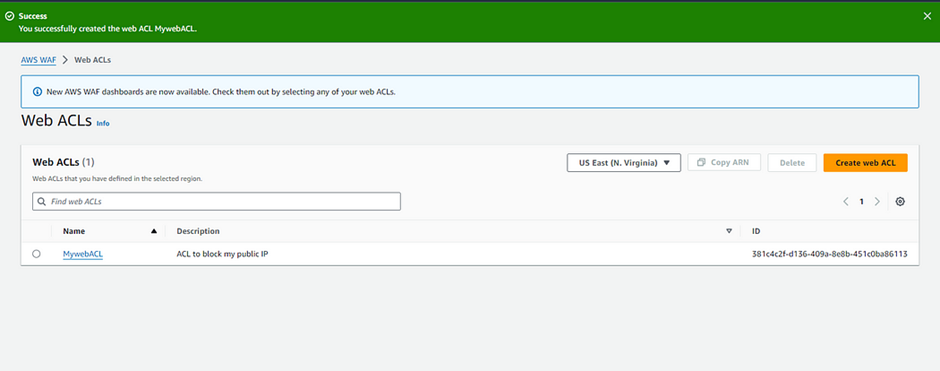

- Web ACL: $5.00 per month for each web ACL
- Rule: $1.00 per month for each rule
- Requests: $0.60 per 1 million requests (for inspection up to 1,500 WCUs)
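Using the three list prices above, a rough monthly estimate is simple arithmetic. The function below is a minimal sketch that covers only these base charges; it assumes the quoted prices and ignores anything else, such as AWS Marketplace subscription fees or extra charges for exceeding 1,500 WCUs. Always confirm against the current AWS WAF pricing page.

```python
WEB_ACL_MONTHLY = 5.00       # USD per web ACL per month
RULE_MONTHLY = 1.00          # USD per rule per month
PER_MILLION_REQUESTS = 0.60  # USD per 1 million requests (up to 1,500 WCUs)


def estimate_monthly_cost(web_acls: int, rules: int, requests: int) -> float:
    """Base AWS WAF charges only; `rules` is the total across all web ACLs."""
    cost = (web_acls * WEB_ACL_MONTHLY
            + rules * RULE_MONTHLY
            + requests / 1_000_000 * PER_MILLION_REQUESTS)
    return round(cost, 2)


# One web ACL with 10 rules serving 10 million requests in a month:
print(estimate_monthly_cost(1, 10, 10_000_000))  # 21.0  (5 + 10 + 6)
```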

AWS WAF web ACL capacity units (WCUs)

Rule group WCUs
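Every rule and rule group in a web ACL consumes web ACL capacity units (WCUs), and a rule group's capacity is fixed when the group is created, so the web ACL counts that declared capacity. The sketch below totals capacities and compares them with two thresholds: the 1,500 WCUs covered by the base request price quoted above, and a web ACL maximum of 5,000 WCUs, which is an assumption based on AWS quotas at the time of writing. The rule names and capacity values are hypothetical; look up the real capacity of each rule group before relying on the result.

```python
from __future__ import annotations

BASE_PRICE_WCU_LIMIT = 1_500  # base request price covers up to this many WCUs
MAX_WEB_ACL_WCU = 5_000       # assumed web ACL capacity limit; check current quotas


def check_capacity(rule_wcus: dict[str, int]) -> tuple[int, str]:
    """Sum rule and rule group capacities and classify the total."""
    total = sum(rule_wcus.values())
    if total > MAX_WEB_ACL_WCU:
        status = "over limit"
    elif total > BASE_PRICE_WCU_LIMIT:
        status = "extra request charges"
    else:
        status = "within base price"
    return total, status


# Hypothetical capacities for illustration only.
print(check_capacity({"common-rule-set": 700, "sqli-rule-set": 200, "geo-rule": 1}))
# (901, 'within base price')
```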

Conclusion

AWS WAF provides a managed solution to protect your web applications against common exploits and vulnerabilities. By leveraging WAF’s advanced rulesets and integration with services like Application Load Balancer, you can effectively filter malicious web traffic while allowing legitimate users access.

Stay tuned for more.

If you have any questions concerning this article or have an AWS project that requires our assistance, please reach out to us by leaving a comment below or email us at sales@accendnetworks.com.

Thank you!